
The Pascal GTX 1070 Owners Thread

- Thread starter Kaapstad


My evidence is my own personal experience. I can play BF1 multiplayer with 64 people, a CPU-demanding situation, at 1080p and a solid 60 fps absolutely fine with a 3.4 GHz 3570K.

So you have posted no evidence then.

And who said the 4670K was no good for 1080p?

Do get your facts right please.

Please don't talk to me about 'facts' when you're straight up talking nonsense.

And YOU said that a 4790K at 3.9 GHz was "too slow" for 1080p gaming. You said exactly that, which would necessarily imply that a stock 4670K would be even worse.

It's a seriously dumb claim and I'm shocked to hear supposed hardware enthusiasts saying this stuff, and even others supporting you!

Don't get me wrong, I'm not claiming that overclocking the 4790K wouldn't help more at 1080p than at a higher resolution, but to say it is 'too slow' is complete rubbish. That's a damn good CPU and 3.9 GHz is nothing to scoff at.

Have you got a 4690K? I did; in fact I still do, as it's in my media server now.

You're making broad, sweeping statements when, as Kaap has already said, you have no evidence for this.

I game at high FPS as I have a 144 Hz monitor, so anything less than 140 fps is noticeable to me. I do not want to settle for 60 fps.

At 1080p, whether at low, medium, high or ultra settings, my CPU was at 100% in BF1 multiplayer games and my GPU was hardly being used.

I switched to a 6-core HT system and bingo, GPU usage is now 90-100% and I've got a constant 140 fps on ultra settings. I'm telling you how it is.

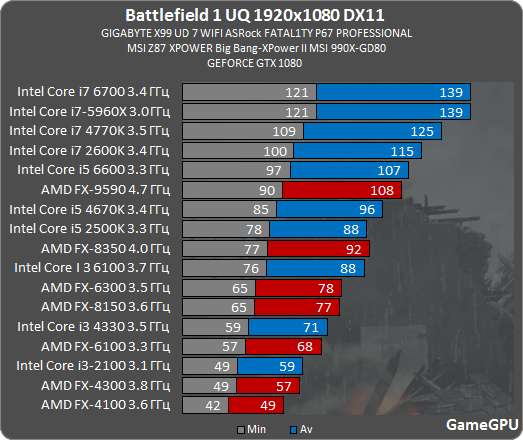

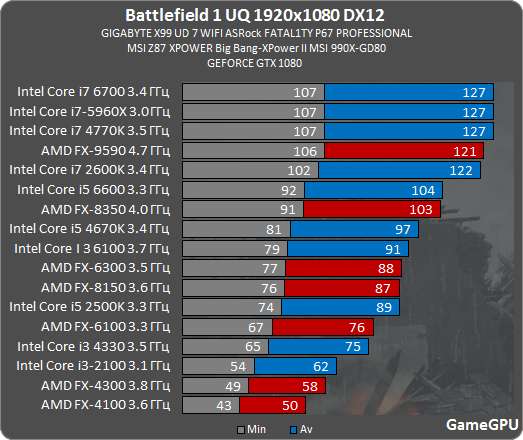

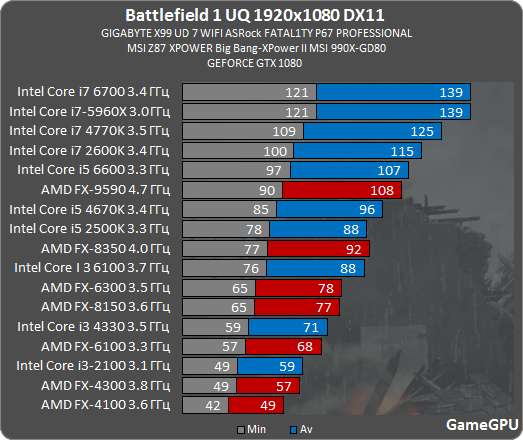

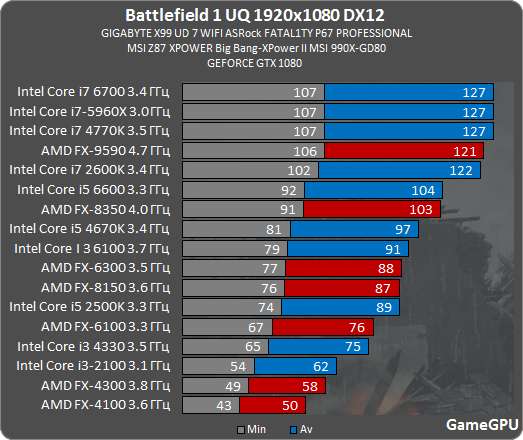

Here you go, these images may help you process this 'absurd' information.

6700K myself. Jesus, some serious CPU usage during BF1 multiplayer. Only spotted it myself last night. Smooth as silk though.

Yup, what I'm seeing there is that even a 3.3 GHz 2500K is still *plenty* good for achieving good performance.

Nobody specified anything about playing with 144 Hz monitors. The claim was that a 3.9 GHz 4790K was 'too slow' for 1080p gaming. That's it. That's what I'm disputing.

Please pay attention here and don't confuse the context of what I'm saying.

EDIT: And really, if we're talking 144 Hz monitors, then people at higher resolutions will face the same issues in terms of CPU bottlenecking. If your system can't do 140 fps at 1080p with a given CPU, it certainly isn't gonna do any better when you turn the resolution up. You're just more likely to introduce a GPU bottleneck at some point, reducing performance even more.

Your CPU could do with an overclock, as 3.9 GHz is a little too slow for 1080p.

I did not mention refresh rate, you are busted.

As to resolution, the higher you go the easier it is for the CPU.

I'm at 3440x1440 and still getting 99% usage.

Is that a bad thing? Game is smooth, and I have it under an H115 with temps at 65 max.

I do see your point, Seanspeed, but please bear in mind that owners of the earlier Sandy Bridge CPUs were clocking their processors to around 4.5 GHz on average, and that was then.

Now, for example, the ideal CPU requirement for Gears of War 4 is a Haswell i7 at 4 GHz.

I am not defending anyone here; I am just saying that to get the best out of a GTX 1070 and higher, a CPU overclock to over 4 GHz is recommended, in my opinion.

I do apologize if I caused any offense.

Depends what settings you are using.

If you are using lower settings and getting high fps, then it will impact the CPU.

Normally at 2160p you are limited to a 60 Hz monitor, so high settings and low fps make it easy for the CPU.

The CPU does not care what settings are used in the frames, just how many there are.
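Kaap's point about frame count can be put into numbers with a toy model. It assumes the CPU and GPU work as a pipeline, so the slower of the two sets the frame interval, and it uses hypothetical per-frame costs (5 ms of CPU work, 4 ms or 16 ms of GPU work) rather than anything measured in a real game.

```python
def cpu_load(cpu_ms_per_frame: float, gpu_ms_per_frame: float) -> tuple[float, float]:
    """Return (fps, fraction of time the CPU is busy) for a toy two-stage pipeline.

    Assumption: CPU and GPU overlap their work, so the slower stage sets the
    frame interval. Real games add driver overhead, queuing and multithreading.
    """
    frame_ms = max(cpu_ms_per_frame, gpu_ms_per_frame)  # slower stage paces the pipeline
    fps = 1000.0 / frame_ms
    busy = cpu_ms_per_frame / frame_ms  # CPU work per frame is the same either way
    return fps, busy


# Hypothetical costs: 5 ms of CPU work per frame at any resolution.
low_res = cpu_load(5.0, 4.0)    # GPU finishes first -> CPU-bound
high_res = cpu_load(5.0, 16.0)  # GPU takes longer -> GPU-bound
print(f"CPU-bound: {low_res[0]:.1f} fps, CPU busy {low_res[1]:.0%}")
print(f"GPU-bound: {high_res[0]:.1f} fps, CPU busy {high_res[1]:.0%}")
```

In this sketch the CPU's work per frame never changes; only the number of frames requested per second, and therefore its busy fraction, goes down when the GPU becomes the limit.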

What the hell are you talking about? I responded to somebody else who was talking about 144 Hz monitors.

And you're right, you *didn't* mention refresh rate, which hurts your argument greatly. If you were claiming that a 3.9 GHz Haswell i7 wasn't fast enough for 144 fps gaming at 1080p, you might have had an inkling of an argument. But you didn't. Your claim was that it was 'too slow' for 1080p. That's it.

Even if that were true (which it isn't), it doesn't support your claim that a Haswell i7 at 3.9 GHz is 'too slow' for 1080p gaming.

You seem to not understand that going to higher resolutions simply means you are more likely to run into GPU bottlenecks. Honestly, most people are already hitting GPU bottlenecks in 90% of cases at 1080p. But either way, introducing a GPU bottleneck by raising the resolution, where before there was a CPU bottleneck, just means you're going to get WORSE performance than had you just been hitting a CPU bottleneck. This is not a better scenario.
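The bottleneck argument above can be sketched with a toy model. It assumes the frame rate is set by whichever of CPU or GPU is slower per frame, that CPU time per frame does not change with resolution, and that GPU time scales linearly with pixel count; the 7 ms and 5 ms figures are hypothetical. Under those assumptions, raising the resolution can move the bottleneck from the CPU to the GPU but never raises the frame rate.

```python
BASE_PIXELS = 1920 * 1080

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "2160p": (3840, 2160),
}


def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate when the slower of CPU and GPU sets the pace (toy model)."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def sweep(cpu_ms: float = 7.0, gpu_ms_at_1080p: float = 5.0) -> list[tuple[str, float, str]]:
    """Return (resolution, fps, bottleneck) for each resolution.

    Hypothetical inputs: CPU cost is fixed per frame; GPU cost is assumed to
    scale linearly with the number of pixels rendered.
    """
    results = []
    for name, (width, height) in RESOLUTIONS.items():
        gpu_ms = gpu_ms_at_1080p * (width * height) / BASE_PIXELS
        bottleneck = "CPU" if cpu_ms >= gpu_ms else "GPU"
        results.append((name, fps(cpu_ms, gpu_ms), bottleneck))
    return results


for name, rate, bottleneck in sweep():
    print(f"{name}: {rate:.1f} fps ({bottleneck}-bound)")
```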

Thanks Kaap. I have everything turned up but AA down a notch or two to try to achieve 100 fps with G-Sync, at 100% res. Mind you, I sold a 1070, so I'm using a single card until the Titan arrives. The 1070 performs well; I'm very surprised. SLI seemed to be worse for me anyway. That and Gears pushed me toward the Titan.

Recommended, sure. If you notice, I am also recommending that the person overclocks their CPU. But not because it's simply 'too slow' if they don't; it's because there will be the occasional situation or title where it does help some.

I agreed.

This is also not correct: "The CPU does not care what settings are used in the frames, just how many there are."

There are settings in games that are largely or partly CPU-dependent. A big one being draw distance-related settings, whether environmental, object or shadow-related. These involve extra draw calls for the system and the CPU is the processor that typically handles that. It's a big reason that open-world titles can often be more CPU-heavy than other types of games.
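To illustrate why a draw distance setting can land on the CPU, here is a toy sketch. It assumes a flat map with a uniform, hypothetical object density, one draw call per visible object, and a fixed, hypothetical CPU cost per draw call; real engines batch, cull and multithread draw submission, so the numbers are illustrative only. Because the visible area grows with the square of the draw distance, doubling the distance roughly quadruples the draw calls and lowers the frame rate the CPU alone could sustain.

```python
import math

# All constants below are hypothetical, chosen only to illustrate the trend.
OBJECTS_PER_M2 = 0.002     # assumed uniform object density on a flat map
BASE_CPU_MS = 4.0          # assumed per-frame CPU cost of game logic, physics, etc.
MS_PER_DRAW_CALL = 0.0015  # assumed CPU cost of submitting one draw call


def draw_calls(draw_distance_m: float) -> int:
    """Objects within a circle of radius draw_distance_m, one draw call each."""
    return round(OBJECTS_PER_M2 * math.pi * draw_distance_m ** 2)


def cpu_frame_ms(draw_distance_m: float) -> float:
    """CPU time per frame: fixed work plus draw-call submission."""
    return BASE_CPU_MS + MS_PER_DRAW_CALL * draw_calls(draw_distance_m)


def cpu_fps_cap(draw_distance_m: float) -> float:
    """Highest frame rate the CPU could sustain, ignoring the GPU entirely."""
    return 1000.0 / cpu_frame_ms(draw_distance_m)


for distance in (250, 500, 1000):
    print(f"{distance} m: {draw_calls(distance)} draw calls, "
          f"CPU cap {cpu_fps_cap(distance):.0f} fps")
```

Note that nothing here depends on resolution, which is the sense in which such a setting is CPU-side rather than GPU-side in this sketch.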

If you're getting the performance you desire, then don't worry about CPU usage being high. It's only a problem if it's causing performance issues.

And Kaap is wrong. CPUs don't 'take a breather' just because a GPU bottleneck is introduced. It is still required to do the same workload it would if you were running a lower resolution.

Cheers Sean.

I only game on a couple of 2160p monitors, what would I know lol.

I think you need to give this a break as you are making yourself look silly.

Obviously not enough.

Buying expensive stuff does not mean you understand the technology behind it better than others, unfortunately for you.

Again, you seem to misunderstand that hitting a GPU bottleneck at a high resolution DOES NOT mean the CPU is alleviated of any workload. The CPU does not care what resolution a piece of gaming software is running at. Just because it's not the performance bottleneck anymore doesn't mean it's taking a breather. It's simply not how it works. And hitting a GPU bottleneck at a higher resolution when the CPU was the bottleneck at a lower resolution simply means your performance is WORSE than it was before. This is not a better scenario to be in unless you are purposefully prioritizing IQ over framerate.

By correcting your inaccurate or exaggerated claims? Not sure how that makes me look silly.

Post some evidence to back up your claims.

You obviously have a bee in your bonnet about this but you need to get over it.

2 x GTX 1080 @2038/2778

Hitman Absolution

Max Settings

1080p

6950X @4.0

6950X @4.4

Nice increase in fps just by overclocking my CPU a little bit and very welcome if I was using a 1080p monitor with a high refresh rate.
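The screenshots with the actual Hitman numbers did not survive, so the sketch below does not reproduce them. It only shows the most a 4.0 to 4.4 GHz overclock could add under a simple assumption: CPU time per frame scales inversely with clock speed (ignoring memory latency, boost behaviour and so on), and the frame rate is set by the slower of CPU and GPU. The per-frame costs are hypothetical. When fully CPU-bound, the gain tops out at 4.4 / 4.0 = 1.1, about 10%; once the GPU becomes the limit, it shrinks toward zero.

```python
def fps_after_overclock(cpu_ms: float, gpu_ms: float, old_ghz: float, new_ghz: float) -> float:
    """Estimate fps after a CPU clock change in a toy CPU/GPU pipeline.

    Assumption: CPU time per frame scales as 1 / clock; GPU time is unchanged.
    """
    new_cpu_ms = cpu_ms * old_ghz / new_ghz
    return 1000.0 / max(new_cpu_ms, gpu_ms)


def gain(cpu_ms: float, gpu_ms: float, old_ghz: float = 4.0, new_ghz: float = 4.4) -> float:
    """Relative fps change from the overclock (0.1 means +10%)."""
    before = 1000.0 / max(cpu_ms, gpu_ms)
    after = fps_after_overclock(cpu_ms, gpu_ms, old_ghz, new_ghz)
    return after / before - 1.0


# Hypothetical per-frame costs in milliseconds.
print(f"GPU well ahead:  {gain(cpu_ms=11.0, gpu_ms=5.0):+.1%}")
print(f"GPU nearly tied: {gain(cpu_ms=11.0, gpu_ms=10.5):+.1%}")
print(f"GPU-bound:       {gain(cpu_ms=8.0, gpu_ms=12.0):+.1%}")
```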

4K+ uses vastly less CPU than 1080p and 1440p.

The more you crank up settings and/or resolution, the less is being offloaded to the CPU.

Hence users with older CPUs getting bottlenecked at 1080p.

Seanspeed you are making a fool of yourself, please stop.

"Please pay attention here and don't confuse the context of what I'm saying."

Then don't single my posts out (especially since I'm the one who mentioned the 4690K) with replies like:

"But anybody who tells you your 4670K isn't good for 1080p or *anything else* has absolutely no idea what they're talking about, or are at the very least quite confused."