I have added some more disks to my home server and have reached the point where I will no longer be using a dedicated controller to pass drives through to the Windows Server VM I use for sharing media.

This leaves me with a bit of a problem: how do I give my Win Server VM storage that acts as if it were a single large disk?

Setup.

Starting Config...

- 3x 2TB Seagate Barracudas in RAID 5 on an HP P812 SAS controller.

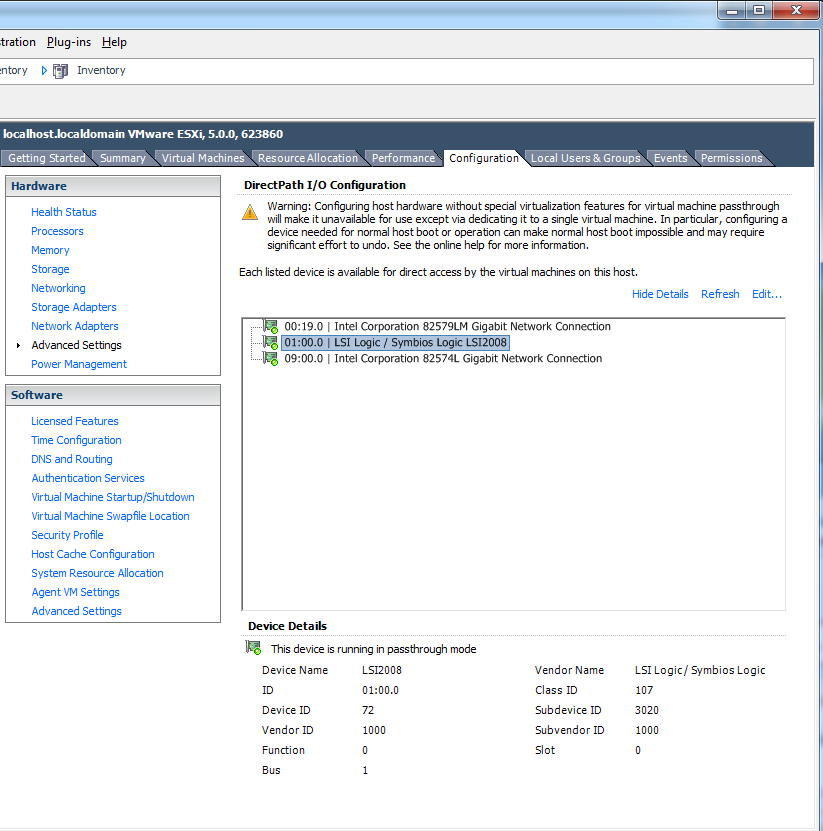

- 2x 2TB Seagate Barracudas (individual drives presented to the Win Server VM via VT-d on an M1015).

- 1x 2TB WD Green (individual drive presented to the Win Server VM via VT-d on an M1015).

Intermediate Config...

4TB of storage from the RAID 5 array presented to the Win Server VM so data can be copied over from the 2x 2TB Seagate drives.

Final Config...

8TB presented to the Win Server VM, made up of a 5x 2TB Seagate Barracuda RAID 5 array on the HP P812 controller.

Note: the P812 also has other arrays on it, so I cannot do a direct VT-d passthrough.
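The 4TB and 8TB figures above follow from the usual RAID 5 rule: one disk's worth of space goes to distributed parity, so usable capacity is (n - 1) x disk size. A minimal sketch of that arithmetic, assuming identical 2TB (vendor, decimal) disks and ignoring controller metadata and filesystem overhead. The helper names are hypothetical, and the TiB conversion is only there to show roughly what Windows will report, since it displays binary units labelled "TB".

```python
def raid5_usable_tb(disk_count: int, disk_size_tb: float) -> float:
    """Usable RAID 5 capacity in vendor (decimal) TB for identical disks.

    One disk's worth of space holds parity, so usable = (n - 1) * size.
    Controller metadata and filesystem overhead are ignored.
    """
    if disk_count < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (disk_count - 1) * disk_size_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal TB (10**12 bytes) to binary TiB (2**40 bytes)."""
    return tb * 10**12 / 2**40


# Intermediate config: 3x 2TB in RAID 5
print(raid5_usable_tb(3, 2.0), round(tb_to_tib(raid5_usable_tb(3, 2.0)), 2))
# Final config: 5x 2TB in RAID 5
print(raid5_usable_tb(5, 2.0), round(tb_to_tib(raid5_usable_tb(5, 2.0)), 2))
```

So the final 8TB array should show up in Windows as a little under 7.3 "TB".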

Thoughts.

1. Span 2TB VMDKs in Win Server to make one large drive.

- Is it possible to expand this later without losing data?

2. Create a CentOS VM to act as an NFS server.

- Would this be limited by Win Server only being able to use the E1000 network interface, and if so, is there a way around it?

3. Create an Openfiler VM, much the same as the CentOS one in no. 2.

4. Create an OpenIndiana VM for ZFS, although the disks are already on a decent RAID 5 controller with 1GB FBWC.

This is for a home setup, so it does not have to be fully belt and braces, but then again I am not averse to looking at various solutions to gain knowledge.

I am using vSphere 5.1 free, but I may move to vSphere 5.1 Foundation if it helps.

Any other suggestions most welcome.

Thanks

RB
