Associate

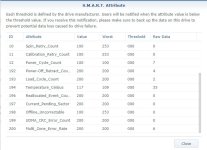

I got back from a short break this week to find my 4-bay Synology having another issue with a 4TB WD Red drive. I keep an offline spare on standby, so I replaced the failed drive, but the rebuild failed, reporting that drive 3 has bad sectors.

I also found that drive 2 has had two reconnections in the past month. That means it probably won't fail just yet, but it likely will at some point.

So that's three drives with issues, and they are ALL replacements I received from Western Digital for previously failed drives. It seems you cannot put any trust in recertified drives in a RAID.

If you own a Synology unit and a drive appears to be stuck at 90% during the monthly extended test, then it DOES have bad sectors and needs replacing.

I'm stuck now: I have to RMA two drives back to WD, and one drive is out of warranty. I think I'm going to put two of the 4TB drives back in a RAID 1 this time and run the other two as a JBOD with less critical data on them. I just cannot afford to replace all four of them at around £480.