Soldato

Personally think it's an absolutely backwards step.

Yes it may be "necessary" for high speed memory, but at what cost? May as well just solder the RAM onto the motherboard in terms of what this realistically does for allowing "upgrades".

Yes it takes up marginally less board area than 4 DIMM slots - but for what reason? If board area savings are so important, then dropping to 1 or 2 DIMM slots saves space too. Similarly, if it was all about board area, SO-DIMM modules have been a thing for almost as long as DIMMs have.

It also saves height - but other than in laptops or small form factor machines, why does that matter? An ATX based PC has lots of other components that are higher (backplate, CPU cooler, PCI-E Cards), so why does the RAM also need to be flat?

Want to add some more RAM?

Oh wait - rather than just buying another DIMM or two, now you have to throw away that perfectly good CAMM module and replace it with a bigger one.

No problem - I'll just resell the old CAMM module... except no one wants it because everyone wants a higher capacity one. Whereas in most cases older lower capacity DIMMs could be reused to make 4 DIMM sets etc in older machines to add more Memory.

I'd wager it's actually an anti-consumer practice dreamt up by the RAM cartels in order to grab a slice of the "Apple" non-upgradable business model, whilst still purporting to give you the upgrade option (however impractical that now becomes).

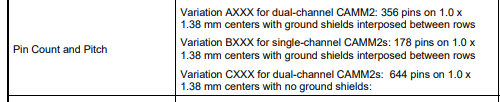

And that's before even considering that the so-called "standard" already has so many different variations (CAMM2 vs LPCAMM2) that they aren't even necessarily going to be interchangeable between say your laptop and a possible desktop PC.

Don't think any of these points are much of a concern at all on an enthusiast-grade motherboard. Perhaps it's because you're speaking from the perspective of someone who doesn't upgrade that often or isn't interested in the performance benefits.

1. In terms of losing physical DIMM slots, there is no "cost" in terms of drawbacks. Speed and latency are what incite change in this space, and if something offers improvements then adoption makes sense. Space saving and airflow are also welcome benefits. For enthusiasts, monoblocks that cover both the memory and the CPU are now a more practical proposition.

2. "Adding some more RAM" ultimately isn't an issue, either. Why? Because high-speed binned CAMM modules aren't meant to be mixed, just as conventional memory kits aren't. They're binned by the vendors at the density they're sold in. So it's another non-issue unless you want to run close to stock, in which case perhaps these products aren't for you.


some of us like changing our memory over time, upping from 8 to 16 or 16 to 32 or even buying better binned kits and so on.
