Rasterization performance

Ray tracing performance

VRAM amount

Feature set on offer, e.g. ShadowPlay, DLSS, FSR

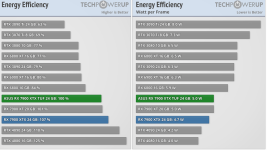

Power consumption & efficiency

I have left out price per performance, as that is obviously the main thing for everyone and goes without saying. It would be nice to have all of the above, but as we all know, every GPU involves some form of compromise, so this thread/poll is purely about what is most important to you, now and in the future, when it comes to your next purchase.

Any other options, or suggestions for better poll choices?
