If that’s a DX limit, it only applies to passing data to the GPU. The game will also have worker threads. Most games run fine on 6-core chips, but an increasing number are starting to use more. Some also ignore SMT threads, as these can reduce performance because they have ZERO compute hardware of their own. This trend will increase as Intel chips have a lot more cores, especially in the low to mid-range. If AMD release more £250-300 6-core chips, they are taking the **** and deserve to lose market share.

Helldivers 2 will happily spread across all 28 threads on my 14700K - though it is only about 18-20 CPU threads' worth of processing, and it doesn't really suffer on an 8/16 CPU or less.

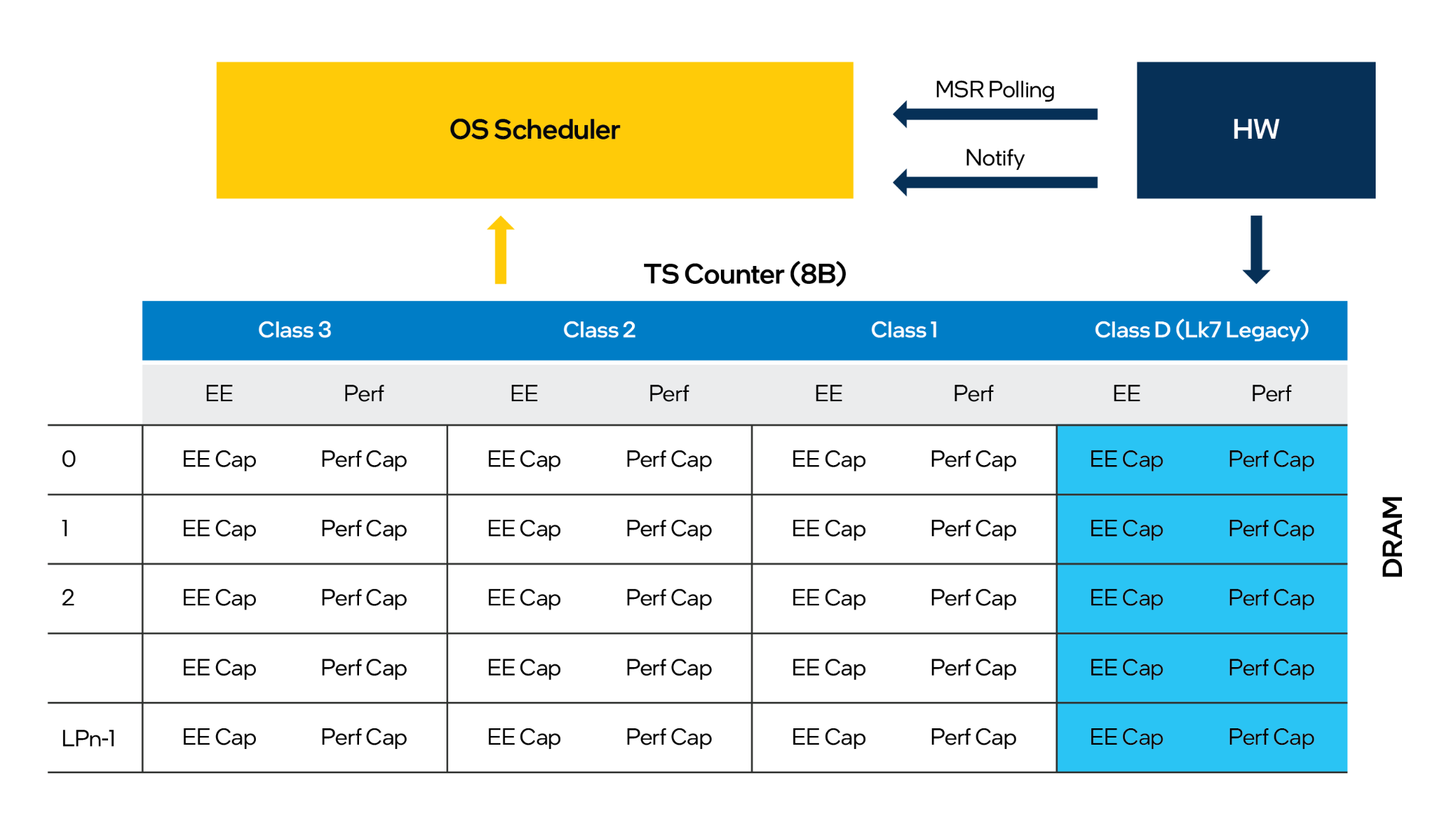

These mixed-performance-core CPUs are all kerfuied.

Either system should last long enough that the 'dead platform' shouldn't be that big of a deal. But if you do want an upgrade path, then AM5 is obviously the right choice. The price difference isn't that much overall, and efficiency can be tuned if required.