DDR4, specifically B-die, can run up to 4200+ in Gear 1. When tuned, that gives you a lot of bandwidth plus low latency.

The current DDR5 kits you can (kinda) buy are the entry-level Micron kits, which are just plain slow, especially once you take into account the latency hit from running the memory controller at half the memory speed (Gear 2). You can see that in a lot of reviews where DDR4 beats DDR5 in gaming, and understandably so. Here's one such reviewer that I know personally:

So the fix for this is waiting for high-end kits from SK Hynix and Samsung to show up. Then DDR5 gets to stretch its legs and ADL (Alder Lake) can pull away from DDR4 easily.
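
If you want to put rough numbers on the bandwidth vs. latency tradeoff, here's a quick back-of-the-envelope sketch in Python. The kit speeds and CAS values in it (DDR4-4200 CL16 in Gear 1, DDR5-4800 CL40 in Gear 2) are my own illustrative assumptions, not measurements from the screenshots, and the function names are made up for this example. It only covers theoretical peak bandwidth, CAS latency, and controller clock, not the full round-trip latency a benchmark would report.

```python
# Back-of-the-envelope memory math. All kit values below are illustrative
# assumptions, not numbers taken from the benchmarks in this post.

def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """CAS latency in nanoseconds.

    The memory clock is half the data rate (DDR = two transfers per clock),
    so one clock cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000.0 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts: float, channels: int = 2) -> float:
    """Theoretical peak bandwidth in GB/s, assuming 64 data bits per channel."""
    bytes_per_transfer = 8  # 64 bits
    return data_rate_mts * bytes_per_transfer * channels / 1000.0


def controller_clock_mhz(data_rate_mts: float, gear: int) -> float:
    """Memory controller clock: Gear 1 matches the memory clock, Gear 2 halves it."""
    memory_clock = data_rate_mts / 2.0
    return memory_clock / gear


if __name__ == "__main__":
    # (label, data rate in MT/s, CAS cycles, gear) -- hypothetical examples
    kits = [
        ("DDR4-4200 CL16, Gear 1", 4200, 16, 1),
        ("DDR5-4800 CL40, Gear 2", 4800, 40, 2),
    ]
    for label, rate, cl, gear in kits:
        print(
            f"{label}: "
            f"{peak_bandwidth_gbs(rate):.1f} GB/s peak, "
            f"{cas_latency_ns(rate, cl):.2f} ns CAS, "
            f"controller at {controller_clock_mhz(rate, gear):.0f} MHz"
        )
```

With those assumed numbers the DDR5 kit has a bit more peak bandwidth on paper, but roughly double the CAS latency and a much slower controller clock, which lines up with the gaming results described above.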

To give you an idea of what's coming, here's an example of what slow vs. mid-range DDR5 kit performance looks like:

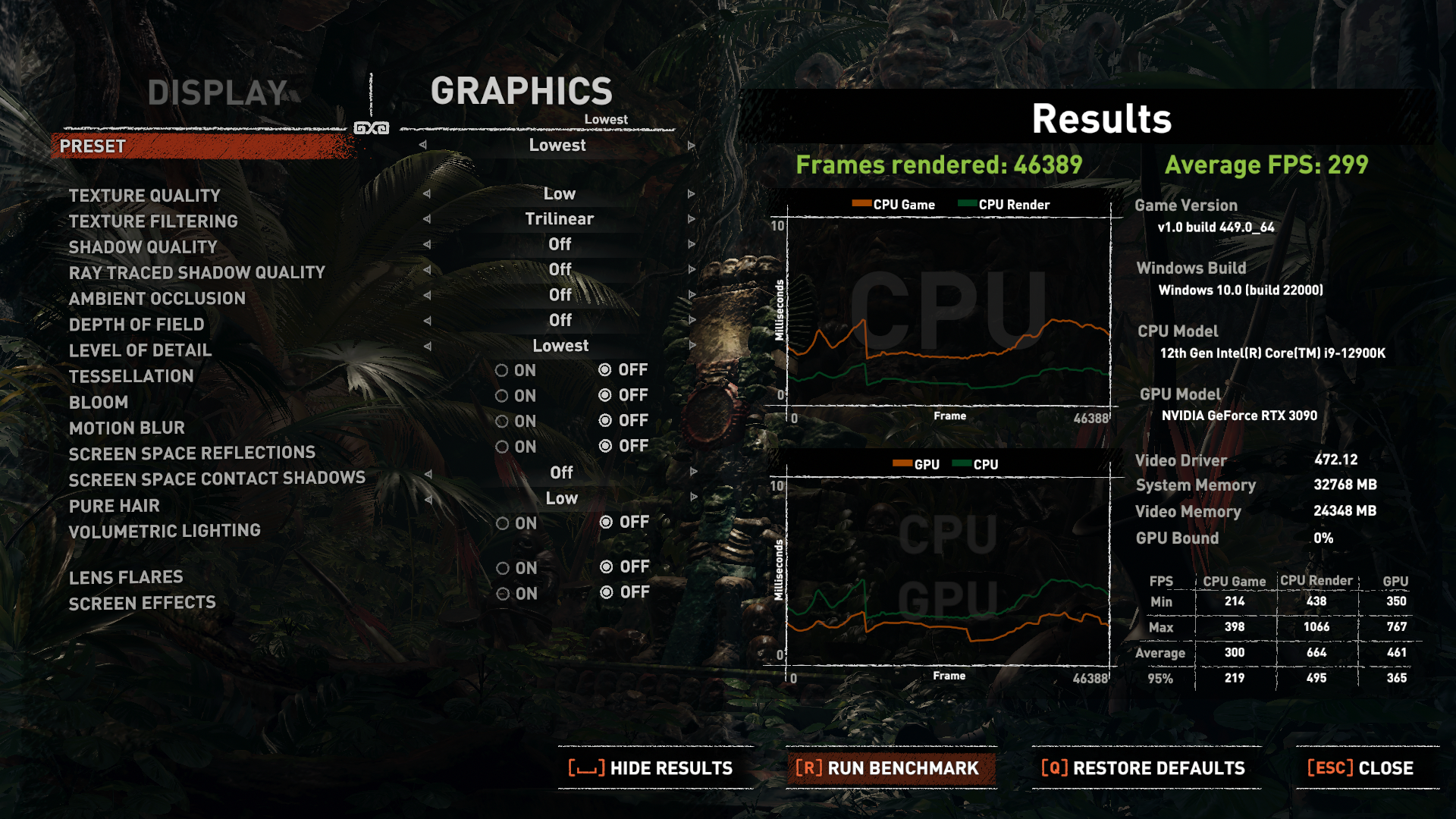

This is an entry-level Micron kit manually tuned to the max:

DDR5, CL36-37-37-48, tweaked subtimings, 5.2 GHz all-core

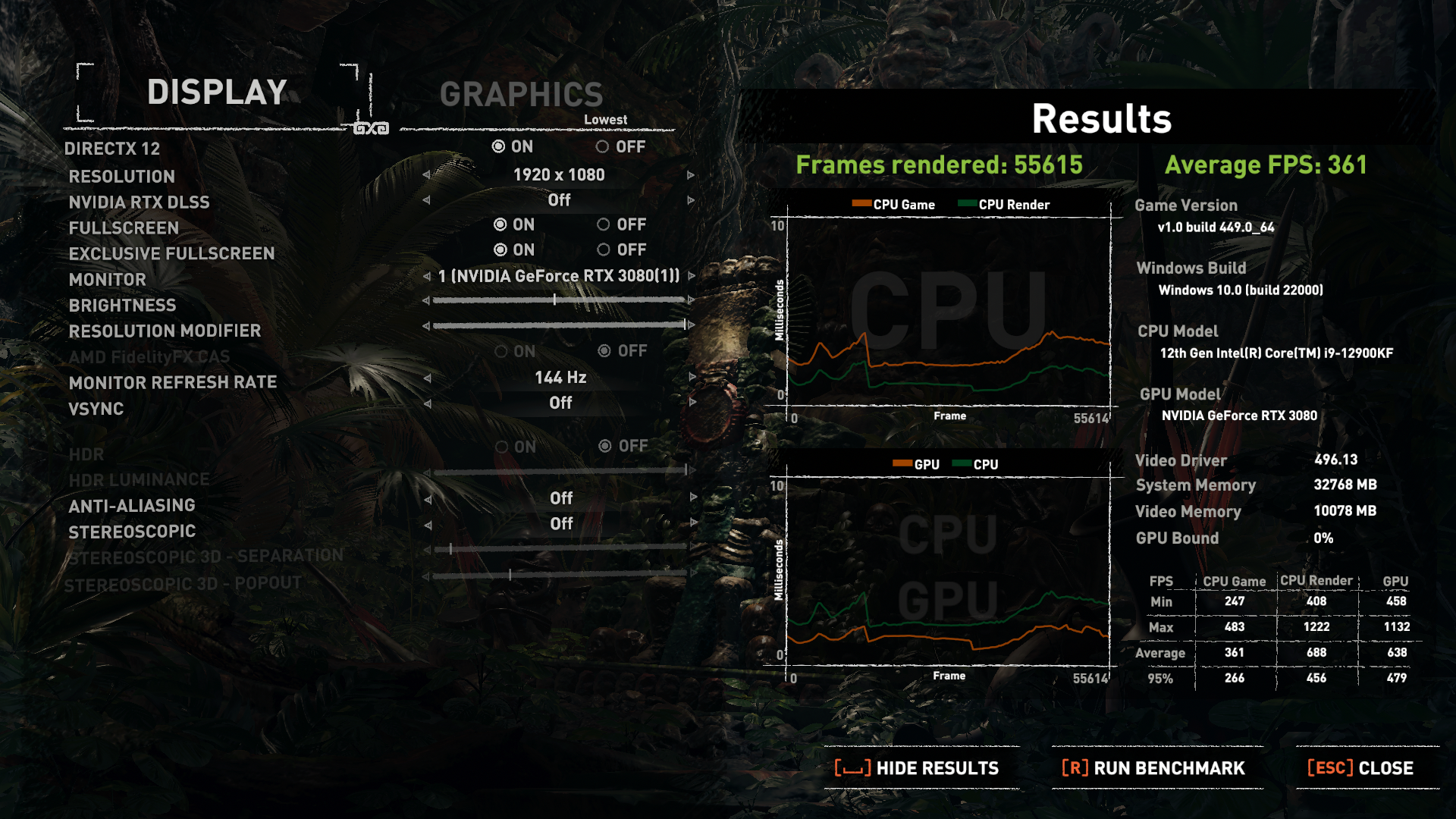

Here's an SK Hynix kit: CL36-37-37-28, Command Rate 1, 5.2 GHz all-core

And remember, this is NOT the *really* high-end stuff, but it's still quite good.

It's like he expected the Intel system to draw about the same power as the AMD system under load, realised it does in fact draw twice as much power, and thought "oh #### that didn't work, quick, find some other way of making Intel look good, so...... that idle power draw on Intel, good isn't it"
