AMD announce EPYC

- Thread starter Journey

Well, given what has just been shown, Intel better bring the goods with 10nm server CPUs or they are going to be bleeding market share by the end of 2019.

Intel cannot bring 10nm until 2020, when Zen 3 will be due out.

It has nothing to compete with AMD until they spend the next 5 years changing architecture.

By that time Zen 5 will be out. (There is no Zen 6; there will be a new architecture after that.)

So, Rome is 8 8-core chiplets, and not 4 16-core chiplets. This means no 6-core CCX, and it means the Ryzen 7 3700 can be an octo-core.

Em, and why is this bad? In today's landscape of everyday use, let's keep Ryzen at 8 cores and leave the higher core counts to Threadripper. What would normal desktops gain by going higher than 16 threads? Let the games catch up to the current core count, then push it again. This blind chasing of cores is gonna end badly very soon.

Threadripper has one major disadvantage - its very high power consumption - compare the 65W 2700 with the 180W+ 12-core.

The Ryzen 7 2700, sticking to its 65 W TDP, only boosts to about 3.4 GHz on all cores. The 2920X is more like a 2700X with 4 extra cores and a slightly lower clock speed on all cores at once. 180 W / 12 = 15 W per core, 105 W / 8 = 13.125 W per core, and 65 W / 8 = 8.125 W per core. You can have high clocks and lower power all at the same time.
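
For anyone who wants to redo the per-core arithmetic above, here is a minimal Python sketch. It assumes the 105 W figure refers to the 2700X, and it simply divides rated TDP by core count. TDP is a thermal design figure rather than measured draw, so the results are a ballpark only; the `chips` table and the `watts_per_core` helper are names made up for this illustration.

```python
# Rough per-core power budget: rated TDP divided by core count.
# Assumption: TDP is used as a stand-in for real power draw, which it is not.
chips = {
    "Threadripper 2920X": (180, 12),  # (TDP in watts, core count)
    "Ryzen 7 2700X": (105, 8),        # assumed to be the 105 W part in the post
    "Ryzen 7 2700": (65, 8),
}


def watts_per_core(tdp_watts, cores):
    """Return the TDP budget per core in watts."""
    return tdp_watts / cores


per_core = {
    name: watts_per_core(tdp, cores) for name, (tdp, cores) in chips.items()
}

for name, watts in per_core.items():
    print(f"{name}: {watts:.3f} W per core")
```

Swapping in other TDP and core-count pairs gives the same kind of rough comparison for any other chip.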

Each chiplet is in multiples of two. That means it's most likely the desktop CPUs will have 16 cores.

That's not a disadvantage, it is a byproduct of having so many cores in one place. Also, what's stopping you from downclocking and undervolting Threadripper? AMD is already giving us products that Intel never even thought of offering at such prices, and we are still not happy?

I really, really hope not. What will you do with a 32-thread gaming CPU? What is the point of so many threads for a simple desktop? There are quite a few games which freak out when they see more than 16 threads. There is only 1 game engine which fully supports all 16 threads, and that engine is still in development. The rest of them still favour higher clocks over core count.

I really hope AMD does not release a 16-core normal desktop CPU and gives us a higher-clocked 8-core instead. We have the hardware; we need software to wake up now, and then we can make the next step.

To use it for other things, not only gaming. Or gaming plus some work in the background.

I wonder if these processors can run 8K video with a normal load of less than, let's say, 10-15% on the CPU, and not 50% on all the cores.

Ray tracing at more than 1080p @ 60 Hz......

X4: Foundations supports 32 cores/threads, not 16.

Man, you guys are unbelievable. Just a year and a half ago AMD pushed 16 threads to mainstream, with 99% of software not using even a quarter of the resources available, and now you are coming up with random excuses to get even more cores. What other stuff besides gaming? Encoding a random video clip? You already have 16 threads for that. If it's your day job to convert videos, Threadripper is on the market. Random 3D modeling? You already have 16 threads for that; if you are semi-serious about it, Threadripper is on the market. What else? Streaming? Well, you already have 16 threads which, if clocked higher with Zen 2, will be able to do that at high resolutions and high bitrates without dropping any frames. If you are really serious about that, Threadripper is on the market, OR do like the Intel diehards do: get the fastest 8-12 thread Intel chip and another computer to stream.

Talking of 4K and 8K videos, we have GPUs for that. Isn't it a bit early to talk about 8K? But if you are serious about that, Threadripper is on the market, with up to 32 cores.

Come on, ray tracing is only for RTX

I didn't know about X4 Foundation, so well, 2 game engines then

Actually, given the inability of TurDing cards to do even 1080p @ 60fps, DICE offloaded a lot of ray tracing to the CPU. That is why the official BF5 requirements call for a Ryzen 2700 or i7 8700K.

So for 2560x1440 @ 144 Hz, a 32-core, 64-thread CPU might be sufficient....

Death to quad cores by the hand of Nvidia....

I do want 16 cores, because it means 6C to 8C Ryzens will be cheaper, and it also means content creators can go with a cheaper platform instead of Threadripper.

There is nothing stopping AMD from releasing a higher-clockspeed 8C version using salvaged dies too, i.e. 4C per chiplet.

If anything it would be better. Running 8C on one chiplet at high clockspeed is going to be hard to cool, especially since the chiplet is estimated to be between 70 mm² and 80 mm² in size.

Also, AMD needs to compete with Intel in laptops. Intel is already at 8C, so at the very least they need 8C APUs.

So the only way I can see them staying at 8C is if there are no consumer Ryzen CPUs, i.e. AMD only makes its consumer Ryzen 2 CPUs as APUs. That would mean most of the SKU will be a controller chip and a GPU.

Then people will moan: why is there a big GPU they won't use! So AMD cannot win either way then!!

Plus, what happens when Intel moves to 10nm (or whatever it will be called at that time), and then adds another 2C to 4C?? Suddenly AMD is at a core disadvantage.

AMD needs to beat Intel whilst they can do it, so 16C is what they should be doing now, since they can pick and choose to make high-clockspeed "low" core count SKUs and high core count, low-clockspeed variants.

Gamers need to understand there is no harm in buying a cheaper SKU for gaming. Let the high-end SKU be for people who really need it.

People also forget that back in the day there was one platform for consumers, not consumer and HEDT. It was one and the same. I would like a move back to that!

People have forgotten that for years most gamers didn't buy the top SKU but overclocked a cheaper one.

I would be quite happy for 6C/8C to move to cheaper territory. It will mean more mass-market adoption of such CPUs, and more games will start to use the CPU resources.

Remember, back in the day people asked why you would need a Q6600 when they were cheap??

When the 8-core chiplet is only 70-80 mm², it is only natural to expect 8-core processors to become more than mainstream; they should already be relegated to the low end.

Remember that the consoles have 8 cores, and most smartphones, even the cheapest, have been using 8 cores for years. Only PC users still "enjoy" dual, quad and hexa cores.

Yes, @CAT-THE-FIFTH, you are right that AMD must use the opportunity to push Intel while it is still present. Nowadays, the only way to push Intel is to give more cores; with higher frequency or better IPC, the effects will be much less pronounced.

And Threadripper is expensive: the CPU and motherboard alone cost €1000, plus double the RAM cost.

As for 8K, NHK Japan begins broadcasting 8K channels from December 1st. This is 3 weeks from now. https://www.newsshooter.com/2018/08/16/nhk-will-broadcast-8k-from-december-1st/

Software and studios follow hardware by the lowest common denominator. They will utilise 8 cores effectively when most gamers have 8 cores, not when most gamers have 4 cores. While most gamers have 4 cores they'll design for 4 cores, and since dual cores have been around heavily, up to and including the current generations from Intel, dual core is what they target.

If you release 8/12/16-core options in mainstream and get Intel to match, that is when devs will start targeting an 8-core baseline. Software follows hardware; hardware can't simply wait and increase once games catch up because... that's not how it works. Push the CPU power available, kill dual and quad cores, and games move forward.

We need the baseline performance to keep increasing if we want game devs to start spending more CPU power on physics, on AI and on other things. Almost every game made to date basically needs to run on a dual-core i3, meaning the base game physics, AI and engine all have to fit inside that; then you add some CPU-heavy effects that can bleed onto other cores, but it's not much. When a dev sees that the lowest anyone has is an 8-core, they can say: hell, I can put 3 cores purely on AI and 2 more purely on tracking physical objects, so let's have more items that are interactable, destructible, etc., and still have cores left over for other crucial things.

Driving cores and/or clock speed is crucial for the progression of gaming.

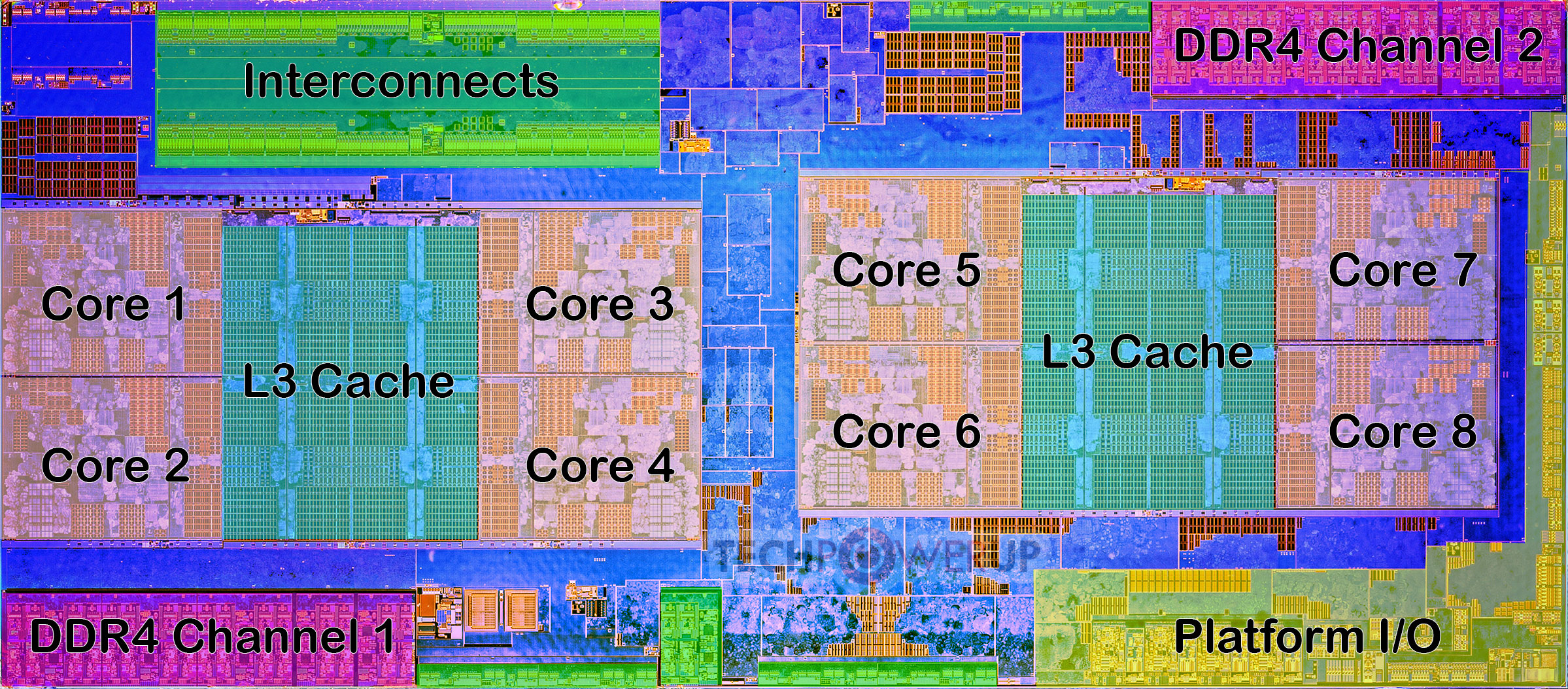

I've taken the liberty to do a comparison shot of the dies, and put an overlay of the Rome die (2x8 cores) over the Naples Dies (1x 8core + I/O)

Pretty impressive.

The I/O die might only be so large due to the number of IF links and purported L4 cache.

Most of the Ryzen CPU is comprised of the cores. I/O and other parts look to be a bit less than half the chip, so unless a larger L4 cache is central to the design, a similar I/O controller chip for a consumer Ryzen CPU might not be that large, if they choose to go that way OFC.

I think you guys are forgetting the cost of 7nm wafers. You think that if the chip is smaller, it costs AMD less to buy from TSMC. The cost of a 7nm chip is high, especially when AMD goes "me first" with both GPU and CPU this time, so they are not sparing any expense.

So in the end, if they do 16-core mainstream, their ASP will not improve. And again, you guys are so easy to spoil: we have had mainstream 8 cores for only 1.5 years, and now you are dreaming of cheap 16 cores. You can only go so far in mainstream with a core count war.
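
To put rough numbers on the "smaller die does not automatically mean cheaper" point, here is a hedged Python sketch. It uses a common first-order dies-per-wafer approximation on a 300 mm wafer and ignores yield, scribe lines and packaging. The wafer prices are purely hypothetical relative units (a new-node wafer assumed to cost twice an old-node one), not real foundry figures, and the 150 mm² comparison die is likewise an assumption chosen only because it is twice the 75 mm² chiplet estimate from this thread.

```python
import math


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """First-order estimate of gross dies per wafer (no yield, no scribe lines)."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    # Subtract an approximation of the partial dies lost around the wafer edge.
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)


def cost_per_die(wafer_cost, die_area_mm2):
    """Wafer cost spread evenly over the estimated gross dies."""
    return wafer_cost / dies_per_wafer(die_area_mm2)


# HYPOTHETICAL relative wafer prices, not real TSMC or GlobalFoundries numbers.
OLD_NODE_WAFER_COST = 1.0
NEW_NODE_WAFER_COST = 2.0

small_new = cost_per_die(NEW_NODE_WAFER_COST, 75)   # ~75 mm² chiplet, new node
large_old = cost_per_die(OLD_NODE_WAFER_COST, 150)  # assumed 150 mm² die, old node

print(f"dies per wafer at 75 mm²:  {dies_per_wafer(75)}")
print(f"dies per wafer at 150 mm²: {dies_per_wafer(150)}")
print(f"relative cost, small new-node die vs large old-node die: {small_new / large_old:.2f}")
```

Under these made-up prices, halving the die area roughly cancels out a doubling of the wafer price, so which side of the argument wins depends entirely on the real wafer cost and yield, which this thread does not give.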

Intel 10nm? Are we still on that joke? By the time they bring 10nm+ to the market, AMD will have mature 7nm+ chips or even something further.

Software developers, by your logic @drunkenmaster, will cater to dual or 4 cores, since there are plenty of them in craptops. Moving to 16 cores will not change their mindset.

And it's amazing that all of you forget a chip like Threadripper: you know, 16 cores last year, 32 cores this year. I think AMD is safe in the core count war. I for one don't want any additional software and a reboot just to play a game which refuses to start due to too many cores in the system, and we have quite a few of those.

All in all, guys, give AMD a frikkin break to earn some money to pump back into R&D and to balance their sheets. It was only a couple of years ago that AMD was considered dead in the water, and now you are pushing them to maintain chump-change margins while pleasing 2 enthusiasts who cannot be bothered to go proper HEDT?

Remember, even though Intel is broken at the moment, nVidia is in full swing. AMD needs every penny of R&D to get themselves on track and keep themselves afloat once Jim Keller and Raja get Intel on track.

Indeed, AMD struck gold with their modular approach