Apologies to everyone else, this is going to be a long post.

> These are all made up things by you, BTW. Fog has nothing to do with framerate. Every single nvidia card produces fog.

So you accuse me of making things up. Well, let's see who is right and who is wrong, shall we? I am going to post the things you say I made up in BOLD and directly quote where you said them. Let's start.

**You thought TVs filled in missing details in the picture, then posted settings that were to do with SD TV.**

> Your TV has its own processor that adjusts the picture quality - changes colours, fixes pixels lack, etc.

You thought TVs filled in missing details and then you posted the following.

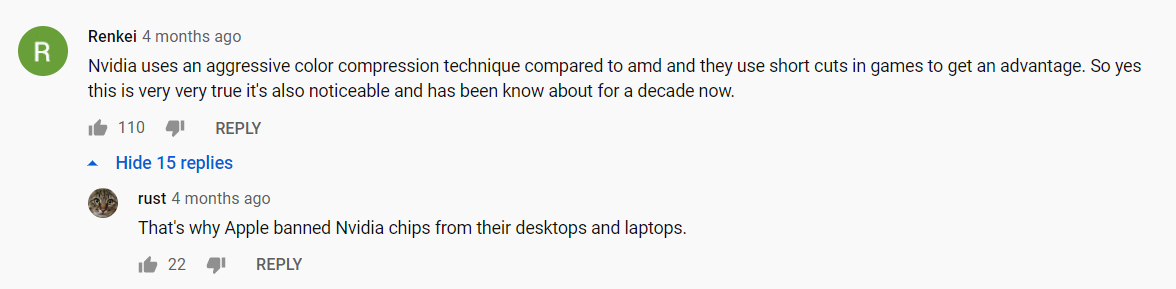

> I read all kinds of comments. Look, people know it best:
>
> > My Panasonic 4KTV has a Studio Master Colours processed by a Quad Core Pro.
> >
> > And in the menu, it have selected the following:
> >
> > * Noise Reduction is set to Max
> > * MPEG Remaster is set to Max
> > * Resolution Remaster is set to Max
> > * Intelligent Frame Creation is set to Max
>
> Now, explain what these functions are for?!

You didn't have a clue what any of those settings were for.

Next proof.

**You claimed Nvidia used a poor texture compression format called ASTC and that this proved Nvidia had bad image quality.**

> nvidia uses very bad texture compression which results in poor image quality. The texture compression is called ASTC and you can see what it does here:
>
> Using ASTC Texture Compression for Game Assets
>
> https://developer.nvidia.com/astc-texture-compression-for-game-assets
>
> > "Since the dawn of the GPU, developers have been trying to cram bigger and better textures into memory. Sometimes that is accomplished with more RAM but more often it is achieved with native support for compressed texture formats. The objective of texture compression is to reduce data size, while minimizing impact on visual quality."

More laughs for me: ASTC texture compression is only for mobile processors. Next.

**You say you are sensitive to GeForce images, but when Vince put up two screenshots from his Nvidia and AMD laptops, you couldn't tell the difference.**

> I get you. The point here is that some people may be sensitive to their monitors' outputs, for example I can not stand GeForce images, maybe only photos viewing is somewhat normal but reading news articles with their specific fonts, or playing games with that whitish fog and missing details, thanks but no

In post no. 889 Vince put up two screenshots, and you didn't even try to guess which was which, even though you claim you're "sensitive to GeForce images". Which leads to my next proof.

**You were using settings your 8400 couldn't handle in Counter-Strike, which is the reason there was fog while you were playing. But you blame Nvidia for bad image quality.**

In the last statement I quoted from you, you complained about whitish fog. When Stooeh asked you what whitish fog, you replied with two screenshots from Counter-Strike:

> In the centre of the first image and everywhere on the second.

Check post no. 924 for the actual screenshots. The whitish fog on your 8400 laptop isn't there because Nvidia has bad image quality; it's there because your laptop can't handle that game in DX9.0.

And lastly.

**You didn't have your external monitor set up with the correct resolution. But you blame Nvidia for bad image quality.**

A couple of days ago you claimed that Nvidia's image quality was terrible when you connected your laptop to an external monitor. I said it was more than likely user error and that you had used the wrong resolution or made some other mistake. Your reply was:

> Probably, but it doesn't excuse the low quality because the Radeon doesn't behave like that when lower resolution is chosen.

I didn't make anything up; it's just another day where you are completely wrong.