There is one thing I want to say about AMD.

Tock Tick Tock Tock.

Some of you will get that.

It's very bad:

https://en.wikipedia.org/wiki/Tick–tock_model



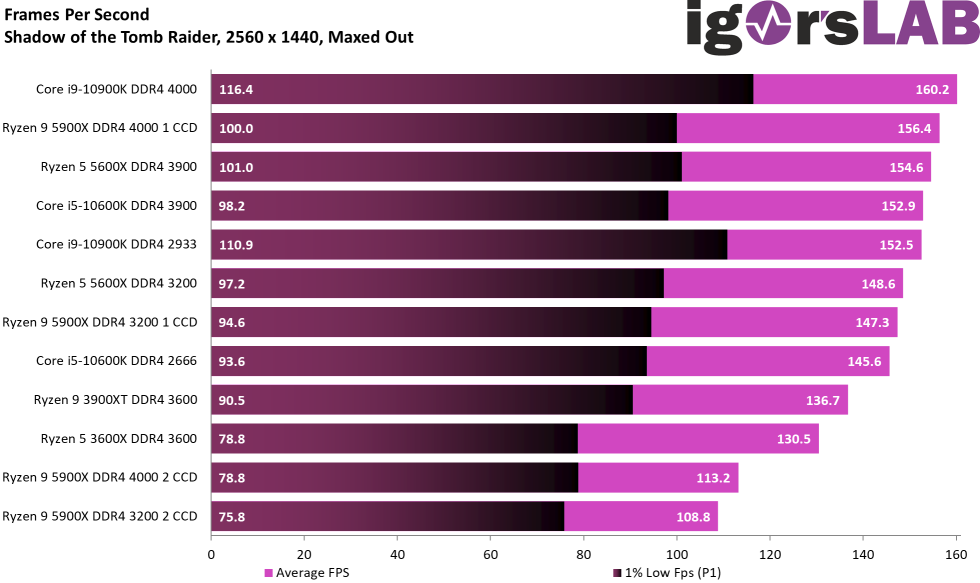

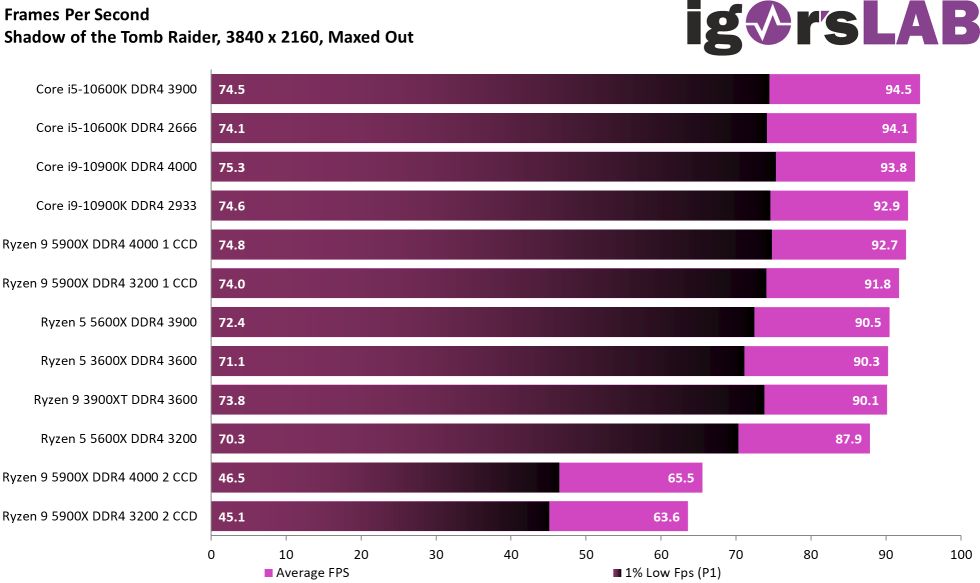

> What is going on there? Every other review I've seen shows the 5900X matching the 10900K in all games, yet he's showing a few games where the 5900X gets absolutely trounced by everything (even a 3600X) unless you completely cripple it by disabling one CCD?
>
> A bit worrying...

It's not 'worrying', as his results make no logical sense. A 5900X gaming 30% slower than a 3900X despite being 20% more powerful with lower latency? It's clearly a problem on his side or with the game.

I was all set to swap over to one of the new chips, but I was pretty sad to see that in the 4K benchmarks there was basically no difference over the 3900X/3950X. I presume that's because at that res everything is totally GPU limited? I'll wait and see benchies of it running against a 3900X/3950X when the new AMD cards drop before deciding whether it's a worthwhile upgrade or not.


Every clock starts with a tick!

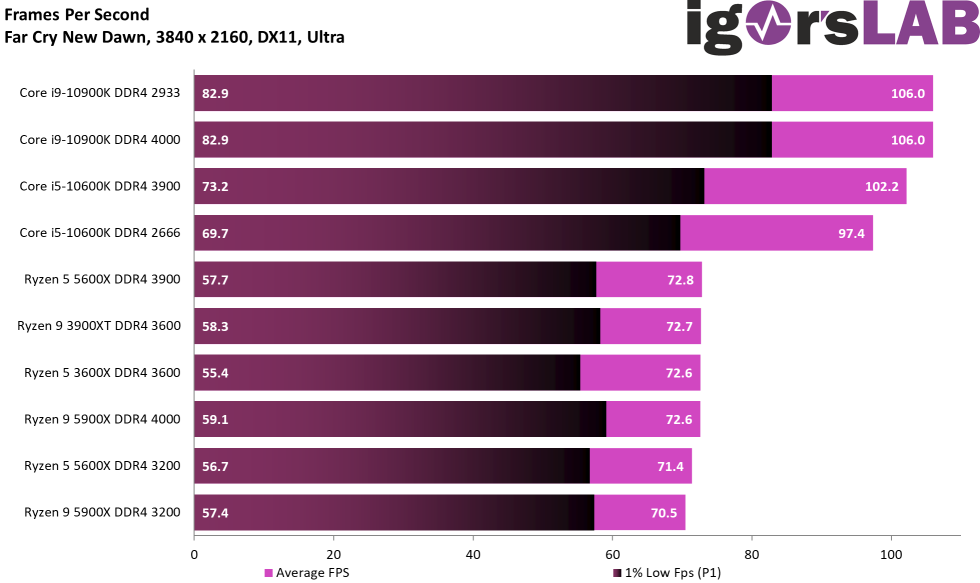

> It's only Far Cry New Dawn, not a game to worry about.

It's not just Far Cry; the Shadow of the Tomb Raider results are equally bad. Two of the five games tested show unexplained results.

> It's not 'worrying', as his results make no logical sense. A 5900X gaming 30% slower than a 3900X despite being 20% more powerful with lower latency? It's clearly a problem on his side or with the game.

Disagree. It is worrying. When he disabled one CCD, his FPS (in SotTR) matched the 10900K, but with both enabled it was crippled. If he doesn't have a faulty chip, the new CCD/CCX topology used for the 5900X could be introducing problems in certain situations. He's an experienced benchmarker and can't explain the results, so it'll be interesting to see if others replicate it. The translated article is a bit hard to read, but he seems to suggest it's happening under certain (non-game) workstation loads too.


https://www.igorslab.de/en/amd-ryze...-wird-intels-10-generation-jetzt-obsolet-2/6/

> If all the other reviewers are having different results (that agree with each other) then there's something wrong with Igor's Lab results. Maybe he's not using a new BIOS he's supposed to be using.

He said he's tried multiple motherboards with every BIOS provided to him.

> ...whereas Igor is using a 3090.

So is Hardware Unboxed, and they don't show any of the issues Igor does.

> So is Hardware Unboxed, and they don't show any of the issues Igor does.

But Hardware Unboxed is using a 5950X, which has a different CCX/CCD layout to the 5900X, unless I missed another review they've done? Are there any other reviews of the 5900X with a 3090?


> But Hardware Unboxed is using a 5950X which has a different CCX/CCD layout to the 5900X

Eh? They're both dual-chiplet designs; the only difference is that the 5900X has 2 cores disabled in each chiplet to make 12 cores. It's the same CCD layout and the same CCX layout, just with fewer cores in each CCX.

> Something's wrong there. Linus, Hardware Unboxed, Gamers Nexus, Hardware Canucks, Optimum Tech: I've looked at all these reviews today and they show the 5900X beating Intel in those games, especially Tomb Raider.

None of those tested the same situation where Igor hit the problems, unless I'm mistaken: 5900X, 3090, 1440p/4K ultra, Shadow of the Tomb Raider or Far Cry New Dawn (though no doubt there will be others). Hardware Unboxed tested the 5950X, Gamers Nexus only tested 1080p, and Hardware Canucks/Optimum Tech didn't test those games.

> Disagree. It is worrying. When he disabled one CCD, his FPS (in SotTR) matched the 10900K, but with both enabled it was crippled. If he doesn't have a faulty chip, the new CCD/CCX topology used for the 5900X could be introducing problems in certain situations. He's an experienced benchmarker and can't explain the results, so it'll be interesting to see if others replicate it. The translated article is a bit hard to read, but he seems to suggest it's happening under certain (non-game) workstation loads too.

It affected ONLY the 5900X with both CCDs enabled, with the same problem on multiple motherboards, and no other review that I have seen has had this issue at 4K in games. Seems like a defect or some other aberration.
