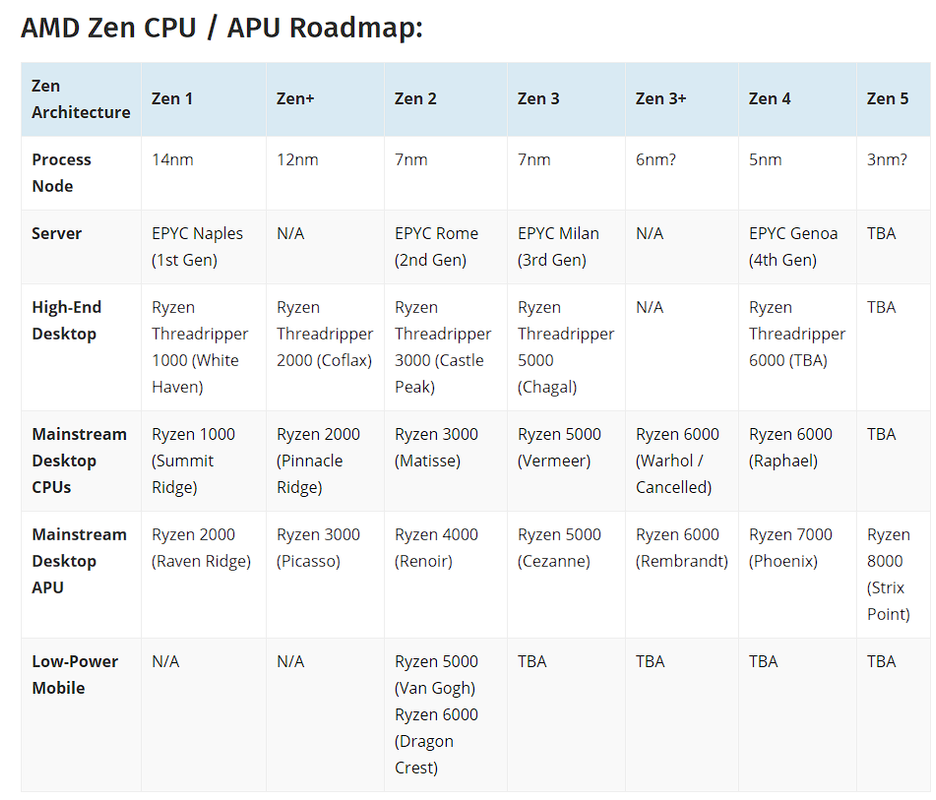

Not sure it makes much (business) sense to have 10-, 12- or 16-core chiplets. They already cover 6 to 64 cores with an 8-core chiplet, and rumours are they'll push that to a 96-core maximum just by adding more chiplets. Increasing the size of the chiplet makes it harder to sell the lower-end chips: if you've got a 12-core chiplet, you're not going to want to fuse off half of it to sell a 6-core chip... And whilst as a consumer it'd be great if the low end bumped up to 8 or 10 cores, there's no 'need' for it, and it just increases costs and decreases the number of CPUs they can make...

Hell, you're even comparing them to Intel and how competitive they are against Alder Lake, yet Intel aren't due to have more than 8 'P-Cores' for several generations.

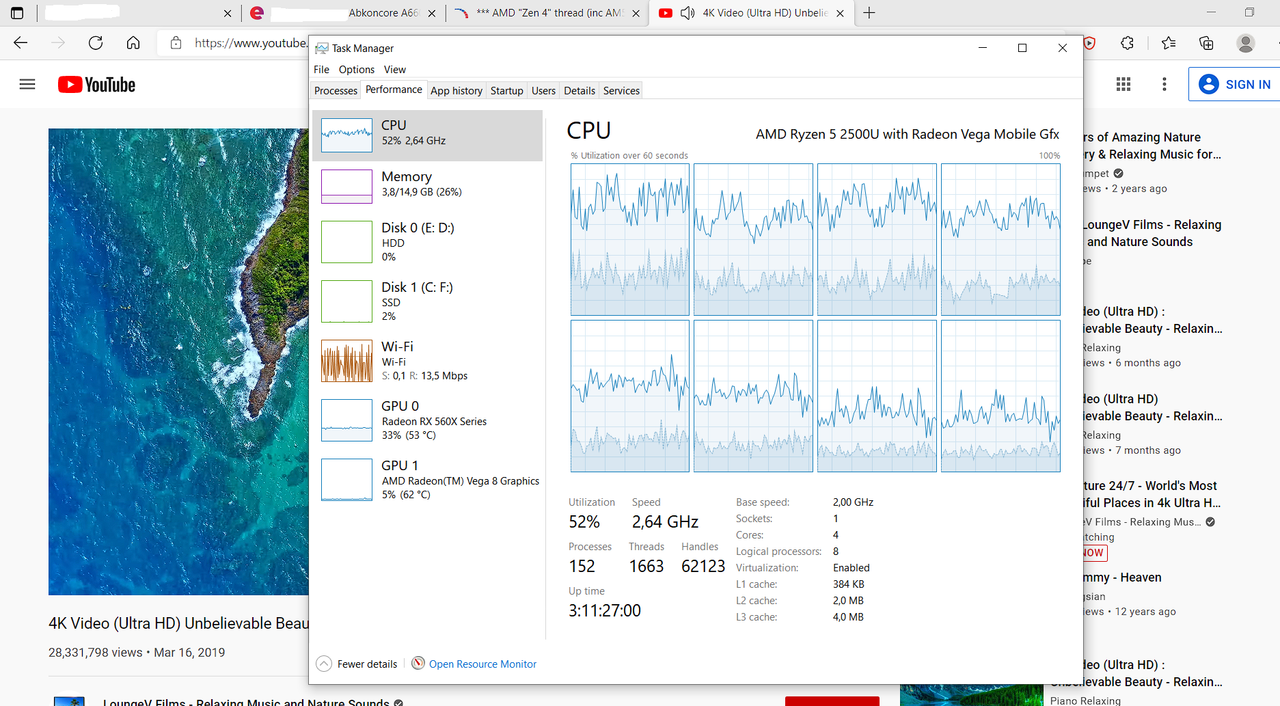

As for die shots, that's not what this shows:

Going by the high-res Cezanne die shots (https://wccftech.com/amd-ryzen-5000g-cezanne-apu-first-high-res-die-shots-10-7-billion-transistors/), that's not 40% of the die area...