Beyond Earth

http://www.gamespot.com/articles/beyond-earth-takes-civilization-to-the-stars/1100-6418906/

http://www.gametrailers.com/news-post/73123/sid-meiers-civilization-goes-sci-fi-with-beyond-earth

http://www.techspot.com/news/56376-...-is-sid-meiers-civilization-beyond-earth.html

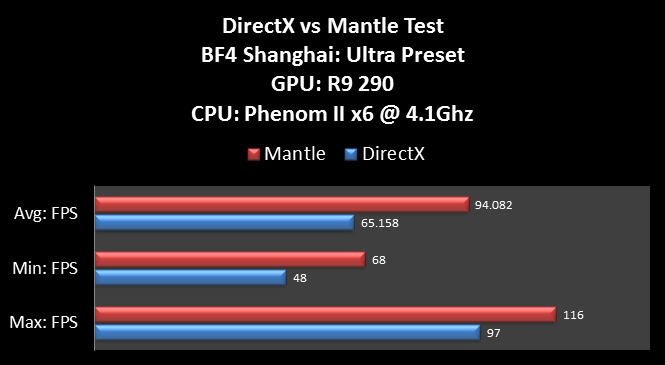

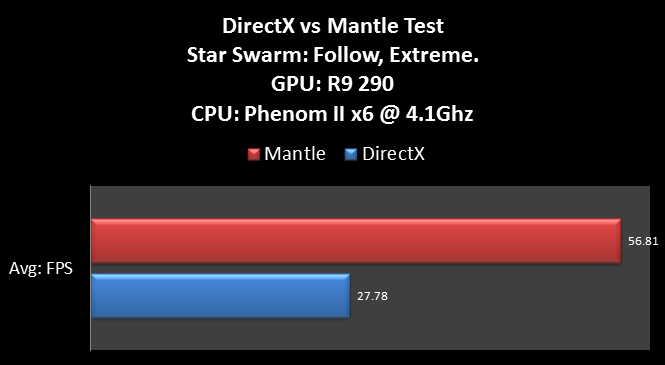

In addition to its use of DirectX 11, Sid Meier’s Civilization: Beyond Earth will be among the first wave of products optimized for the latest AMD graphics technologies, including: the new Mantle graphics API, for enhanced graphics performance; AMD CrossFire™, for UltraHD resolutions and extreme image quality; and AMD Eyefinity, which allows for a panoramic gameplay experience on up to six HD displays from a single graphics card.

http://www.techspot.com/news/56376-...-is-sid-meiers-civilization-beyond-earth.html

AMD has scored another win for its Mantle API, with the upcoming Sid Meier's Civilization: Beyond Earth set to support it alongside DirectX 11. Previous Civilization titles, such as Civilization V, were fairly CPU-intensive, so Mantle's reduced CPU overhead and lower-level features should help gamers run the game on entry-level hardware, including AMD's own APUs.

Shrewd, no? You should read Dan’s comments in this