This is a known issue on AM5 motherboards, and only with the RTX 4090. I knew about the problem before I bought the gear. From what I read, BIOS updates were released to fix it for the RTX 4090.

No problems with any other video card.

My issue was with an ASRock motherboard. I was unable to get the system to POST with the RTX 4090, and neither HDMI nor DisplayPort worked. The system was not stable: on the rare occasion I did get it to POST with the RTX 4090, a restart would send it back to no POST / black screen / hang. DX12 did not work, and HDR did not work. I tried 3 different HDR monitors and 1 non-HDR monitor.

After reformatting, removing the RTX 4090, putting in an RTX 3090 to test whether it worked, and around 8 hours of troubleshooting, I made some headway.

I was able to get HDMI working with the RTX 4090 by doing the following.

Plug the HDMI cable into the iGPU output on the motherboard, then reformat again using HDMI only, on the LG OLED.

Then install the Nvidia drivers.

Then install the RTX 4090. It would POST and run over HDMI, but DisplayPort still did not work on the second monitor in the system.

To get DisplayPort working again, I did another install through the iGPU over HDMI, but this time on a different monitor attached to the system.

Then I installed the Nvidia drivers, then installed the RTX 4090, and DisplayPort worked.

So it took about 10-11 hours of troubleshooting in total to get a working system.

Once it was working, some games such as Cyberpunk ran awesome on the RTX 4090, minus driver bugs.

Other games, such as Spider-Man Remastered, were not so good: the RTX 3080/RTX 3090 outperformed the RTX 4090.

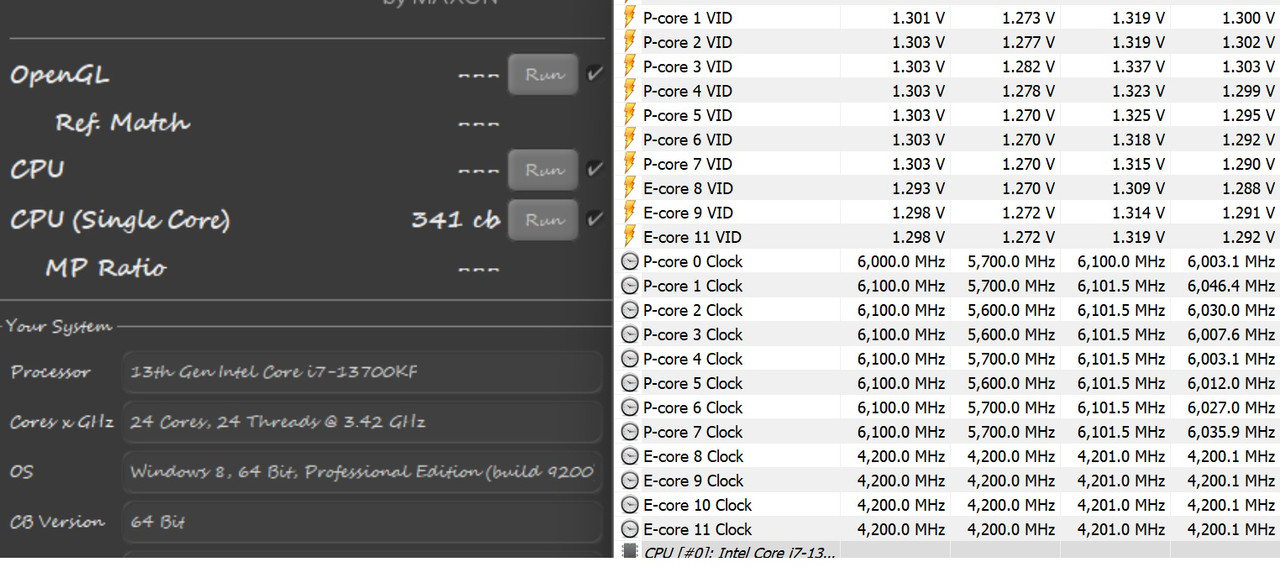

So I said screw it and bought another Intel system. In two weeks I went from a 12900K to an awesome 7700X to a 12600K, and next week most likely a 13900K, lol.

Shadow of the Tomb Raider had no DX12 HDR; that was the first problem, after many hours of troubleshooting. Games like Spider-Man got half the FPS with the RTX 4090.

On the AMD 7700X, the RTX 4090 was fine in Cyberpunk when it was working.

On Intel, Cyberpunk with the RTX 4090 gets a couple FPS less than on the AMD 7700X, but all good.