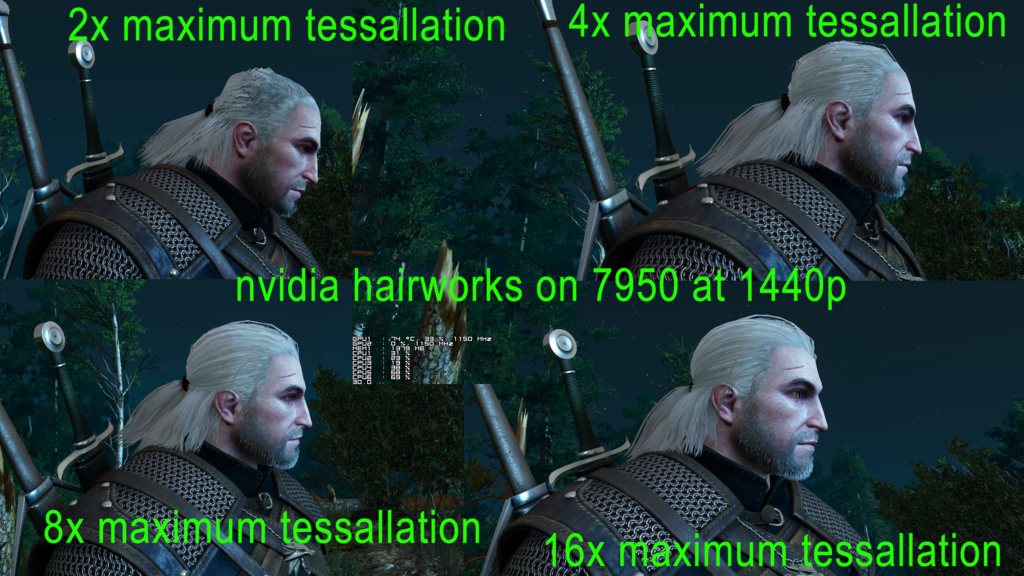

To judge properly you would need the same shot in them all, not different angles.


CPR W3 on Hairworks(Nvidia Game Works)-'the code of this feature cannot be optimized for AMD'

- Thread starter tommybhoy


Remember you aren't really, not really, meant to say things like that without any evidence to back it up any more, Matt (because it's a baseless lie).

That could have quite easily been avoided (along with other tweaks) if there was a driver released in time for game launch. Quite a big game launch at that.

Have you seen this? If AMD are wrong then surely Nvidia could sue them for defamation?

Matt isn't responsible for driver releases, it isn't fair to shoot the messenger.

Soldato

- Joined: 5 Sep 2011
- Posts: 12,884
- Location: Surrey

He is not defaming at that time - because he is merely implying they cannot, or could not optimise from source code. So it's a safe angle to take without getting into too much legal trouble.

What he is saying is true on certain levels but he also said that they will ask for as much source code as possible - and this is true, but what he is not telling you is that they more often than not will not get it.

The key difference now is that licensed developers, or companies in general are able to pay for their licence and get access to source code.

If AMD were denied access to source code by being refused a licensing agreement, they would be the very first people to state that outright, because that would actually give them grounds for legal action.

So NVIDIA have taken certain steps.

Also nobody is forcing companies to use these libraries. As I've mentioned in the Witcher 3 thread, there is nothing stopping AMD compiling libraries and in-game shader technologies, nothing at all. There is also no reason why two vendor-written technologies cannot coexist in the same game. Which is beside the point anyway, because deliberate crippling is, again, a baseless accusation that has not been proven once.


Exactly the reason that slider was introduced, to stop things from being needlessly over tessellated in order to cripple performance. This includes things on and off screen.

Then why not tell people, instead of a 'user' finding it and letting people know?

Whether or not Nvidia are deliberately over-tessellating, it's not going to affect AMD going forward, given that GCN 1.2 (Tonga) has the same tessellation performance as Maxwell.

Meanwhile if, like me, the user has a pre-GCN 1.2 card they can just reduce the tessellation to 8x in CCC.

In fact it's probably a good idea to do that anyway, even if you are on a GCN 1.2 or later card, as 8x is plenty. Certainly you would have to look very hard to see a difference between 8x and 16x.


Soldato

- Joined: 5 Sep 2011
- Posts: 12,884
- Location: Surrey

Another thing that gets me from that video last year is that it's all very well introducing a programme for 'OpenFX', which is by and large a good step in the right direction as far as we are concerned, but it doesn't really solve anything. That's because half the reason the industry allows GameWorks to exist is that developers do not have the resources or time to produce these technologies themselves. NVIDIA are creating these libraries themselves, licensing them under their programme, and protecting their intellectual property in the process.

Simply handing this over to unfunded organisations ends in slow (or no) uptake, and we then get left with dwindling support once more.


I love how they have to explain themselves though.

Nvidia : I've got a 4 bedroom house

AMD : Mine is 3 bed

AMD : Give me the keys to your house, I want to use it

Nvidia : Erm, no.

AMD : Thats not fair, you have 4 bedrooms and we only have 3, we want to use them.

Nvidia : Goodbye


To judge properly you would need the same shot in them all, not different angles.

Wouldn't matter, it's already been proved in the past that you get to a point where there's no visual difference regardless of how much tessellation is applied.


Where is that point? If AMD apply 8x, for example, and Nvidia apply 8x, are they both applying the same amount of tessellation?

Not wanting to start an argument, but your example of NVidia rendering stuff at a lower resolution, wouldn't be the same thing at all. That would involve NVidia not actually rendering the same scene as AMD. It would be like saying, you have a scene with two characters fighting, but one card only renders one of them to make it faster.

errrm, that's exactly what AMD are doing with their tessellation override, the fact that users can't notice it with their eyes is irrelevant. I can't tell the difference between high and ultra textures in games but do I want a driver setting which ignores my requests for ultra textures and forces the use of high instead? hell no.

If a game calls for level 32 tessellation and people are using AMD drivers to force 8 or 16, they are not rendering the same scene as NVidia and seeing the game as the developer intended, simple as. AMD can make as many excuses as they want but the fact is if their tessellation performance was good enough they wouldn't have gone anywhere near such a thing.
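For anyone unsure what the override being argued about here actually does, a rough sketch in Python follows. This is only a conceptual model, not AMD's driver code: the function name and the `driver_cap` parameter are made up for illustration, and it assumes the driver setting simply acts as a ceiling on whatever factor the game requests.

```python
def effective_tess_factor(requested, driver_cap=None):
    """Return the tessellation factor actually used for a draw.

    requested  -- factor the game asks for (e.g. 32 or 64)
    driver_cap -- hypothetical user-set maximum (e.g. 8 or 16);
                  None means the application's request is honoured as-is
    """
    if driver_cap is None:
        return requested
    # The cap only ever lowers the factor; it never raises it.
    return min(requested, driver_cap)


if __name__ == "__main__":
    for cap in (None, 16, 8):
        print(f"game requests 32x, cap={cap}: "
              f"rendered at {effective_tess_factor(32, cap)}x")
```

Under that assumption, a game asking for 32x with the cap set to 8x gets rendered at 8x, which is the "not the same scene" complaint, while a game asking for 4x is left untouched.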


Soldato

- Joined: 5 Sep 2011
- Posts: 12,884
- Location: Surrey

Where is that point? If AMD apply 8x, for example, and Nvidia apply 8x, are they both applying the same amount of tessellation?

The argument is that AMD's tessellation pipeline is still worse than NVIDIA's, so the performance hit is less severe on NVIDIA hardware. Unfortunately the boundary between "do we need to use that much tessellation?" and "tessellate ALL the buildings!" is blurred by this debate about what's fair for AMD and its consumers, and what is needed to improve graphical fidelity.

Take the 'downgrade' accusations thrown at CD Projekt Red.

On one hand you've got people saying, "but why did you remove tessellation???"

Well, hang on a minute. Wasn't the argument a few months ago that GameWorks effects enable NVIDIA to purposefully use line tessellation to cripple AMD hardware, as AMD's tessellation performance is still inferior? Now all of a sudden people are moaning there is no tessellation on the buildings or the trees?

You see how farcical it all is.

AMD can make as many excuses as they want but the fact is if their tessellation performance was good enough they wouldn't have gone anywhere near such a thing.

And if nvidia didn't go over the top hurting their own performance just to hurt amd performance even more with tessellation amd wouldn't need that implementation in their driver either.

Caporegime

- Joined: 8 Jul 2003
- Posts: 30,080
- Location: In a house

The consoles were downgraded as well.

http://wccftech.com/the-witcher-3-retail-patch-comparison-downgrades/



Caporegime

- Joined: 9 Nov 2009
- Posts: 25,802
- Location: Planet Earth

Can of worms opening time:

http://arstechnica.co.uk/gaming/201...s-completely-sabotaged-witcher-3-performance/

Supposedly, earlier builds of the game worked fine until two months before release, when the HairWorks libraries were included, which led to the controversy now. Apparently, AMD did offer TressFX but by then it was supposedly too late.


Wasn't it a similar story with one of the Batman games? Performance and AA suddenly got broken prior to release when beforehand it was fine.


AA worked for ATI/AMD cards in the beta/demo but was disabled for them in the final game. It was re-enabled for ATI/AMD cards in the GOTY version nearly 10 months later.

I tried applying the tessellation tweak on my 290x last night, these are rough numbers that I can remember:

Hairworks off: 55 FPS

Hairworks on (64x): 37 FPS

Hairworks on (6x): 48 FPS

That was without any beasts on the screen, but performance doesn't noticeably drop when fighting beasts with hair. I turned a few other settings down (foliage distance and shadows to high, SSAO) and I get a smooth 50-60 FPS out in the wilderness, and drops down to 40-50 when in populated areas. Overall fairly happy with performance, hopefully a driver update will come soon to improve stuff a bit more!
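For anyone wanting to put those rough numbers in frame-time terms, here is a minimal sketch in Python. The FPS values are the ones quoted above; the function names are made up for illustration, and it assumes the HairWorks setting is the only thing that changed between runs.

```python
# Rough FPS figures quoted in the post above (R9 290X, The Witcher 3).
FPS = {
    "Hairworks off": 55,
    "Hairworks on (64x)": 37,
    "Hairworks on (6x)": 48,
}


def frame_time_ms(fps):
    """Convert frames per second into milliseconds per frame."""
    return 1000.0 / fps


def hairworks_cost(fps_table, baseline="Hairworks off"):
    """Compare each setting against the baseline run.

    Returns, per setting, the percentage FPS drop and the extra
    milliseconds spent on each frame relative to the baseline.
    """
    base_fps = fps_table[baseline]
    base_ms = frame_time_ms(base_fps)
    costs = {}
    for label, fps in fps_table.items():
        if label == baseline:
            continue
        costs[label] = {
            "fps_drop_pct": 100.0 * (base_fps - fps) / base_fps,
            "extra_ms": frame_time_ms(fps) - base_ms,
        }
    return costs


if __name__ == "__main__":
    for label, cost in hairworks_cost(FPS).items():
        print(f"{label}: -{cost['fps_drop_pct']:.1f}% FPS, "
              f"+{cost['extra_ms']:.2f} ms per frame")
```

On these figures, 64x costs roughly a third of the frame rate (about 8.8 ms extra per frame), while the 6x override costs roughly 13% (about 2.7 ms).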
