I don't think it's ever been a thing in tech, tbh. I started with a 486 SX25 and I don't think I've ever bought anything that was "future proof".

You just upgrade, or you turn the "quality" sliders down (quotes because who knows what they actually do a lot of the time...).

I would say it was somewhat achievable a while ago, mostly before DX12 came along (we're only just now starting to see DX12 in better shape, but now we're kind of back to square one with UE5...). Alex and Richard summed it up well: you can have the best hardware there is, but it's going to do jack **** in games that aren't optimised properly.

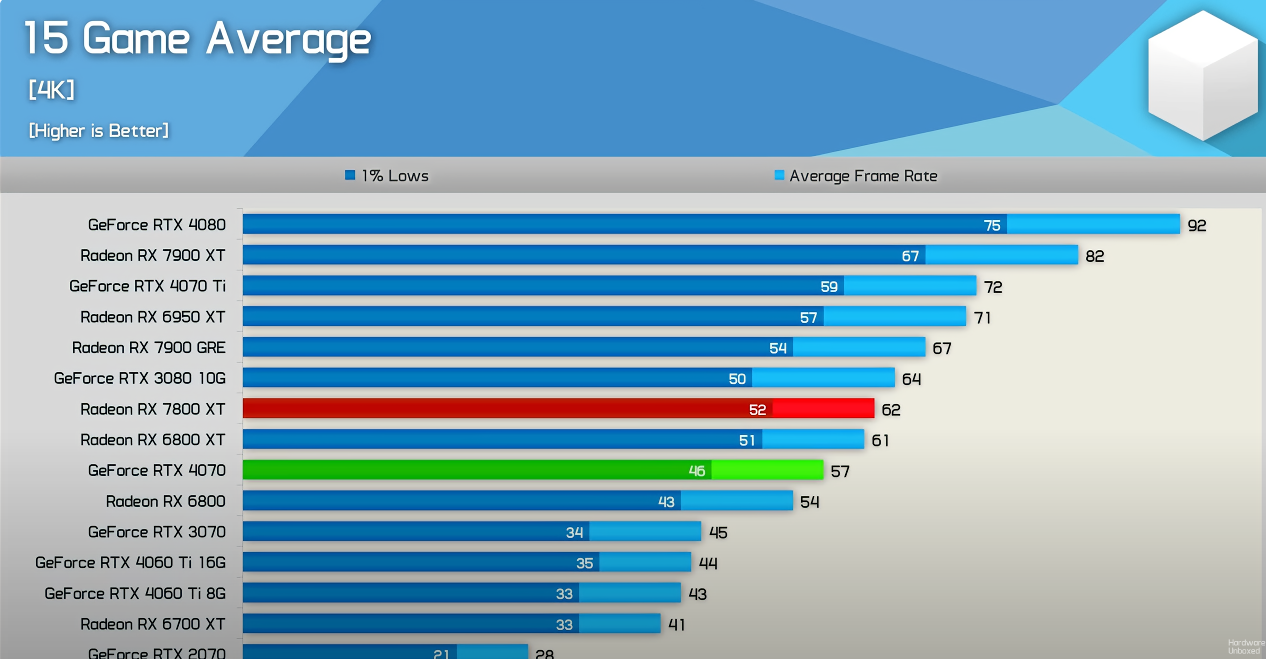

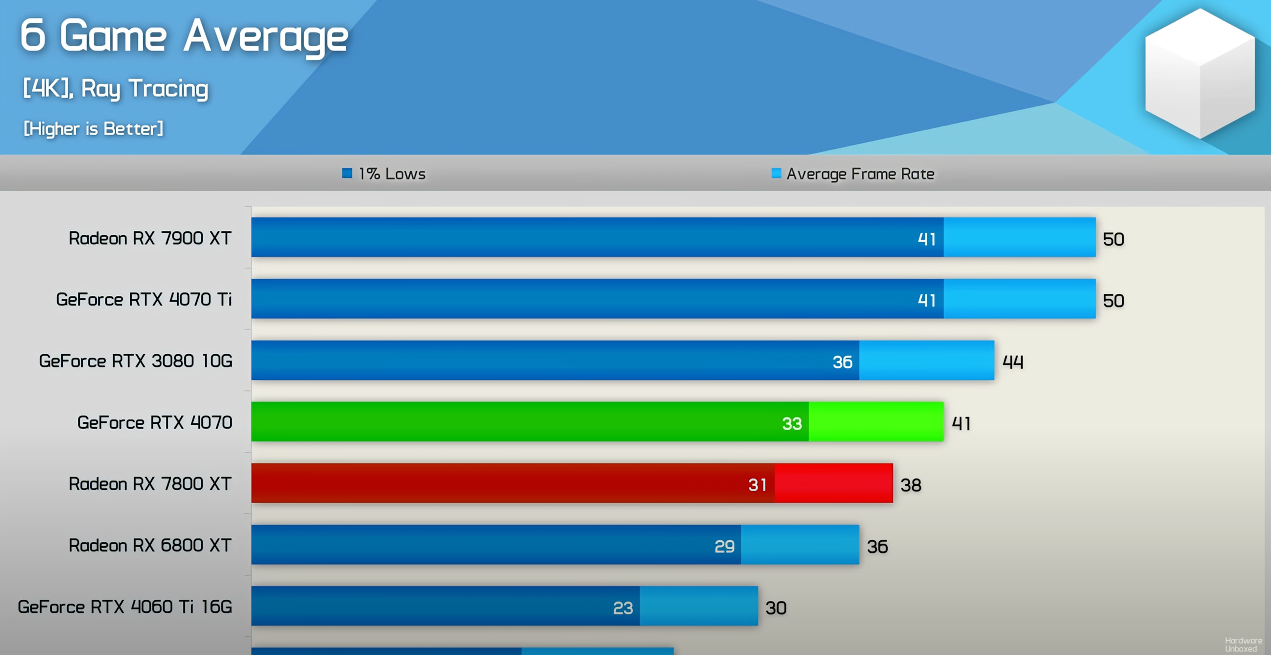

Ultimately, as shown, the real future-proofing nowadays is to wait for those games to get patched, or to rely on tech like upscaling and frame gen to work around the issues on day one.

4 MB SLI for the win!