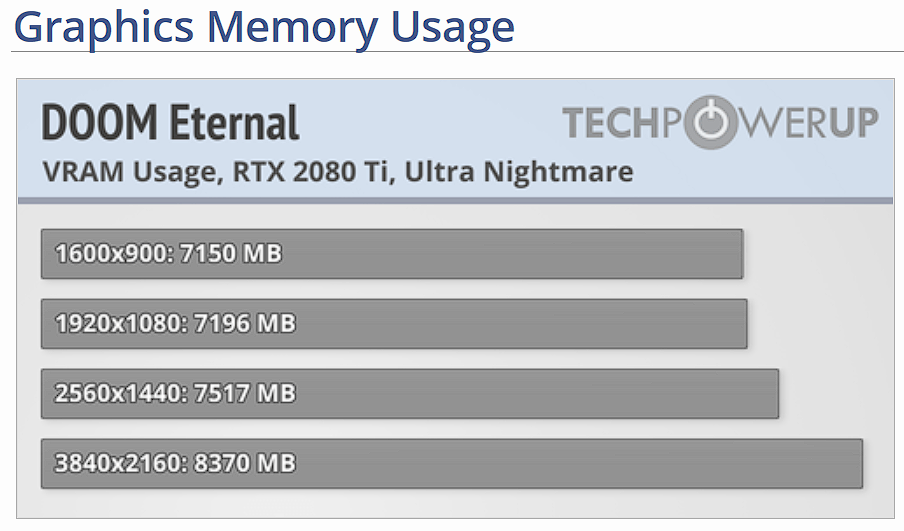

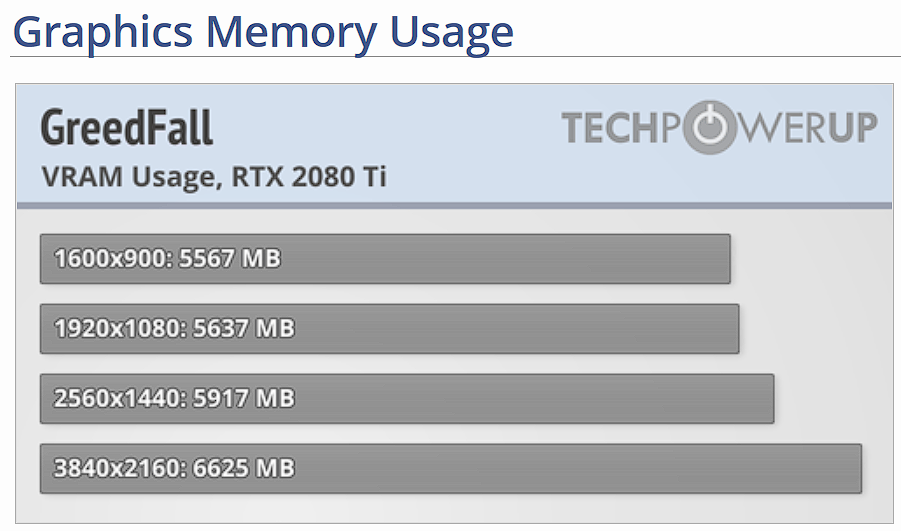

In the latest Hardware Unboxed review of the 3070, the 3070 lost 20 frames because Ultra Nightmare textures used 9 GB of memory at 4K; he had to reduce them to Ultra to bring usage down to 7 GB...

I showed this several pages back. Doom Eternal's real memory usage at 4K Ultra Nightmare is roughly 7 GB (slightly under for me), and most of that is the texture pool, which isn't being used for anything useful. The game simply reserves 4.5 GB of VRAM up front for texture streaming but gets no benefit from doing so, when Low or High, at about 1 GB, is fine.
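
To put rough numbers on that, here is a back-of-the-envelope sketch using only the approximate figures above (7 GB total, 4.5 GB texture pool, about 1 GB pool at Low/High). It assumes, as a simplification, that everything outside the texture pool stays the same when you change the pool size. The function name `estimated_usage` is just illustrative.

```python
# Rough VRAM budget for Doom Eternal at 4K Ultra Nightmare, using the
# approximate figures from the post (all values in GB).
TOTAL_MEASURED = 7.0     # measured "real" usage, roughly
TEXTURE_POOL_UN = 4.5    # texture streaming pool reserved up front at Ultra Nightmare
TEXTURE_POOL_LOW = 1.0   # approximate pool size at Low/High


def estimated_usage(total: float, pool_current: float, pool_new: float) -> float:
    """Estimate usage after swapping one texture pool size for another.

    Simplifying assumption: memory outside the pool does not change.
    """
    non_pool = total - pool_current
    return non_pool + pool_new


if __name__ == "__main__":
    non_pool = TOTAL_MEASURED - TEXTURE_POOL_UN
    print(f"Outside the texture pool: {non_pool:.1f} GB")
    low_pool = estimated_usage(TOTAL_MEASURED, TEXTURE_POOL_UN, TEXTURE_POOL_LOW)
    print(f"Estimated with a ~1 GB pool: {low_pool:.1f} GB")
```

On those numbers, only about 2.5 GB is used outside the pool, so a ~1 GB pool would put the estimate at roughly 3.5 GB. This is a rough estimate, not a measurement.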

Flight Simulator, when measured correctly (real usage), tops out at about 9.5 GB, but nothing can run it at those settings; even a 3090 chokes on frame rate. To get FS2020 playable you have to drop the settings, not because of VRAM limits (even the 24 GB 3090 chokes) but because the demand on the GPU is so high.

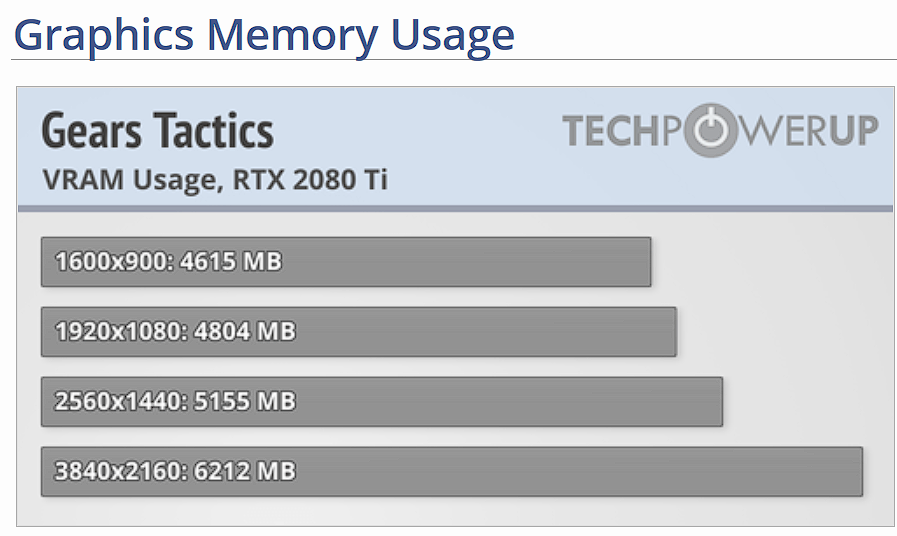

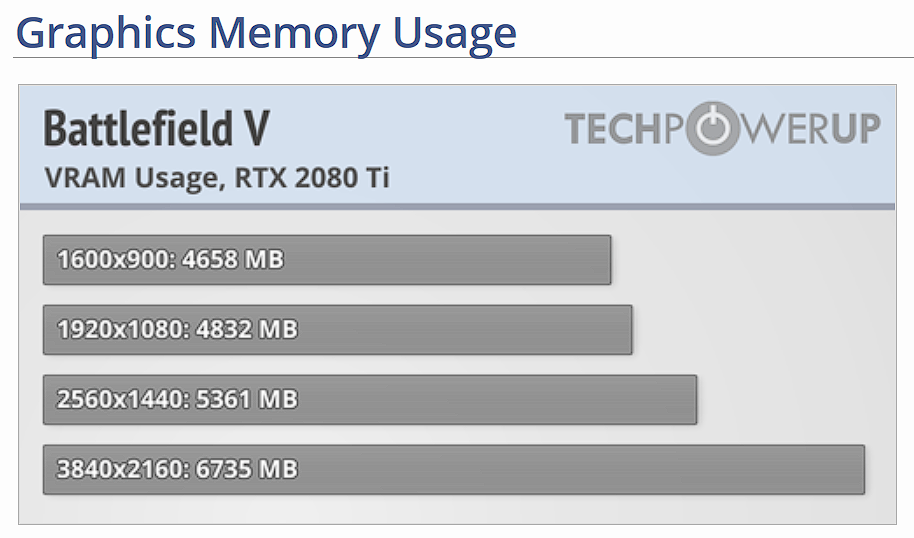

I know there is no difference between Ultra and Ultra Nightmare textures; I'm just saying 8 GB is definitely not enough going into the next generation of games at 4K. It will probably cause issues in 3 out of 10 games, but that is still quite a lot if those are games you plan on playing at maxed settings.
