Yes it does, but it's not the restriction you believe.

The point was that it was *a* restriction, and it seemed you were unwilling to accept that it was a restriction.

For a good 10+ years we have had home cinema projectors operating at 1280x720. We have HD Ready TVs operating at 1366x768. All are capable of displaying a perfectly proportioned 1080p image, albeit at a lower resolution. Furthermore, if you owned a 720p projector, then that's the resolution at which you'd be watching your full-frame 16:9 1080p images. Letterboxed Blu-rays display at an even lower resolution: 1280x533 pixels.
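For anyone checking the arithmetic: a 2.40:1 image fitted to the full 1280-pixel width of a 720p panel is 1280 ÷ 2.40 ≈ 533 rows tall. The minimal sketch below assumes the picture is scaled to the largest size that fits the panel and rounded down to whole pixels. The `fit` helper is purely illustrative and isn't taken from any projector or scaler.

```python
from fractions import Fraction

def fit(panel_w: int, panel_h: int, ratio: Fraction) -> tuple[int, int]:
    """Largest whole-pixel image of aspect `ratio` that fits in panel_w x panel_h.

    Hypothetical helper: dimensions are rounded down so the image never
    exceeds the panel.
    """
    if Fraction(panel_w, panel_h) > ratio:
        # Panel is wider than the content: height-limited, bars at the sides.
        return panel_h * ratio.numerator // ratio.denominator, panel_h
    # Content is as wide as or wider than the panel: width-limited, bars top and bottom.
    return panel_w, panel_w * ratio.denominator // ratio.numerator

SCOPE = Fraction(12, 5)  # 2.40:1
HD = Fraction(16, 9)     # 16:9

print(fit(1280, 720, HD))     # full-frame 16:9 on a 720p panel -> (1280, 720)
print(fit(1280, 720, SCOPE))  # letterboxed 2.40:1 on a 720p panel -> (1280, 533)
```

Exact fractions are used instead of floats so that a ratio such as 2.40 doesn't pick up rounding error before the division.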

This is all well and good but it's irrelevant when we're talking about not utilising the available pixels.

The example should be about not utilising a 720p projector's full pixel count, as that is what the argument is about.

Now, you tell me... have 720p projectors suddenly stopped working with 1080p sources?

Do owners of 720p projectors and 768p HD Ready TVs find Blu-ray completely unwatchable?

Nobody made either of those claims, so once again you're responding to things no one said.

Not having the resolution in the first place is different from having the resolution there and opting not to use it.

It entirely depends on what you watch. I watch a lot more TV series than movies, so the vast majority of my content is 16:9, and I even have a fair number of movies in 16:9 too.

You seem to think I have an issue with 2.40:1. I don't; I think a 2.40:1 screen looks great, but that's not really my argument.

It's not irrelevant. In fact it's quite important to understand the origins of anamorphic projection.

I do understand the origins of anamorphic projection, but I don't think this is the issue that's really at hand here.

Incidentally, the "luxury" of using optics to stretch and recompose an anamorphic image is one of the best ways of achieving a scope screen with maximum panel resolution retained for the home user too. What's your point?

My point is that, cost aside, using an anamorphic lens is significantly better than the method you proposed.

I was simply pointing out that caged was saying the trade-offs you were suggesting weren't worth it just to have a 2.40:1 screen.

I have gone to great lengths to point out the three principal methods of achieving a scope screen and their advantages, so that the uninitiated reader appreciates how each differs.

I may have been overzealous in the face of what appeared to me to be an inability to grasp the advantages of a maximised 2.40:1 image, and for that I apologise.

You chose to go to those lengths because you assumed that, since we were pointing out those trade-offs weren't worth it, we didn't understand you.

Well, you seem to be driven by this idea that every pixel must count. You're concentrating on entirely the wrong things. You should be thinking about the impact of the image. This brings me to your next statement...

I'm not driven by it; I simply like to maximise the resolution of the display I have for the best image quality. I wouldn't want to trade one off against the other; if I bought a 1920x1080 projector, it would be to make use of its full pixel count.

My dear fellow, it's entirely different. Just step out of the obsession with resolution and consider picture size and source quality for a while.

It's not an obsession with resolution; it's making full use of something I've paid for. I don't see your solution as an acceptable trade-off. 1920x1080 is low enough as it is when projected, never mind on a 24" monitor.

Your example of the TV misses one crucial factor in relation to the discussion about nightrider1470's solution: Optical zoom.

Masking the TV is no different from running a projector with a 16:9 projection screen. In that situation you are dealing with a CIW system. So really there's absolutely no benefit to creating a windowed 16:9 image inside the 2.40:1 ribbon. The pictures in that scenario are getting smaller. So your TV example really shows that you haven't understood the concept.

I have understood it; you seemingly don't like my response to it, so you're claiming I don't understand. I understand perfectly well, and this is not an issue at all.

My TV example IS the same, because you are choosing to crop the available image down to an ultra-wide aspect ratio and then squeeze all other content into that smaller space. As I've been saying, it isn't really a good solution.

What we want is a bigger image, not a smaller one. But it is being done in a very specific way. If you want to use a TV example, then it should really be the Philips 21:9.

I understand what you're saying, and no, the Philips 21:9 TV is not valid in this example, because the projectors being discussed are 16:9.

Your example would work if we were talking about an ultra-wide projector, such as one with a 2560x1080 panel, but since we aren't, it's not really valid.

The difference is that the image size is constrained by the display's pixel count. If you aren't using all the available pixels, then you're not using the largest image available.

It's not an obsession with pixels but a clear understanding that if you aren't using the whole of something, you're not getting full use out of what is available. It's a rather simple concept.

Basically, you are talking about picture area, but a 2.40:1 image does not give you the largest picture area on a 16:9 display.
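To put a number on that, the sketch below compares how much of a 1920x1080 panel a letterboxed 2.40:1 image lights up against a full-frame 16:9 image. It assumes the letterbox is exactly 1920x800 (1920 ÷ 2.40 = 800) and ignores overscan; the `coverage` function is illustrative only.

```python
def coverage(image_w: int, image_h: int, panel_w: int = 1920, panel_h: int = 1080) -> float:
    """Fraction of the panel's pixels covered by an image_w x image_h picture."""
    return (image_w * image_h) / (panel_w * panel_h)

# Letterboxed 2.40:1 on a 16:9 panel: only 1920 / 2.40 = 800 of the 1080 rows are used.
scope = coverage(1920, 800)
# Full-frame 16:9 uses the whole panel.
full = coverage(1920, 1080)

print(f"2.40:1 letterbox: {scope:.1%} of the panel")  # 74.1%
print(f"16:9 full frame:  {full:.1%} of the panel")   # 100.0%
```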

What we are doing with the scope screen is something very similar. We are taking an already large 16:9 image and we are adding extra space to the left and right. We are growing the projection screen horizontally. We are doing this because it matches the prime source. It gives the largest viewing experience when playing media that needs, and craves, and deserves the wow factor of a very large screen.

The point of contention is that it's quite a compromise, but you're treating people's objections to it being a good solution as a sign that they don't understand it.

The solution others have proposed is basically "get a bigger 16:9 screen". But that only works to a point.

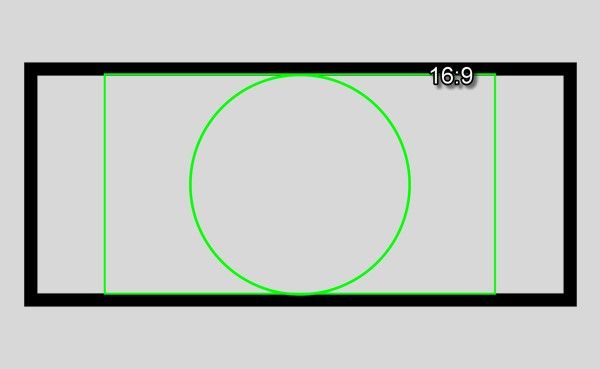

So we start with the traditional average size 16:9 screen and the problem that 2.40:1 just looks lost.

Can you define what "looks lost" actually means? Why not use simple, to-the-point terms, such as "I'd prefer the image to be bigger"?

This is what I was referring to in the other thread when I was commenting on the terminology audiophiles like to use, a lot of it just seems unnecessary.

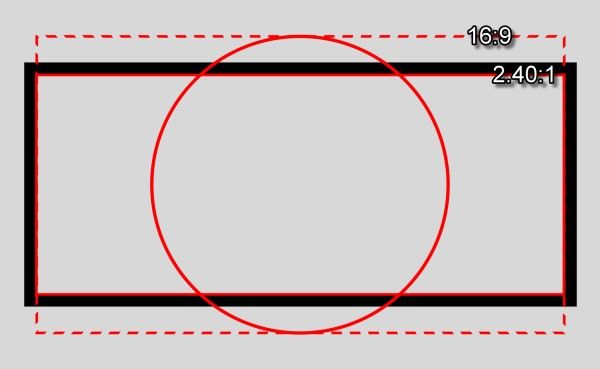

So let's say that the owner's objective is to fill the width of the room with a very large image from his 2.40:1 films. Everyone else here is saying "get a bigger screen". Well let's have a look how that would pan out....

Do you see the problems now? The room is now dominated by a huge screen. There's acres of unused screen surface above and below the 2.40:1 image. This saps perceived contrast. There are also considerations about existing equipment getting in the way of the projected image, so changes to the screen could then mean changes to the audio gear too. What happens if you have very nice floorstanding speakers? Are you prepared to downgrade? Overall, it's not really a good solution.

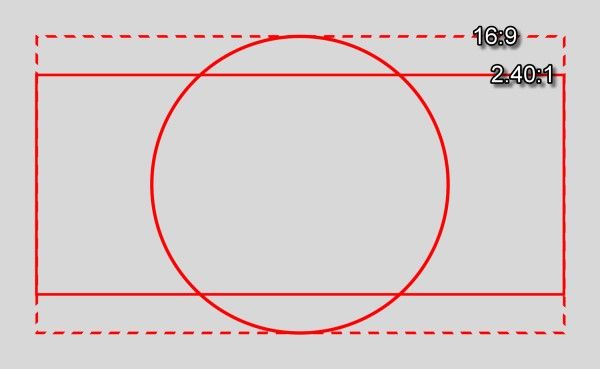

Now look what happens if we keep the same image height as the 16:9 screen, but go for something wider....

Both the 16:9 and 2.40:1 images are the same width and take up the same amount of horizontal space. The difference isn't so huge that one will "dominate" whilst the other doesn't.

Additionally, why not simply have a 16:9 screen filling the width and mask it to 2.40:1 for ultra-wide content? With masks you also have the luxury of being able to mask 16:9 down to any other aspect ratio with the minimum of fuss.

With masking being so easy, I am very confused as to why anyone would go for your solution unless they ONLY watched 2.40:1 content.

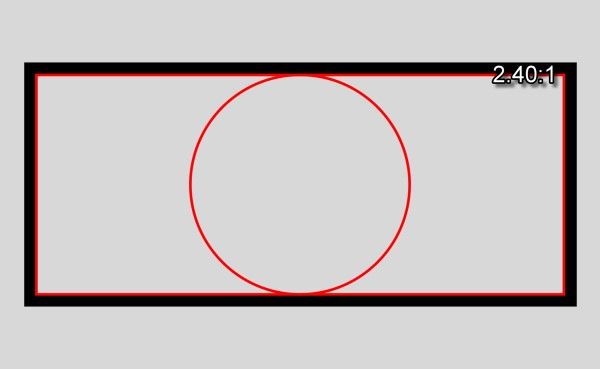

This is very close to how it would be for nightrider1470. The 2.35:1 and 2.40:1 images are framed correctly now. The image has maximum impact. There's a trade-off with resolution for 16:9 stuff, but it's still better than our previous 720p projector, and that's okay until we can upgrade to a projector with lens memories. In the meantime our very clever scaler does a superb job of rescaling 1080p, 1080i, 576p, 576i and any gaming resolutions into the available 1422x800 pixel space. Happy days.
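The 1422x800 figure comes from two steps: a 2.40:1 image spanning the panel's full 1920-pixel width is 800 rows tall, and a 16:9 image 800 rows tall is 800 × 16 ÷ 9 ≈ 1422 pixels wide. A minimal sketch of that arithmetic, assuming dimensions are rounded down to whole pixels; `windowed_16x9` is a hypothetical name used only for illustration:

```python
from fractions import Fraction

def windowed_16x9(panel_w: int, scope: Fraction = Fraction(12, 5)) -> tuple[int, int]:
    """Size of a 16:9 window inside a full-width scope ribbon (hypothetical helper)."""
    ribbon_h = panel_w * scope.denominator // scope.numerator  # 1920 / 2.40 = 800 rows
    window_w = ribbon_h * 16 // 9                              # 800 * 16 / 9 = 1422.2 -> 1422
    return window_w, ribbon_h

w, h = windowed_16x9(1920)
share = (w * h) / (1920 * 1080)  # fraction of a 1920x1080 panel's pixels in use
print(w, h)            # 1422 800
print(f"{share:.1%}")  # 54.9%
```

So a 16:9 source shown this way is drawn with roughly 55% of the panel's pixels, against 100% when it fills a 16:9 screen. That is the resolution trade-off being argued over here, setting aside any benefit from the larger screen.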

What, on the same panel without a scaler? Of course they're not. That's not what I have been saying.

As above, masking makes more sense, is cheaper and involves less fuss. Also, as I mentioned before, 1920x1080 is quite a low resolution on a massive screen anyway, so advocating making it even lower JUST for 2.40:1 content doesn't make much sense.

But when you add a scaler, and then rescale a 1920x1080 resolution image down to a 1422x800 pixel window, then that's your 1080p image displayed inside a 2.40:1 ribbon. The resolution is different, just as it is when that image is displayed on a 720p projector or a 768p HD Ready TV, but the source is still 1080p. I truly hope this puts all matters to bed because I'm getting very tired and bored of explaining this.

Again, as above. Additionally, it's not putting matters to bed. Your stance seems to be that anyone who understands the concept will not have an issue with it, so anyone who has an issue with it doesn't understand.

You also say we're fixating on resolution, but you undermine yourself by mentioning it repeatedly. By your own argument it's all about image size and ratio, so it should be 16:9 that is being scaled down, not 1080p, no?

So before you respond, we ALL understand it, so please don't attempt to explain it again with increasingly elaborate picture demonstrations.