That's very pessimistic of you, I didn't expect that!

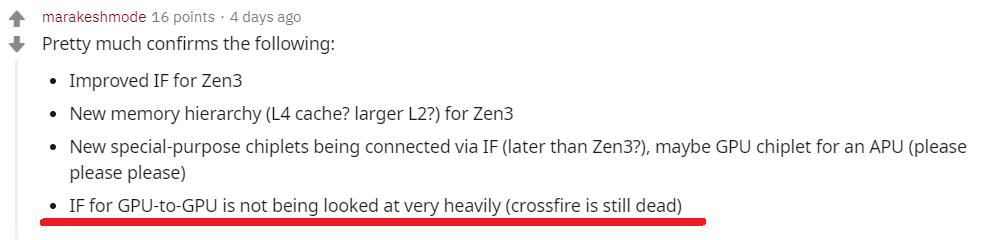

"IF for GPU-to-GPU is not being looked at very heavily"

https://www.reddit.com/r/AMD_Stock/comments/ehmg4c/an_interview_with_amds_cto_mark_papermaster/

He is talking about Zen CPUs. A Zen 2 chip is 74 mm² at 7nm, not 500 mm²! And AMD would struggle at 5nm unless it splits the design into multiple quad-core dies to cut the chip size further, because TSMC has already stated 32% yield for a 100 mm² chip and 80-90% for a 17.5 mm² one.

So if AMD wants to keep the same profit and prices, it has to cut the chip down.

As for GPUs, forget about them at 5nm at their current sizes. They need to be split into multi-chip designs, otherwise the yield of a 500 mm² 5nm GPU would be around 5%. So maybe 2 GPUs per whole wafer!
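
If anyone wants to sanity-check those yield numbers, here is a rough sketch using the simple Poisson yield model, Y = exp(-A × D0). The model choice is my assumption, not something TSMC stated, and the function names are made up for illustration. It backs out a defect density from the quoted 32% at 100 mm² and applies it to the other die sizes mentioned here.

```python
import math

def defect_density(yield_frac, area_mm2):
    """Back out defects per mm^2 from one known (yield, area) point.
    Assumes the Poisson model Y = exp(-A * D0), which is a simplification."""
    return -math.log(yield_frac) / area_mm2

def poisson_yield(area_mm2, d0):
    """Estimated fraction of good dies for a die of the given area."""
    return math.exp(-area_mm2 * d0)

# Calibrate on the quoted figure: 32% yield at 100 mm^2.
d0 = defect_density(0.32, 100.0)

# Die sizes discussed in this thread (mm^2).
for area in (17.5, 74.0, 100.0, 500.0):
    print(f"{area:6.1f} mm^2 -> {poisson_yield(area, d0):.1%}")
```

Under that assumption the 17.5 mm² die lands at about 82%, which fits the quoted 80-90% range, and the 500 mm² die comes out well under 1%, so even worse than the 5% guess. Models that account for defect clustering tend to give less pessimistic numbers for big dies, so treat this as a ballpark only.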