DLSS is fine most of the time.

Erm... you loved it when it was still DLSS 1.0.

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.


I don’t use FG at all on my 4090 as it’s garbage. MFG is even worse from what I’ve seen as it adds even more artifacts and latency.

DLSS is fine most of the time; frame gen is still ****** to date.

Much easier to make up gaps in the data you have than to predict the future.

Nvidia is one of the biggest companies in the world. Why can't they mass-produce their cards rather than making only a few FE cards and leaving gamers at the mercy of these AIB partners? Imagine if Sony/Microsoft decided to limit their own consoles and left it to brands like Asus and Gigabyte.

If this is in response to my post...

hwbusters.com


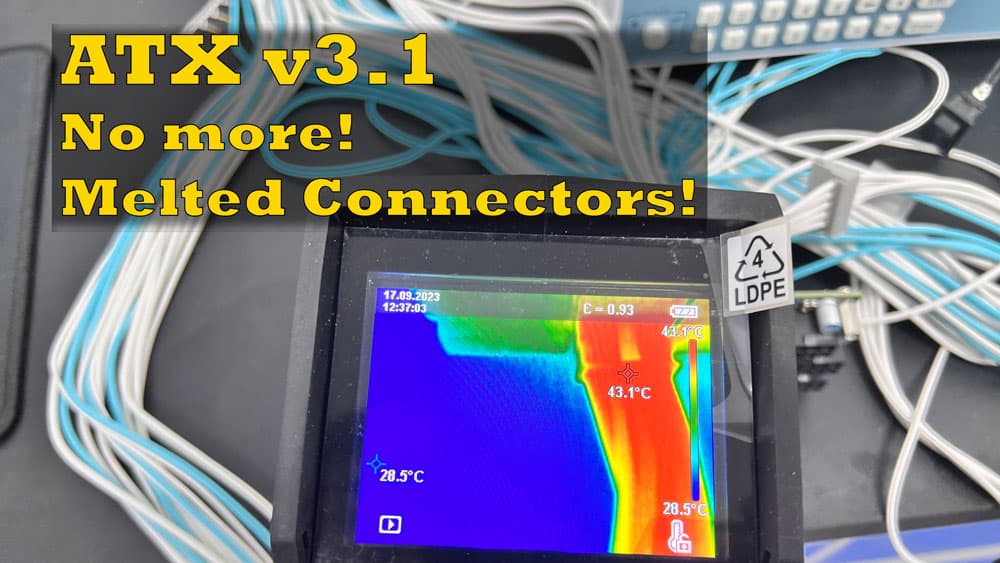

A modular power supply supporting detachable 12V-2x6 cables, must configure the SENSE0 and SENSE1 pins only within the power supply, for all power levels, to ensure correct operation when interchangeable “double-ended” 12V-2x6 cable assemblies are used.
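A minimal Python sketch of the idea in that spec text: the PSU (or cable) fixes each sense pin as either grounded or open, and the card looks the pair up to decide how much it may draw. The wattages below are an assumption on my part, namely the commonly cited maximum sustained values for the original 12VHPWR encoding; check the PCIe CEM / ATX 3.x documents before trusting them, and the 12V-2x6 revision may treat some combinations differently. `PinState` and `max_sustained_watts` are hypothetical names for illustration.

```python
from enum import Enum


class PinState(Enum):
    """State of one sideband sense pin, fixed by the PSU or cable."""
    GND = "gnd"    # pin shorted to ground
    OPEN = "open"  # pin left unconnected


# ASSUMED mapping: commonly cited 12VHPWR max sustained power per combination.
# Verify against the actual specification before relying on these numbers.
SENSE_TABLE_WATTS = {
    (PinState.GND, PinState.GND): 600,
    (PinState.OPEN, PinState.GND): 450,
    (PinState.GND, PinState.OPEN): 300,
    (PinState.OPEN, PinState.OPEN): 150,
}


def max_sustained_watts(sense0: PinState, sense1: PinState) -> int:
    """Look up the ceiling the card should respect for this pin combination."""
    return SENSE_TABLE_WATTS[(sense0, sense1)]


if __name__ == "__main__":
    for (s0, s1), watts in SENSE_TABLE_WATTS.items():
        print(f"SENSE0={s0.value:<4} SENSE1={s1.value:<4} -> {watts}W")
```

Nothing in this lookup is dynamic: the pins only advertise a ceiling, and it's the GPU that decides how much it actually pulls underneath it.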

And these 12VHPWR cables... would we reckon the fire/melting risk is lower on lower-power cards like the 5070 Ti and 5080? Is there any implementation of these where they can be used safely? I ignored the 12VHPWR cable that came with my PSU, but if a future GPU upgrade forces me to use it... I should probably take a closer look.

A 5060 maybe.

From what I can gather from reading up, anything over 360W is a *danger* zone and anything below should be fine.

I power limit my 4090 to 70% (315W) and have done so for some time (it was at 80-90% PL before that). Having said that, I do not want to pull the power connector out to check the end.
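Rough arithmetic for the power-limit numbers above, as a Python sketch. It assumes a 450W default limit for a 4090 (which is what 315W at 70% implies), the six 12V pins in a 12VHPWR/12V-2x6 plug, and a perfectly even current split between them. The even split is the big assumption: the melting reports are about cases where a few pins end up carrying far more than their share. The 360W figure is just the one quoted in the thread, not a spec value.

```python
SUPPLY_VOLTS = 12.0        # nominal 12V rail
LIVE_PINS = 6              # 12V pins in a 12VHPWR / 12V-2x6 plug
DEFAULT_LIMIT_W = 450.0    # ASSUMED 100% power limit for a stock 4090


def limited_power(percent: float, default_w: float = DEFAULT_LIMIT_W) -> float:
    """Board power after applying a percentage power limit."""
    return default_w * percent / 100.0


def per_pin_current(power_w: float, pins: int = LIVE_PINS) -> float:
    """Amps per 12V pin, ASSUMING the load is split perfectly evenly."""
    return power_w / SUPPLY_VOLTS / pins


if __name__ == "__main__":
    for pct in (70, 80, 90, 100):
        watts = limited_power(pct)
        print(f"{pct:>3}% PL -> {watts:.0f}W, ~{per_pin_current(watts):.2f}A per pin")
    for watts in (360, 600):
        print(f"{watts}W -> ~{per_pin_current(watts):.2f}A per pin")
```

Under those assumptions 315W works out to roughly 4.4A per pin versus about 8.3A at 600W, so a power limit buys headroom only as long as the load is actually shared.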

MicroCenter confirms GeForce RTX 5070 Ti prices: one MSRP model and $930 average price.

Caseking prices are absolutely shocking, so expect the same from OCUK...

I’m currently sat here thinking… “but what does it all mean, Basil?!”

I understand that the new pin lengths help ensure a more robust connection (hence H++ being better), but I’m ultimately trying to understand whether the info I’ve set out in this post (re: SENSE0 and SENSE1) is, or isn’t, an additional reason to be wary of using an H+ socket with a 50-series card. It might be a total nothing-burger, but I’m not sure.

Any thoughts, oh wise ones?

It may help to know the difference between a short circuit and an open circuit: a short circuit essentially means two things are connected, whereas an open circuit is the opposite.
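Building on the short vs open circuit point, here's a sketch of how a card could tell the two apart. It assumes the GPU side puts a pull-up resistor on each sense line (a common way to read this kind of signal, but my assumption, not something stated in the thread): a grounded pin gets dragged to 0V and reads low, an open pin sits at the pull-up voltage and reads high. The 3.3V supply, the 2.0V threshold and `read_sense_pin` are all hypothetical.

```python
PULL_UP_VOLTS = 3.3          # HYPOTHETICAL pull-up supply on the GPU side
HIGH_THRESHOLD_VOLTS = 2.0   # HYPOTHETICAL level above which a line reads high


def sense_line_voltage(shorted_to_ground: bool) -> float:
    """Voltage on a sense line that has a pull-up resistor.

    Shorted to ground: the line is held at 0V.
    Open circuit: no current flows through the pull-up, so there is no
    voltage drop across it and the line sits at the pull-up voltage.
    """
    return 0.0 if shorted_to_ground else PULL_UP_VOLTS


def read_sense_pin(shorted_to_ground: bool) -> str:
    """Classify a sense line as 'gnd' or 'open' from its voltage."""
    volts = sense_line_voltage(shorted_to_ground)
    return "open" if volts >= HIGH_THRESHOLD_VOLTS else "gnd"


if __name__ == "__main__":
    # One pin grounded by the PSU/cable, one left open.
    print("SENSE0:", read_sense_pin(True), "SENSE1:", read_sense_pin(False))
```

One consequence of this model: a plug that isn't seated, so the sense pins never touch anything, reads exactly the same as two deliberately open pins.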

Fake frames, fake resolution, is anything real at this point?

Real flames at least?

Thanks @Murphy - all noted. The bit I’m struggling with is that I presume what determines the power being ‘drawn’ is… surely, the GPU. That’s the part which needs more or less power, depending on what your PC is doing at a given time.

In which case, are these pins movable or fixed in the PSU? If movable, how does the PSU know when to change them? And what scenario are these changes in specs looking to ‘solve’? Asking with full humility and acknowledgement of my current ignorance.

Sorry for the ‘additional questions’ - I didn’t want to massively overload my initial post.

What you're struggling with is why, IMO, calling them sense pins is a bit misleading: they're a way to tell the GPU whether a cable/PSU is connected and, if so, the maximum wattage that can be drawn from the PSU or through the cable.

The sense pins (what is or isn't connected to what) would be set when the PSU or cable is manufactured. It's kind of like how the old 6- and 8-pin PCI-E power connectors had the same number of 12V sources (3), but the 8-pin added a couple of extra pins to indicate to devices: 'hey, I'm a better PSU/cable, so you can draw more power from/through me'.
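The 6/8-pin comparison in numbers, as a small Python sketch. It uses the usual 75W (6-pin) and 150W (8-pin) ratings and the three 12V conductors mentioned above, and assumes an even split across them; `Connector` is just an illustrative helper, not anything from a spec.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connector:
    name: str
    live_12v_pins: int   # conductors actually carrying 12V
    rated_watts: float   # power the device may draw through this connector

    def amps_per_pin(self, volts: float = 12.0) -> float:
        """Current each 12V conductor carries at the rated power, split evenly."""
        return self.rated_watts / volts / self.live_12v_pins


SIX_PIN = Connector("PCIe 6-pin", live_12v_pins=3, rated_watts=75)
EIGHT_PIN = Connector("PCIe 8-pin", live_12v_pins=3, rated_watts=150)

if __name__ == "__main__":
    for c in (SIX_PIN, EIGHT_PIN):
        print(f"{c.name}: {c.rated_watts:.0f}W over {c.live_12v_pins} pins "
              f"-> ~{c.amps_per_pin():.2f}A each")
```

Same three live conductors, but the 8-pin is allowed double the current through each one; the extra pins carry no 12V, they just tell the card it's allowed to ask for it.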


It does a bit, and it's only something that occurred to me after you brought this up, so thanks...

Edit: … and if all of that is right, it seems ridiculous that there was never a 0W state in the initial spec?!