
Thought this video was worth a thread. Like the guy who uploaded it, I was quite surprised by some of the results. As expected, the 2080 wins in most of the games, but not by as much as I was expecting.

From video description

Radeon RX Vega 64 vs. GeForce RTX 2080, 2019 update. A like or share (e.g. on Reddit) helps a lot. Thank you. All tests at 1440p.

00:01 - Assassin's Creed Origins | very high

02:11 - Battlefield 1 | high

02:57 - Battlefield V | high

04:06 - Civilization 6 | max

04:36 - Call of Duty: Black Ops 4 | max

05:26 - Deus Ex: Mankind Divided | very high

07:04 - Far Cry 5 | high (HD textures)

08:00 - Fortnite: Battle Royale | high

09:21 - Forza Horizon 4 | ultra

10:50 - Monster Hunter: World | high

12:20 - PUBG | high

13:13 - Shadow of the Tomb Raider | highest

14:41 - Tom Clancy's Rainbow Six: Siege | very high

15:29 - Total War: Warhammer II | high

16:31 - Wolfenstein 2 | max

General Notes: Thought it was time to compare the two after the Adrenalin driver release and before the Vega VII launch, to see where they stand and how the performance delta has changed in certain games.

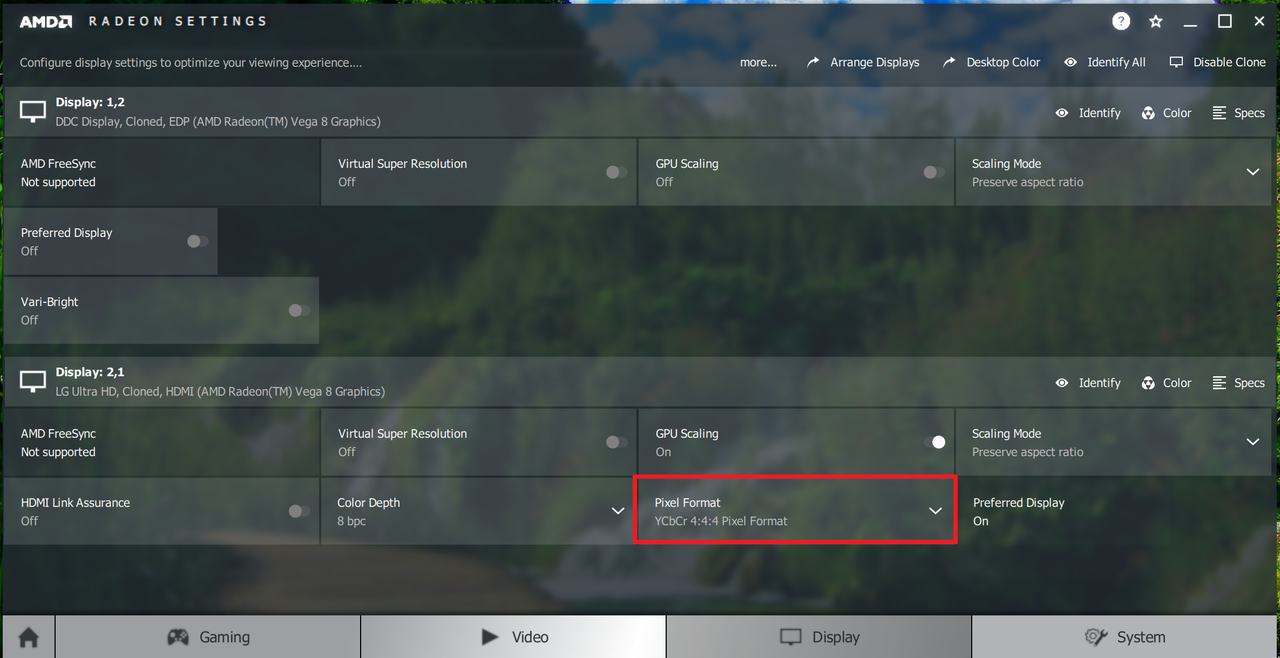

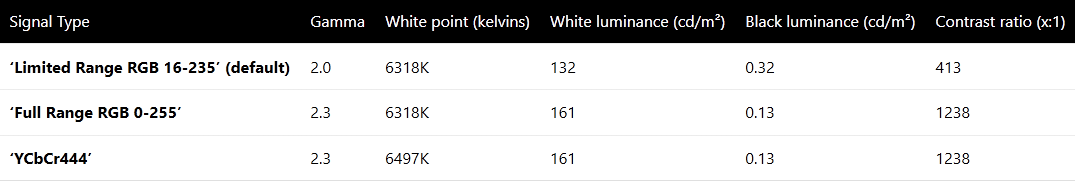

The colors are different because of Nvidia's default HDMI color range setting (it can be changed to full, but I forgot).

I tend to set presets to max only in well-optimized games, where the visual benefits outweigh the loss in performance (which is rarely the case).

Since the RTX is factory overclocked, I gave the RX Vega 64 an easy OC that everybody should be able to achieve with a few clicks: undervolted the P6 state by 50 mV, increased the power target (PT) by 25% and overclocked the HBM by 100 MHz. I expect many Vega owners do that anyway.
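For a rough idea of what the +100 MHz HBM bump does on paper, here's a quick back-of-the-envelope sketch. It assumes a reference Vega 64 memory setup (HBM2 at 945 MHz on a 2048-bit bus, double data rate), which the video doesn't state, and it only gives peak theoretical bandwidth, not FPS.

```python
# Back-of-the-envelope HBM2 bandwidth for the "+100 MHz HBM" part of the Vega 64 OC.
# Assumptions (not from the video): reference Vega 64 HBM2 at 945 MHz,
# 2048-bit bus, 2 transfers per clock (double data rate).

BUS_WIDTH_BITS = 2048      # assumed: two HBM2 stacks x 1024 bits each
TRANSFERS_PER_CLOCK = 2    # HBM2 transfers on both clock edges
STOCK_HBM_MHZ = 945        # assumed reference memory clock
HBM_OC_MHZ = 100           # offset used in the video


def bandwidth_gbs(clock_mhz: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = BUS_WIDTH_BITS / 8
    return clock_mhz * 1e6 * TRANSFERS_PER_CLOCK * bytes_per_transfer / 1e9


stock = bandwidth_gbs(STOCK_HBM_MHZ)
oc = bandwidth_gbs(STOCK_HBM_MHZ + HBM_OC_MHZ)
gain_pct = 100 * (oc / stock - 1)

print(f"stock: {stock:.1f} GB/s, OC: {oc:.1f} GB/s, gain: {gain_pct:.1f}%")
# prints: stock: 483.8 GB/s, OC: 535.0 GB/s, gain: 10.6%
```

Roughly 10% more peak bandwidth on paper; how much of that shows up in games depends on how memory-bound each title is.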

Game Notes:

Despite BFV being an "Nvidia game", the Vega 64 comes much closer to the 2080 than in BF1. Surprising.

Surprise win for Vega in Civilization 6.

Far Cry 5: As expected, the two cards are not that far apart.

Fortnite: Something is wrong here. AMD GPUs do not do well in this Unreal Engine (UE) game in general, but it seems there is a terrible software bottleneck now. I will have another, closer look (it could be the latest patch).

Forza Horizon 4: Just like with prior Forza games, Nvidia has significantly improved performance since launch. Almost all tests online comparing Nvidia and AMD were done with pre-Game Ready Nvidia drivers and early game builds.

Wolfenstein II implements Nvidia's Content Adaptive Shading, which helps the 2080 pull further ahead. The RX still performs great here nonetheless.

Hope you liked that video.

Intel Core i9-9900K at 4.0 GHz

2x8GB DDR4-3400

Palit GeForce RTX 2080 Gaming Pro OC

Sapphire Radeon RX Vega 64 Limited Edition

Let's look at the RE2 demo ("YEAH, I KNOW IT'S A DEMO").

VEGA shows good gains here, and it's defo not running an OC!!

And here's more:

Look at the Battlefield 5 performance! It's clear that VEGA can do really well when either the game is optimised for VEGA or AMD is simply getting more out of VEGA lately. I tell you what, it's interesting to keep an eye on VEGA and see how well it ages.

A Ryzen 5 was used this time:

AMD Ryzen 5 2600X

X470 ASRock Master SLI

GSkill F4-3200C15D-16GTZKO

SAPPHIRE NITRO Radeon RX Vega 64

MSI RTX 2080 TRIO

Corsair AX 860

1440p high

This guy understands

MiauX, 2 months ago:

Factory-OC 2080 vs. fully stock Vega 64, and the Vega is still competitive with a 900€ GPU. Not a bad buy in my book.

Edit:

And point proven again, this time with an HBM OC and BF5 on Ultra at 1440p.