Even raster will be lower. Mark my words.

Do you have benchies of the new AMD cards? Please share if so!

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

What about when we see RT games where SER is being used/applied though? I can't remember exactly, but hasn't Nvidia stated a 40-50% improvement with this on 40xx cards?

I can.

Look at TPU who measure the % FPS reduction when turning on RT. The delta between what a 3090Ti loses and what the 4090 loses is not that great.

Provided the 7950 does indeed have a 420W TBP with 1.5x the perf/watt, it is 2.1x faster than a 6900XT (420W against the 6900XT's 300W is 1.4x the power, times 1.5x perf/watt). That would put it 10-20% faster than the 4090, depending on which review you use. All AMD need to do is match the Ampere % FPS drop and that raster advantage will be enough to see them tie / beat the 4090 in RT.

Now everybody is saying it will come in between Ampere and Lovelace so what I presume they mean is that the % dropoff is greater than Ampere but the raw raster means the final FPS is still faster. I can see that happening as well.
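For anyone who wants to check the 2.1x figure, here is a rough sketch of the arithmetic in Python. It assumes performance scales as board power times perf/watt, takes the 6900XT's reference 300W TBP as the baseline, and treats the 420W TBP and the 1.5x perf/watt gain as rumour and marketing claim respectively, not measurements. The function and constant names are made up for illustration.

```python
# All inputs are rumoured or advertised figures, not benchmark results.
TBP_6900XT_W = 300         # reference 6900XT board power (baseline)
TBP_7950_W = 420           # rumoured TBP for the top RDNA3 card
PERF_PER_WATT_GAIN = 1.5   # AMD's advertised RDNA2 -> RDNA3 perf/watt claim


def projected_speedup(old_tbp_w, new_tbp_w, perf_per_watt_gain):
    """Speedup if performance scales as (power ratio) x (perf/watt gain)."""
    return (new_tbp_w / old_tbp_w) * perf_per_watt_gain


speedup = projected_speedup(TBP_6900XT_W, TBP_7950_W, PERF_PER_WATT_GAIN)
print(f"Projected speedup over a 6900XT: {speedup:.2f}x")
```

If the card ships at a lower TBP or misses the perf/watt claim, the projection shrinks proportionally, so the 2.1x is only as good as those two inputs.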

You've misread/misunderstood. The jittered frames are one of the inputs to the AI model - it then outputs the AI generated pixels.

So, from that document, one of the most important bits is: "DLSS is not designed to enhance texture resolution. Mip bias should be set so textures have the same resolution as native rendering". This, together with a few other bits, tells me one thing - AI is only used to do AA and to stack frames (especially with jitter introduced). All the extra pixels you get come not from AI but from existing jittered frames: when you stack them together, they fill in the blanks.

Well yes, but that's because you can't get motion vectors from one frame. All techniques that use motion vectors (TAA, SMAA multi, DLSS, FSR2.0 etc.) need input from more than one frame.

Which means you can't get a good image from just 1 frame, you have to stack a bunch of them first.

No, that's incorrect. What you're describing is basically a TAA type upscale without use of a neural net model. DLSS2.0 absolutely reconstructs/hallucinates - they show that quite clearly - but it does so with many more inputs to the model than DLSS1.

When NVIDIA talks about hallucinating pixels in the image, they talk about ML in general, and that is how DLSS 1 worked - which was just as bad in quality as all the other AI examples they showed there. That is why they had to redo the whole thing in DLSS 2: instead of letting AI run wildly hallucinating pixels (and usually doing it wrong), they gave it a much simpler and stricter job, which also did not require huge training per game (and is generic now). This is consistent with what I heard from people who supposedly read leaked source code of DLSS 2.

In short, AI in DLSS 2.0 (as of the time that document was written) doesn't seem to be filling in any missing pixels (except for AA reasons), and it doesn't improve texture quality and details either (as NVIDIA say themselves). It stacks frames in a somewhat better way than temporal upscaling (though this evolved too - I believe that's what FSR 2 is based on, with no AI involved at all) but is still prone to errors (no AI is perfect). Nice-looking textures are the effect of a proper mip bias setting for the textures and not of DLSS itself (per NVIDIA's own words in that doc).
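To make the "stacking jittered frames" idea concrete, here is a toy Python sketch. It is only an illustration of the accumulation argument being debated here and is not how DLSS 2 or FSR 2 are actually implemented: the scene is static, there are no motion vectors, no history rejection and no neural net, and every name in it is invented for the example. It shows that one low-res frame leaves holes in the target grid, while four frames with different sub-pixel jitter cover it completely.

```python
# Toy illustration only: static scene, no motion vectors, no neural net.
SCALE = 2  # each low-res pixel covers a SCALE x SCALE block of target pixels


def render_low_res(scene, jitter):
    """Sample one target pixel per block, offset by the (dy, dx) jitter."""
    dy, dx = jitter
    return [row[dx::SCALE] for row in scene[dy::SCALE]]


def accumulate(frames, jitters, height, width):
    """Scatter each jittered frame's samples back into the target grid."""
    out = [[None] * width for _ in range(height)]
    for frame, (dy, dx) in zip(frames, jitters):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                out[y * SCALE + dy][x * SCALE + dx] = value
    return out


# A 4x4 "native" image whose pixel values are just their own index.
scene = [[y * 4 + x for x in range(4)] for y in range(4)]
jitters = [(0, 0), (0, 1), (1, 0), (1, 1)]
frames = [render_low_res(scene, j) for j in jitters]

one_frame = accumulate(frames[:1], jitters[:1], 4, 4)
all_frames = accumulate(frames, jitters, 4, 4)
missing = sum(value is None for row in one_frame for value in row)

print("holes after 1 frame:", missing)
print("matches native after 4 frames:", all_frames == scene)
```

In a real game the scene moves between frames, which is where motion vectors and some way of deciding which history samples to trust come in. That decision step is the part this sketch leaves out.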

That's also incorrect. FSR2 is a TAA type upscale, not a DLSS 2.0 (and XeSS, for completeness) type. Remember DLSS doesn't do AA unless you downsample afterwards (and then it's called DLAA) - but you might get better pixels from the model that look AA'd.

Now, FSR2 doesn't seem much different from DLSS 2, aside from the fact that the latter uses AI for AA (and does a very good job at that, along with regenerating thin lines). For such simple work, generic algorithms should be good enough if chosen well.

I thought we were the ones being peed on because of the pricing.

Thanks for the breakdown. It's like what the resident thumber aspires to be but can't quite articulate as well as you!

"available by q1 2023"

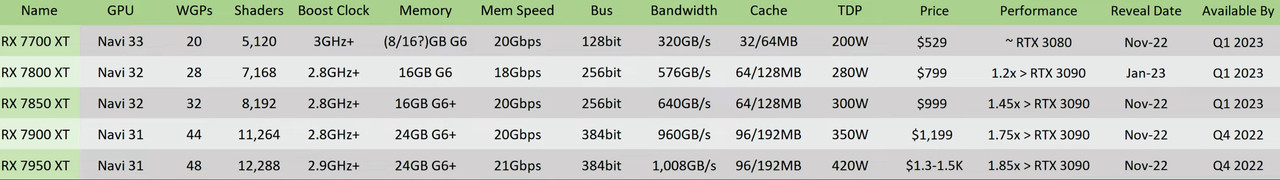

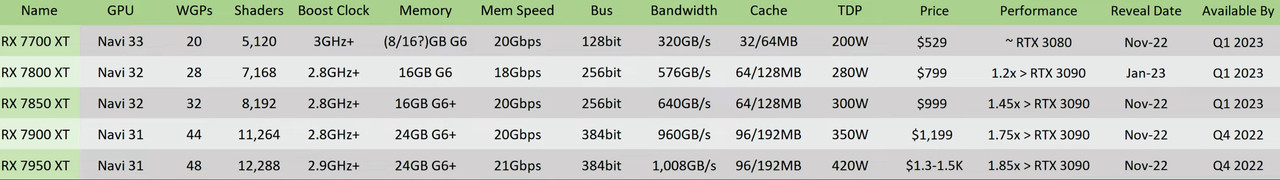

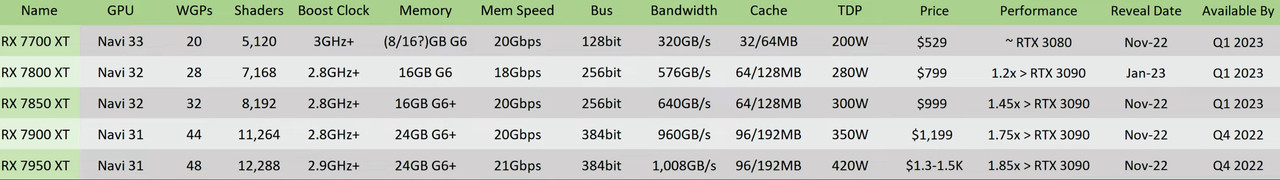

The current leaks/rumors so far.

Just rumors, but it looks logical: they still need to sell the old 6xxx first.

Maybe you can learn a thing or 2 rather than posting the same old drivel, and actually address the topic.

......

Source?

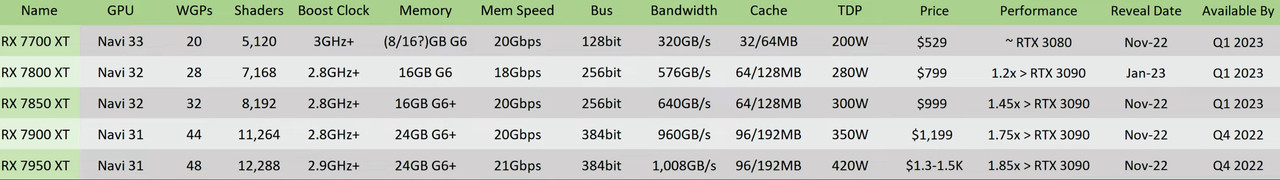

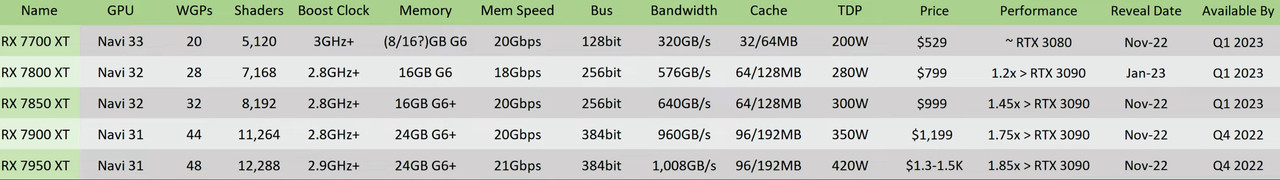

That assessment doesn't make a lot of sense, because that's independent of architecture. It's all about allocating the transistor budget between RT and non-RT. If Nvidia allocates more transistors to RT, that % difference would shrink. You don't need some groundbreaking innovation to reduce the gap; all it needs is a shuffling of transistors. Maybe it's just part of the strategy.

Last I heard, the 7950 could easily hit 4GHz.

Nah, that’s called digging your own grave. Hopefully they don’t have a repeat of that.

I had a different experience last time.. wasn't Frank Azor mouthing off on Twitter,

Those prices are ****

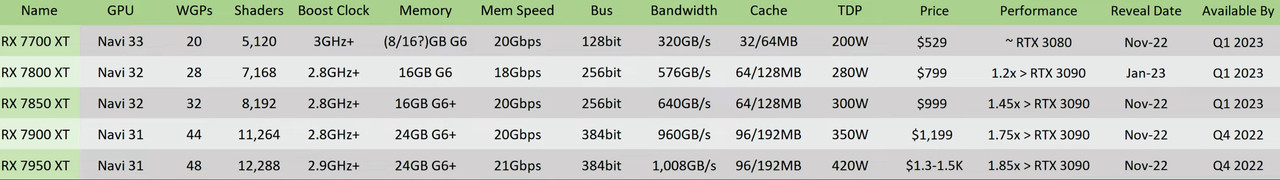

We don't know what AMD have done RT-wise in RDNA3. They could have allocated a bit more transistor budget to it vs RDNA2 and brought down the impact of turning RT on. All I am saying is that if they have matched Ampere in that, then provided the top card really is 420W and AMD hits their advertised 1.5x perf/watt claim (they exceeded it for Vega -> RDNA and again for RDNA -> RDNA2, so I see no reason they wouldn't match or exceed it a third time), the performance gain over the 6900XT is 2.1x, which would put the raster perf about 10-20% ahead of the 4090.

So the maths would be (based on RT costing the 4090 35% of the frames and it costing the 7950 40% of the frames, like it costs Ampere):

| Card | RT off | RT on |
| --- | --- | --- |
| 4090 | 100 | 65 |
| 7950 | 110-120 | 66-72 |

So even with worse RT performance (ie a larger cost in FPS) with enough of a raster advantage it won't matter.
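The same table can be reproduced with a few lines of Python. This is just a sketch of the arithmetic above: the 35% and 40% RT costs and the 110-120 raster range are the assumptions from this post, not benchmark results, and the helper names are invented. It also works out the break-even point, i.e. how much raster lead the 7950 would need to tie the 4090 with RT on under those assumptions.

```python
def fps_with_rt(raster_fps, rt_cost):
    """FPS left after RT removes a fraction `rt_cost` of the frames."""
    return raster_fps * (1.0 - rt_cost)


def break_even_raster_ratio(rival_rt_cost, own_rt_cost):
    """Raster lead needed to tie a rival once both cards turn RT on."""
    return (1.0 - rival_rt_cost) / (1.0 - own_rt_cost)


# Assumed RT costs from the post (fraction of frames lost with RT on).
RT_COST_4090 = 0.35
RT_COST_7950 = 0.40  # i.e. an Ampere-like drop

# Raster numbers normalised so the 4090 with RT off is 100.
rt_4090 = fps_with_rt(100, RT_COST_4090)
rt_7950_low = fps_with_rt(110, RT_COST_7950)
rt_7950_high = fps_with_rt(120, RT_COST_7950)
needed = break_even_raster_ratio(RT_COST_4090, RT_COST_7950)

print(f"4090 RT on: {rt_4090:.0f}")
print(f"7950 RT on: {rt_7950_low:.0f} - {rt_7950_high:.0f}")
print(f"Raster lead needed to tie with RT on: {needed:.3f}x")
```

With these inputs the break-even raster lead is a bit over 1.08x, which is why even the low end of the 110-120 range still edges out the 4090 with RT on.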