How To Cripple Zen 4 In Gaming Benchmarks: AMD Zen 4 vs. Intel Raptor Lake Memory Scaling


Ok, good video.

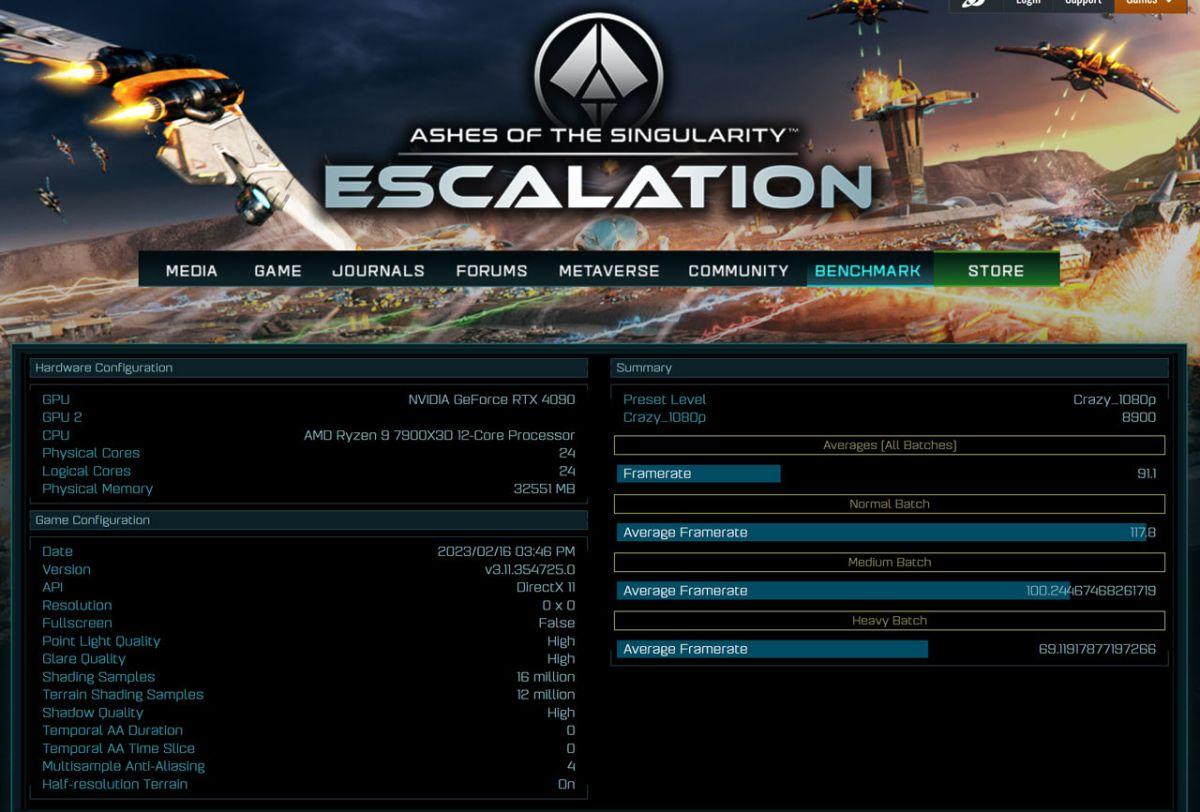

7700X: 4800 MT/s vs. 6000 MT/s, +20% performance at 6000.

13900K: 4800 MT/s vs. 6000 MT/s, +9% performance at 6000.

Fair enough. 9% vs. 20% scaling across the same memory speeds isn't what I'd call extreme. There is a difference, sure, but I still think it's being made into a bigger issue than it actually is.
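To put the two numbers on the same footing, here is a rough sketch that divides each performance gain by the relative memory speed increase (4800 to 6000 MT/s is +25%). The "scaling efficiency" metric and the names in the code are my own assumptions for illustration, not something taken from the video; only the 20% and 9% figures come from the results above.

```python
# Figures quoted from the post; the efficiency metric is an illustrative assumption.
BASE_MTS = 4800
FAST_MTS = 6000

# Fractional performance gain going from 4800 MT/s to 6000 MT/s.
perf_gain = {"7700X": 0.20, "13900K": 0.09}


def scaling_efficiency(gain, base=BASE_MTS, fast=FAST_MTS):
    """Return the performance gain divided by the relative transfer-rate increase."""
    speed_gain = fast / base - 1  # 0.25 for 4800 -> 6000
    return gain / speed_gain


efficiency = {cpu: scaling_efficiency(g) for cpu, g in perf_gain.items()}

for cpu, eff in efficiency.items():
    print(f"{cpu}: {eff:.0%} of the memory speed increase shows up as performance")
```

By this measure the 7700X converts about 80% of the extra memory speed into performance and the 13900K about 36%, which is just another way of framing the same 20% vs. 9% gap.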


You're just as bad as Bencher; both of you should probably be thread banned!
