Don't tempt.

Didn't you just get a reminder pop up in your calendar for that blood test you need to go for at 13:30?

The thread which sometimes talks about RDNA2

- Thread starter CAT-THE-FIFTH

- Status

- Not open for further replies.

I wonder how long it will be before AMD release their version of DLSS?

At least at the moment you can enable ray tracing on Nvidia cards and claw back the fps by enabling DLSS.

AMD really need to get their equivalent out ASAP so the hit to performance isn't so harsh.

It's impossible to say, but I bet it's one of AMD's top priorities right now.

Associate

- Joined

- 7 Apr 2017

- Posts

- 1,762

I think I'll just take my chances and go for the 6800xt, as I would like a faster card as this one for me doesn't cut it at 1440p ultrawide. Also the shady Ampere price gouging etc has left a sour taste, so I'm not sure whether I want to do business with OCUK again.

14:00: 6800XT reviews are released and it bests the 3080.

14:05: Willhub frantically rips out his 3080FE and runs down to CEX before the prices change.

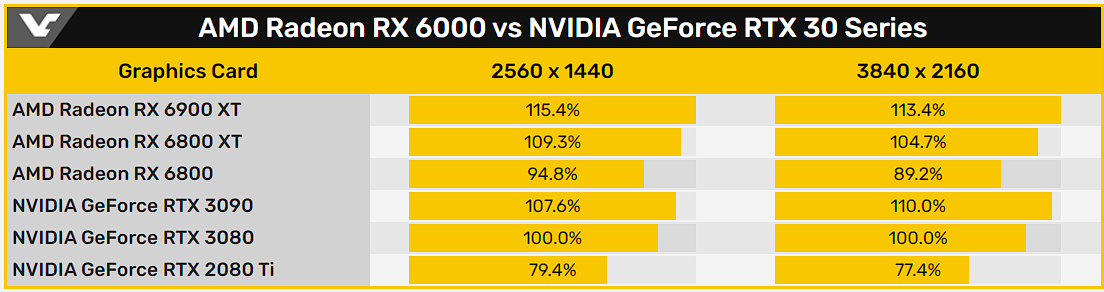

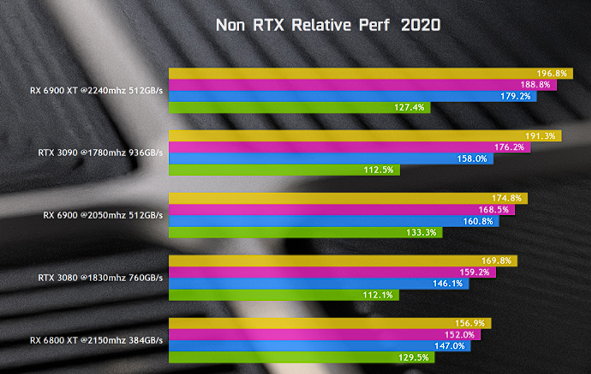

Performance known until now:

AMD discloses more Radeon RX 6900XT, RX 6800XT and RX 6800 gaming benchmarks

https://videocardz.com/newz/amd-dis...900xt-rx-6800xt-and-rx-6800-gaming-benchmarks

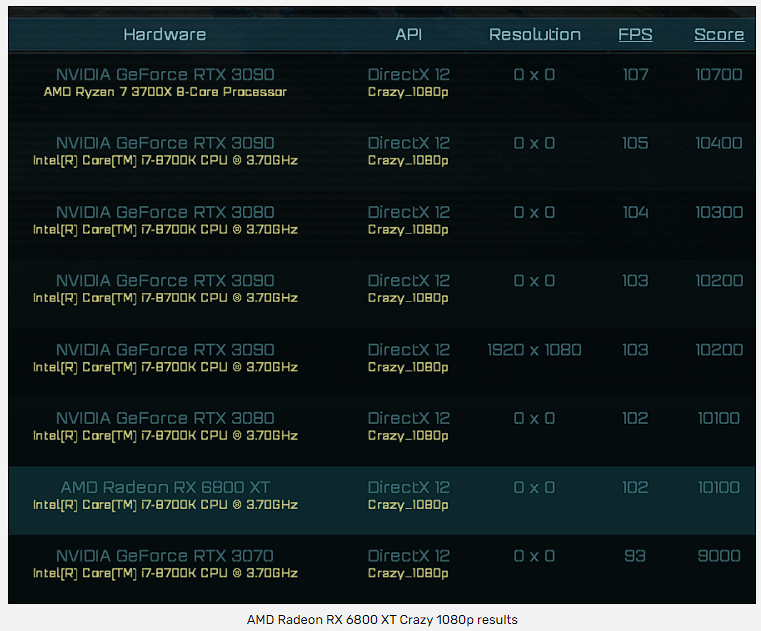

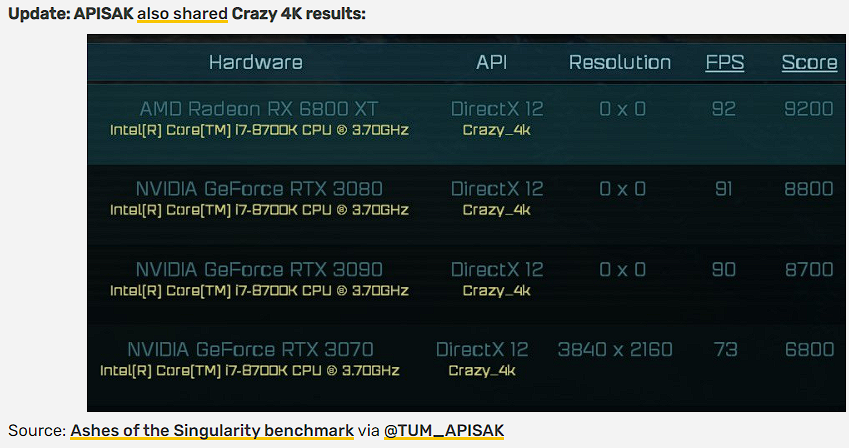

AMD Radeon RX 6800 XT Ashes of the Singularity benchmark leaks

https://videocardz.com/newz/amd-radeon-rx-6800-xt-ashes-of-the-singularity-benchmark-leaks

https://www.chiphell.com/thread-2264233-1-1.html

Read a lot saying the same thing, myself included; however, OC will still sell out of all stock and take pre-orders.

I am curious how NVIDIA will respond to the 6800 XT. The rumored RTX 3080 Ti at 999 won't solve their problems as per these benchmarks as the 6800 XT is already beating the 3090 at 1080p and 1440p. They would need to do price cuts on the 3080 and 3070 and bring in the Ti at 699, although even then the 6800 XT beats that.

Associate

- Joined

- 7 Apr 2017

- Posts

- 1,762

Oh I'm sure they will, and will also profit handsomely. I've always been of the opinion that businesses are here to make money, but after watching the prices of Ampere just inflate exponentially vs other retailers, it just made them look greedy. If it was another company doing it then I'd not bat an eyelid, but OCUK have this forum and appear to want to create a sense of community and constantly remind us of them being the biggest retailer etc etc, but the Ampere launch showed a total disdain for their customers.

At present, the game devs are just tacking on RTX as an afterthought. I was watching the Digital Foundry videos of next gen RTX implementation on consoles and it looked much more polished and impressive compared to the lame implementations on PC we've got so far. Going forward, it will be a feature which devs will take seriously.

It's not going to be "heavily featured" if you mean in terms of implementation. The hardware specs of the current generation of consoles (and GPUs) do not support that.

It's going to be "heavily marketed" though, that's for sure.

I wonder how NVIDIA will respond to the 6800 XT

Change manufacturing node. While they can't get wafers due to TSMC being overloaded right now, launch a campaign in Q1 with clever marketing, perhaps some MSRP unicorns, and back to the pre-order; blame Covid and unprecedented demand, while the Tis actually drop at the back end of Q2.

I doubt it'll be very long if it helps consoles provide extra performance!

I think I'll hold fire and see how things pan out.

Currently trying to find a decent UW monitor that supports VRR on both GPU vendors equally, without being ridiculously priced, as I have a G-Sync only monitor atm.

Plus my setup isn't really struggling as I'm way behind on current games, so I think it would be sensible to wait and see how AMD's super resolution turns out.

True, they even hoiked the 5700 AMD card prices by £100 in the last day or so... I guess hoping to cash in on misclicked purchases or desperate buyers. It's a really scummy optic.

While they won't suffer or go bust, OC did lose tens of thousands of £££££ of future business in the coming years from this client, and a few others.

I got a Dell S3220DGF 31.5", honestly couldn't be happier for the price/performance, and it's been beautiful and faultless. Unless you want to spend an extra grand it's perfect, and I'm running G-Sync on my RTX card atm at 165 Hz (it's a FreeSync 2 monitor).

I always turn ray tracing on, but in fairness it's hardly noticeable apart from looking at oneself in a mirror.

RT has been kept at a minimum due to Turing being so bad at it. Ampere's performance is ~30%+ better. So we should get to see more RT in games where Nvidia offers better IQ, while AMD keeps up by doing 1/2 or 1/4 resolution. There's a pretty decent DF video covering Spiderman on the PS5 which shows how AMD will scale back IQ to maintain performance, a decent approach.

DF also covers Control, which has a few static shadows such as under desks. Destroy the desk and the shadow still remains. Better RT performance will allow developers to resolve such corner cutting.

I'm amazed that people think AMD have to win against NVIDIA on the first attempt at ray tracing. Furthermore, they were so far behind performance-wise and had so many other disadvantages... you can't solve it all in one go.

The only reason I'm upgrading my 1080Ti is for next gen RT. I was hoping AMD had held something back for RT.

The performance of console hardware this generation is not enough to heavily implement it in consoles (never mind high-end GPUs). Even if devs really take it seriously and factor it in from the start of development, they are still going to have to use it very judiciously so as not to tank performance.

So much click bait on YouTube at the moment... So many channels to block ^-^ "The ultimate $2000 6800xt build" with a thumbnail of something amazing looking, followed by a video of a guy saying,

"This is what we wish we could make" and no actual parts or build or even a 6800. So many of these around, it's like O-o

I can recommend the iiyama GB3461WQSU. 34" UW IPS

Yeah, it's the favorite youtube business model. Sadly I don't think it will go away anytime soon.

I am a heavy YouTube user (signed up for Premium with a VPN via India and it's only a few quid per month... no ads, woohoo!) and have been on an absolute channel blockathon in the last few months, getting rid of all of the Mickey Mouse techtubers vying for attention. 99% are complete garbage.

Associate

- Joined

- 19 Jun 2017

- Posts

- 1,029

I just took a step back to look at the situation:

- cards look evenly matched at 4k

- Nvidia can still provide non-linear gains through DLSS at 4k

- But AMD has promised Super Resolution to counter DLSS

- AMD can provide low single digit gains through SAM but as of now will be only limited to a narrowly defined platform

- Nvidia has promised something similar that will be more widely applied

Seems RT is the only tie-breaker here... if Nvidia can hit 120 fps at 1440p even with DLSS on, it suddenly starts looking OK.

I would also add DLSS to the mix for its non-linear potential, and can only postulate it would be faster on Nvidia due to dedicated tensor cores and more refined model parameters.

So if the economics of both products are comparable, I believe the broader market will prefer the RTX 3080 over the RX 6xxx.

I personally don't look at VRAM as a decider, though others might disagree.

That's next-level thinking, Rich... Think I'm gonna go with a bit of that VPN, pay-from-India action.

Caporegime

- Joined

- 7 Apr 2008

- Posts

- 25,195

- Location

- Lorville - Hurston

When did the reviews drop?
