
vram.

- Thread starter Michael Durrant


- Joined: 9 Nov 2019
- Posts: 104

> No, it is not. The 5070/5080 having the same VRAM as last gen is dumb, but Nvidia don't care, so just get your wallet out.

Exactly. The 5080 would be very tempting if it was 24GB, but then watching the latest Gamers Nexus video, he showed an MSI Vanguard edition with 24GB stamped on the box, so I dunno if that's true or a typo.

- Joined: 9 Nov 2019
- Posts: 104

> I recommend getting a GPU with 32GB of VRAM to be on the safe side if buying one in 2025

It's going to take a lot for me to switch from AMD to Nvidia.

Just got to be careful, if it's an Nvidia GPU, that 3 out of 4 VRAM memory chips are not fake ones.

Associate

- Joined: 1 Sep 2013
- Posts: 1,603

> It's going to take a lot for me to switch from AMD to Nvidia.

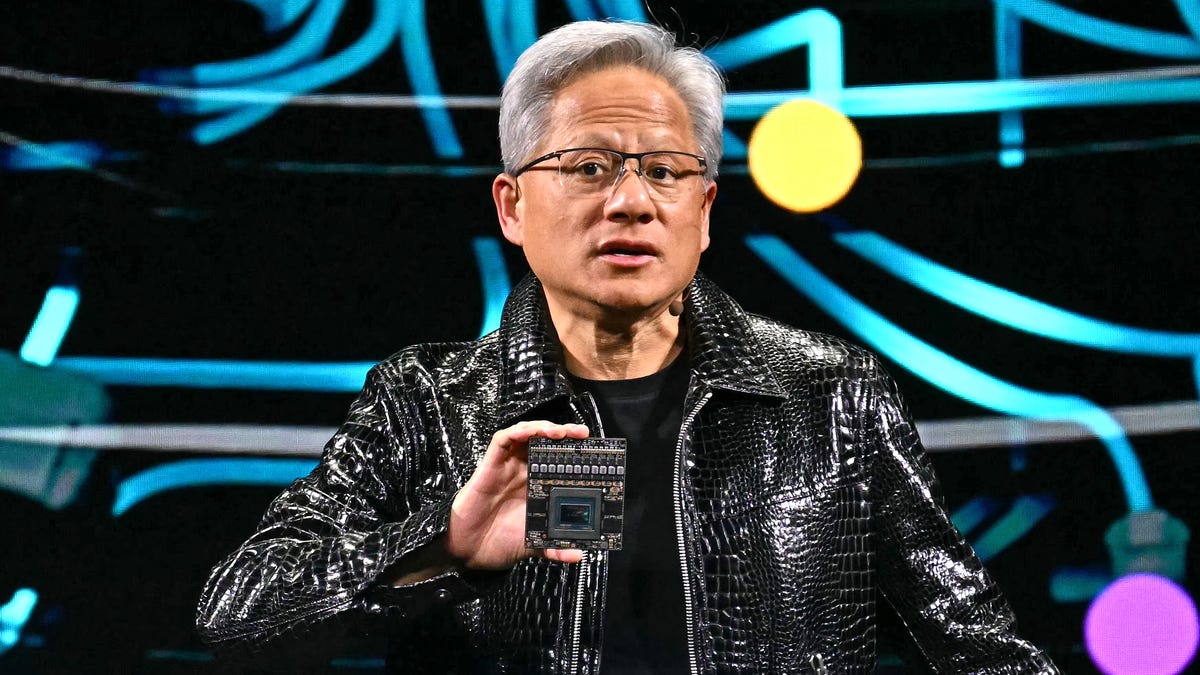

Will a free leather jacket do

Associate

- Joined: 22 Nov 2020
- Posts: 2,088

> I have a 4070ti (12GB) and play at UW 1440p.
>
> There are numerous games which fill up the 12GB so I will be moving to at least a 16GB card this year (be it AMD or NVIDIA).

Shame that card could have easily had the grunt but for vram.

- Joined: 9 Nov 2019
- Posts: 104

> Will a free leather jacket do

The shine off that thing!

$8990

PATENT PRINTED CROC COLLAR BLOUSON


We’re Still Thinking About These Super Weird Gadgets From CES 2025

Plus, Nvidia CEO Jensen Huang delivered his keynote in a new leather jacket — part of his signature look

kotaku.com



> I have a 3070 with 8GB VRAM and a 34" UW monitor. My games run fine, and I have no idea what people are talking about. Just to prove my point, VRAM is, more or less, a pointless discussion without actual numbers.

Enjoying your 98'234th playthrough of Half-Life 2?

> The 5080 is a joke.

Agreed. Really needed to have 24GB at that price. Maybe the super duper turbo edition will correct that next year. 16GB is fine if it's a card well under a grand.

- Joined: 9 Nov 2019
- Posts: 104

> Shame that card could have easily had the grunt but for vram.

Sounds like a perfect business plan, doesn't it?

> I have a 3070 with 8GB VRAM and a 34" UW monitor. My games run fine, and I have no idea what people are talking about. Just to prove my point, VRAM is, more or less, a pointless discussion without actual numbers.

People generally don't buy current gen mid-high range cards to play old or low req' indie games.

Personally I was seeing V-RAM issues with almost anything on Unreal 5 with 8gb on a 3060ti and I was running out of memory before I was running out of grunt, the same was true of the RE Engine with some of the newer releases (RE4R for example). I was having those problems on a 2560x1080 screen, and I've since upgraded to higher resolution monitors.

I currently have mixed usage between a 3440 x 1440 34" Ultrawide and a 4K 65" TV, 12gb isn't enough for my use cases and I can't exactly see 16gb lasting me for as long as I'd like either while still being borderline in some instances. There is of course the "turn down some settings" argument, but the problem there is the same one I mentioned earlier, running out of VRAM before running out of power feels like forced obsolescence at prices which take the pee.


> People generally don't buy current gen mid-high range cards to play old or low req' indie games.

Eh... I just finished Starcom 1 and 2 from Steam - both are low req indie games. I spent 42h in the second one. All that on a 4090.

People definitely don't buy an expensive GPU just to play AAA games; they buy them for a variety of reasons, not always with games in mind as the priority. And, as I've said many times in other topics, gameplay>>graphics. I spent 60h+ playing another super low req indie game, Vampire Survivors. My friends and family also play both some AAA games and older or indie games on cards like the 3070, 3080, 6800XT etc. - so the middle+ range of the respective generation. If they make a good AAA game that plays well, is fun and also has good graphics, I'll play it. Most of them suck these days, though, so I have MUCH more fun playing indie games.

> Personally I was seeing V-RAM issues with almost anything on Unreal 5 with 8gb on a 3060ti and I was running out of memory before I was running out of grunt, the same was true of the RE Engine with some of the newer releases (RE4R for example). I was having those problems on a 2560x1080 screen, and I've since upgraded to higher resolution monitors.

The above said, I fully agree with vRAM being an issue - not in indie games, but sometimes I want to see/experience bling too, and I already had issues with Borderlands 3 on my 8GB NVidia card back then, with horrible stutter over time because of it. Since then, everyone I know who wanted a new graphics card got told by me to avoid 8GB: get min. 12GB or suffer. And then AI models came along, and they also have high vRAM requirements if one wants to play with them at home. It's not just games, and NVIDIA loves to push AI everywhere, yet they skimp on the vRAM needed to even run that properly.

> I currently have mixed usage between a 3440 x 1440 34" Ultrawide and a 4K 65" TV, 12gb isn't enough for my use cases and I can't exactly see 16gb lasting me for as long as I'd like either while still being borderline in some instances. There is of course the "turn down some settings" argument, but the problem there is the same one I mentioned earlier, running out of VRAM before running out of power feels like forced obsolescence at prices which take the pee.

As I said a bit earlier - that sounds just like some company's perfect business plan, doesn't it?

@Tinek I do agree with you in terms of playing a range of games despite hardware, I have hours in low requirement 2D titles that I thoroughly enjoy, but presumably if you're spending £500-1000 you intend on playing more recent and demanding things too.

Really should have said "only" in my statement, poor wording on my end!

Absolutely spot on with the business plan, in fact I'm pretty sure there's videos and articles knocking around where people modified certain cards to double their V-RAM and the experience became night and day. Not exactly an easy solution for 99% of people in the market however, and one that would make me sweat when spending that much on a piece of hardware. I had borderline breakdowns doing delids back in the day and that was on CPU's that cost less than £200, and it was a much easier process!


> @Tinek I do agree with you in terms of playing a range of games despite hardware, I have hours in low requirement 2D titles that I thoroughly enjoy, but presumably if you're spending £500-1000 you intend on playing more recent and demanding things too.

That WAS the plan but then publishers decided to create and release flop after flop after flop... You get me

Not my fault most of these games just sucked so hard - I don't play them for graphics alone, bad game is still a bad game, waste of time. I liked CP2077 (after many patches and fixes - I've waited many months after release before playing it), though, as an example.

> Absolutely spot on with the business plan, in fact I'm pretty sure there's videos and articles knocking around where people modified certain cards to double their V-RAM and the experience became night and day. Not exactly an easy solution for 99% of people in the market however, and one that would make me sweat when spending that much on a piece of hardware.

That, however, sounds like it COULD be a potential business opportunity too - modifying GPUs with more vRAM, hmm!

vRAM itself isn't that expensive, but the service would likely cost something too. And of course no warranty.

> I had borderline breakdowns doing delids back in the day and that was on CPU's that cost less than £200, and it was a much easier process!

Not something I wanted to play with myself. Same as I don't touch liquid metal but instead phase change pads (one sits now on my CPU, works great).

> That, however, sounds like it COULD be a potential business opportunity too - modifying GPUs with more vRAM, hmm!
>
> vRAM itself isn't that expensive, but the service would likely cost something too. And of course no warranty.

I think if someone was confident doing that sort of work, and bought largely "dead" GPU's for low cost? Yeah, there's absolutely a potential for a bit of a boutique market I think. More of a side income but I think you could make money on it, especially if you offered it as a mixed repair/upgrade service with a no fee on failure.

I've seen a few videos where people bought supposedly dead GPU's for dirt cheap on ebay and more than half of the problems were something minor. How many 3000 and even 4000 series cards are out of warranty now that could benefit from a little TLC?

Interesting thought.

> I think if someone was confident doing that sort of work, and bought largely "dead" GPU's for low cost? Yeah, there's absolutely a potential for a bit of a boutique market I think. More of a side income but I think you could make money on it, especially if you offered it as a mixed repair/upgrade service with a no fee on failure.

With proper hardware in such a repair shop, and the confidence, one could actually offer a warranty too for some small fee. Or one could just get insurance these days, which often works even better than a warranty.

> I've seen a few videos where people bought supposedly dead GPU's for dirt cheap on ebay and more than half of the problems were something minor.

Even LTT released such a video not long ago, with similar results.

> How many 3000 and even 4000 series cards are out of warranty now that could benefit from a little TLC?

Which reminded me to check auctions for 4090 pricing, out of curiosity - it seems that with every new leak the average price goes up; some are already priced at 2k+ and people bid 1.5k+ for used ones (not long ago I saw plenty for close to 1k - panic sellers). Makes me wonder if they know something about 5090 availability that we don't know yet. Also, if the new AMD card is really around 500+ for the performance of a 5070Ti, with FSR4 etc. - I'd be tempted to switch and wait this generation out to see what the future brings. Maybe better AA games, maybe actually better GPUs and not sidegrades, etc.