These kinds of comparisons make me think there is some kind of bug in, or misuse of, the DLSS models under some settings.

Nvidia have state-of-the-art expertise in deep-learning (DL) super-resolution; their results are well published and far better than standard upscaling algorithms. So it is a bit of a mystery why DLSS performs so badly in some cases.

It is even more of a mystery why Nvidia let this through their QA process. It makes them look bad, which is a shame, because such techniques have huge potential to get us to high-quality, higher-resolution displays. At 4K there is an insane number of pixels, but the actual information content is far lower.

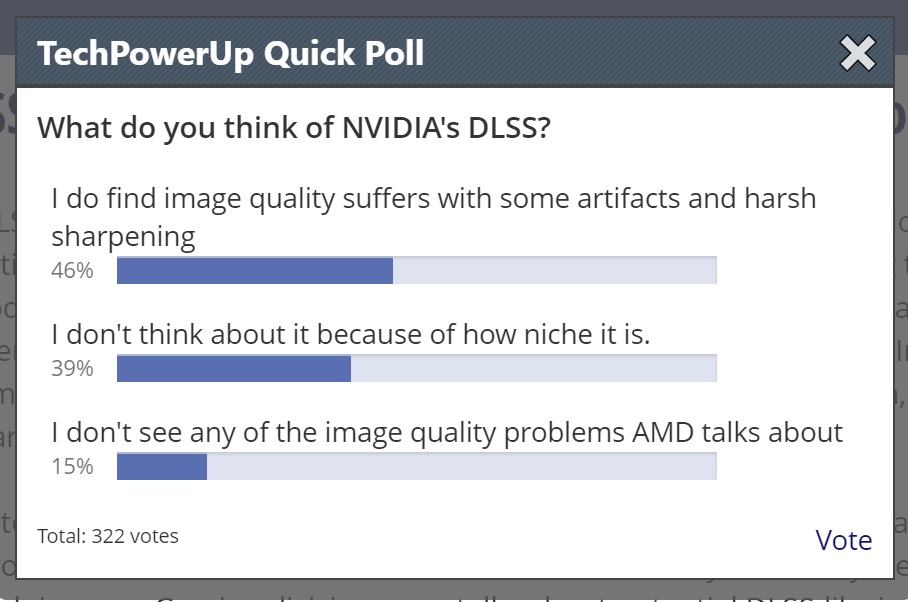

I hope to see big improvements in the coming months, but undeniably this is a great balls-up on Nvidia's part. The worst part is that they will now have an uphill battle to convince the skeptics that this is indeed a worthwhile approach for the future.