So everyone's on a bike?

Oh no... you had to. You do know what's coming now, right?

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

A third player would be brilliant, and it is needed, but I have zero confidence that Intel will be competing against Nvidia or AMD in gaming graphics.

I am optimistic after seeing the Tiger Lake benchmarks; Tiger Lake houses the integrated LP version of Xe. Intel will also be using the MCM approach, which means price should rise roughly linearly as the chip gets bigger, compared to the exponential growth we are accustomed to because of yield issues. I would like to get my hands on a 4-tile version of the graphics card if they launch it. Nothing like 60 billion transistors inside your case.
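A rough sketch of why tiling helps with cost, assuming the textbook Poisson yield model (yield Y = e^(-D·A), where D is defect density and A is die area). The defect density and tile size below are made-up numbers for illustration, not Intel's, and the model ignores packaging, interconnect and binning costs, so treat it as a back-of-the-envelope illustration rather than real economics.

```python
import math

# Hypothetical inputs for illustration only, not real Intel/foundry figures.
DEFECT_DENSITY = 0.001   # defects per mm^2 (0.1 per cm^2), assumed
TILE_AREA = 150.0        # mm^2 per tile, assumed


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under the simple Poisson model Y = exp(-D*A)."""
    return math.exp(-defects_per_mm2 * area_mm2)


def cost_per_good_die(area_mm2: float, defects_per_mm2: float) -> float:
    """Silicon area spent per *working* die: area divided by yield (arbitrary units)."""
    return area_mm2 / poisson_yield(area_mm2, defects_per_mm2)


def monolithic_cost(n_tiles: int) -> float:
    """One big die with the same total area as n_tiles tiles."""
    return cost_per_good_die(n_tiles * TILE_AREA, DEFECT_DENSITY)


def mcm_cost(n_tiles: int) -> float:
    """n_tiles small dies, each tested before packaging, so a defect scraps only one tile.
    Packaging and interconnect costs are ignored in this sketch."""
    return n_tiles * cost_per_good_die(TILE_AREA, DEFECT_DENSITY)


if __name__ == "__main__":
    for n in range(1, 5):
        print(f"{n} tile(s): monolithic {monolithic_cost(n):8.1f}   MCM {mcm_cost(n):8.1f}")
```

With these assumed numbers the MCM column grows in direct proportion to the tile count, while the monolithic column grows faster than linearly, because every extra mm² on one die also lowers the chance that the whole die works.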

He is breaking NDA!

Installing the biggest and best NVIDIA graphics card

https://twitter.com/sjvn/status/1303014997908389894

Good grief, that's the funniest thing I've read in a while. Good job, that man.

I am more hopeful about Intel's Xe than about any Radeon release. They are 15 billion transistors short of offering any kind of competition at the enthusiast level (3080). I feel they have squandered their 7 nm maturity purely through a lack of ambition; they could have really turned the tables this time. I think it would be safe to write off the Radeon brand and hope for Intel to fill the void.

Edit: And don't forget that the flagship Ampere die is 54 billion transistors, while the RTX 3090 is roughly half of that. Nvidia is just slacking off while thanking AMD for letting them extend Ampere's shelf life by two more years.

AMD chips will be smaller due to the lack of tensor cores and dedicated ray tracing cores.

That could also make them slower.

Well, this is a fun little thing when you consider how AMD's RT implementation is supposed to work: shaders can do RT calculations instead of rasterisation when needed. But without dedicated cores for that stuff taking up die space, they can use smaller dies and still have more transistors for normal GPU work... and thus be faster.

Yeah, Intel finally caught up with AMD's 3-year-old iGPU.

I have this.

I believe Tiger Lake goes under the 11xxx naming. It has already launched for laptops, but I think it's a paper launch; the laptops have yet to hit shelves.

I would generally agree with your assessment, but personally, for RDR2 in particular, I would sacrifice SO much just to hit that sweet 4K spot, because the game is otherwise so blurry that it bothers me a lot. It's too bad their MSAA implementation is broken; it would help a bunch.

I'm more interested in Intel's test configurations. They are notorious for producing garbage benchmarks. It'll be interesting to see the thermal benchmarks.

I'm just stoked for Zen 3. Surely AMD must bring the latency down this time, and up the frequency? That will all but eliminate Intel's last selling point. I have come very close to switching back to Intel a few times with the 10900K, when I see it sometimes holding a 20+ fps advantage, but I have faith AMD will really bridge the gap this coming gen.

Well, this is a fun little thing when you consider how AMD's RT implementation is supposed to work: shaders can do RT calculations instead of rasterisation when needed.

If you're not using RT, then you get all the shaders doing rasterisation. If you are doing RT, then a portion of the shaders switch over to RT "mode", so you have a chunk of transistors doing rasterisation and a chunk of transistors doing RT. That's like Turing and Ampere; the difference is that Turing and Ampere can't repurpose their RT cores when they're not in use. So imagine, then, that instead of AMD having smaller dies because there are no dedicated RT cores, they increase the die size to have extra shaders.

You're always using 100% of the die, and if you're not doing RT then you get some extra oomph because there's more shaders to play with.

Fair enough

However, and I'm not being a #### here, a couple of things.

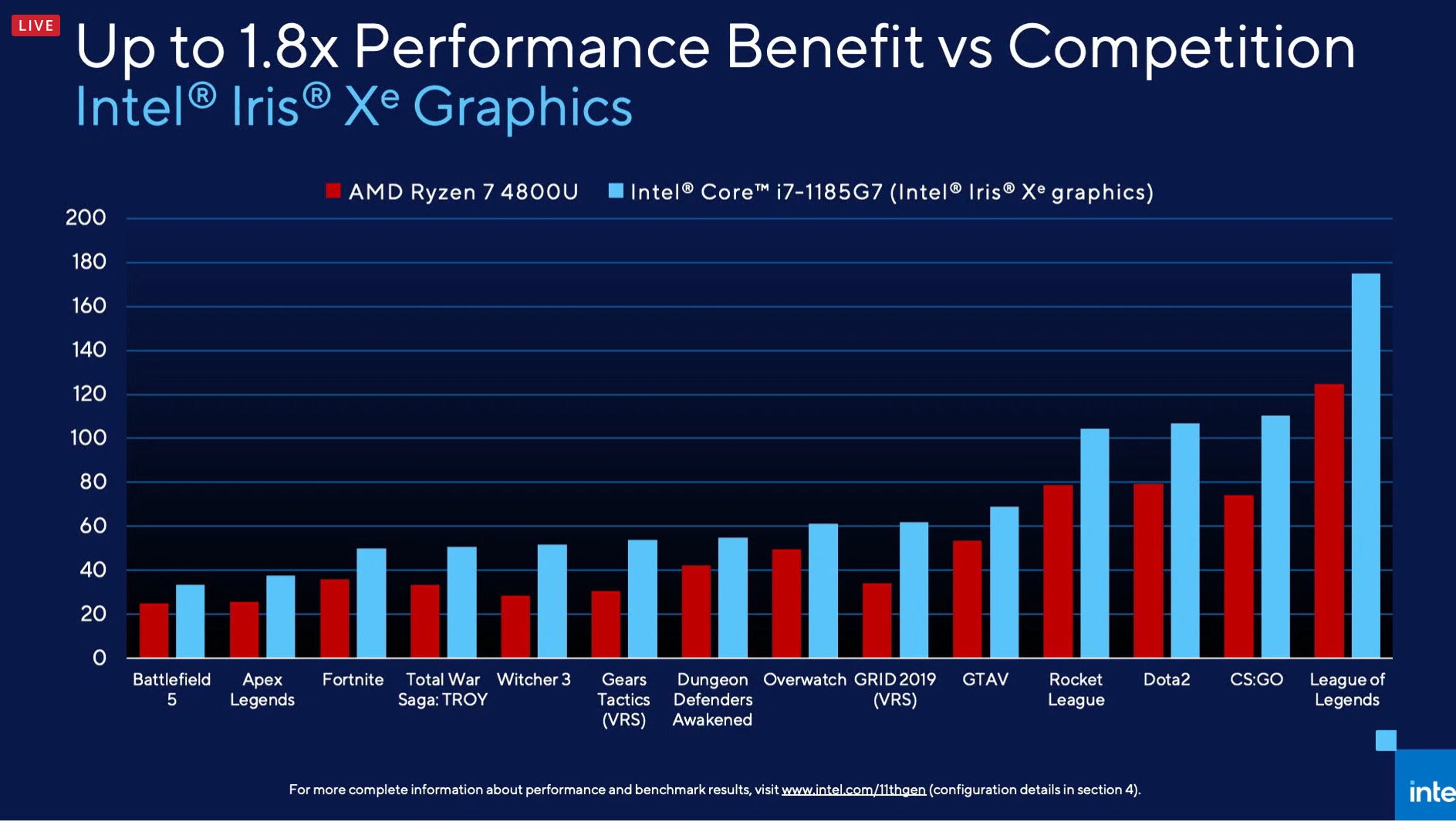

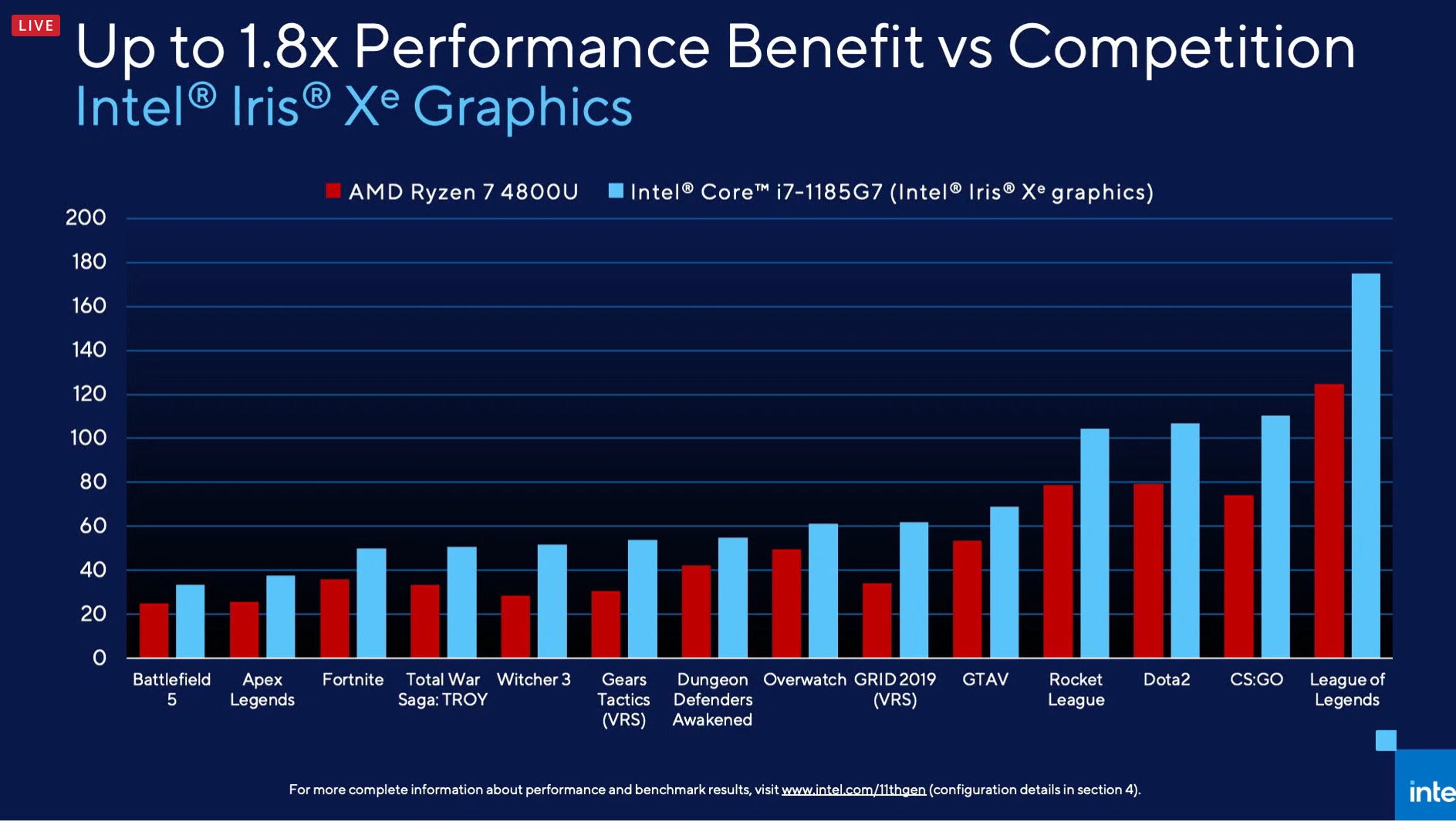

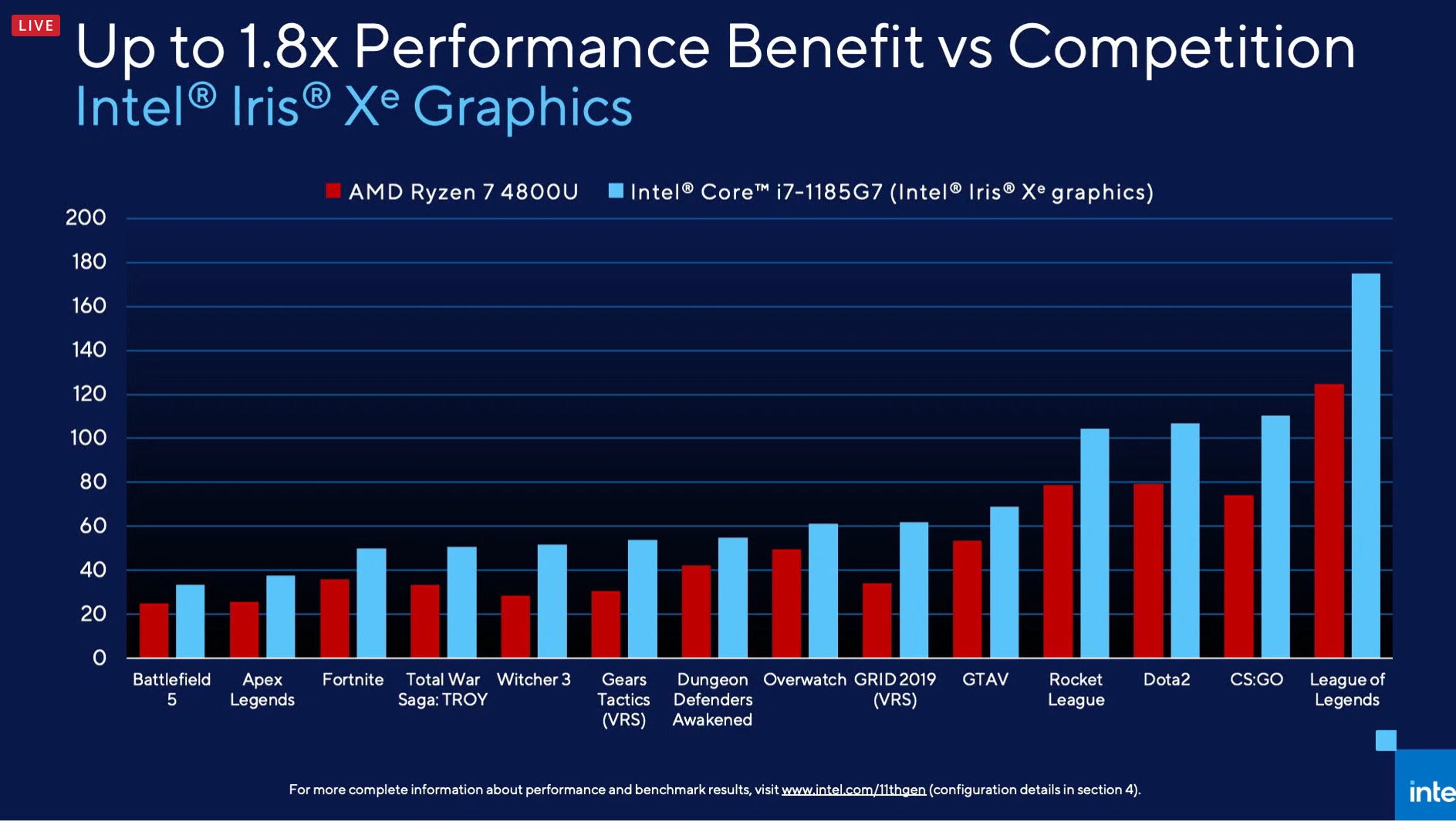

This is an Intel marketing slide, so don't put too much faith in it. But mainly, the 4800U iGPU is pre-RDNA: it's old GCN and it's 8 CUs. Because AMD have had zero competition in iGPUs, they have literally been shoving the same junk-level GPU cores into their chips for years. Believe me, many of us have been watching AMD recycle the same iGPU over and over and over again for years, crying, "When are they going to put a proper GPU in their chips?"

When they stop putting junk in their own chips, they will make everything on this slide look like what it actually is: junk.

AMD are rumoured to put proper RDNA2 CUs in their APUs next year, with DDR5.

I wonder if AMD are looking to deliver a one-two punch by showcasing their new GPUs in a system running their new CPUs.

I'm just stoked for Zen 3. Surely AMD must bring the latency down this time, and up the frequency?