Soldato

- Joined

- 17 Aug 2003

- Posts

- 20,160

- Location

- Woburn Sand Dunes

It's broken because it won't scale? Where did you read that?



A 256-bit GDDR6 bus means only 512 GB/s of bandwidth (with 16 Gbps memory). This is, again, mid-range performance.
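The 512 GB/s figure is just bus width times per-pin data rate. A minimal sketch, assuming 16 Gbps GDDR6 (the leak only gives the 256-bit bus width, so both speeds below are assumptions):

```python
def gddr_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes moved per transfer times per-pin rate."""
    bytes_per_transfer = bus_width_bits / 8  # a 256-bit bus moves 32 bytes per transfer
    return bytes_per_transfer * data_rate_gbps


# Assumed per-pin data rates; not stated in the leak.
for rate in (14.0, 16.0):
    print(f"256-bit @ {rate:g} Gbps -> {gddr_bandwidth_gbs(256, rate):g} GB/s")
```

With slower 14 Gbps chips the same bus would drop to 448 GB/s.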

If they offer it cheaply and with low power consumption, though, it may sit right next to the RTX 3070, or at most the RTX 3080 with very well-optimised software.

I think what's happening here is called "strategy". AMD has a marginal share of the PC GPU market, which causes some uncertainty in capacity allocation, so a low-risk approach like mass-manufacturing mid-range cards at competitive prices makes sense.

Kopite provided a lot of decent 30X0 info, so it would be unwise to dismiss it out of hand.

Kopite says the leaked card is Navi 21 based:

https://twitter.com/kopite7kimi/status/1303890583123968000

Also, Navi 21 might not be 505 mm², but "much" smaller:

https://twitter.com/KittyYYuko/status/1303986125225095169

https://twitter.com/comp_rd/status/1303986226546880512

.......with your 160CU 420W+ GPUs

It is, I am trying to keep myself grounded, honest....


Some info here:

https://www.igorslab.de/en/chip-is-...-dispersal-at-the-force-rtx-3080-andrtx-3090/

As far as I know, Nvidia buys working dies and pays only for those, nothing else.

So, in a way, yields won't be their concern, only Samsung's.

It's a good deal: they couldn't get TSMC 7nm, so they went with Samsung 8nm and got a low-price deal.
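To illustrate why paying per working die moves the yield risk onto the foundry, here is a sketch with entirely hypothetical numbers (the wafer price, dies per wafer, per-die price and yields are made up for illustration and are not Samsung's or Nvidia's actual terms):

```python
def cost_per_good_die_wafer_pricing(wafer_price: float, dies_per_wafer: int,
                                    yield_rate: float) -> float:
    """Buyer pays for the whole wafer, so bad dies raise the cost of each good one."""
    good_dies = dies_per_wafer * yield_rate
    return wafer_price / good_dies


def cost_per_good_die_die_pricing(price_per_good_die: float, yield_rate: float) -> float:
    """Buyer pays only for working dies; yield_rate is deliberately unused,
    because under this arrangement the foundry absorbs the cost of bad dies."""
    return price_per_good_die


# Hypothetical figures, for illustration only.
WAFER_PRICE = 6000.0
DIES_PER_WAFER = 80
PRICE_PER_GOOD_DIE = 100.0

for y in (0.5, 0.75):
    per_wafer = cost_per_good_die_wafer_pricing(WAFER_PRICE, DIES_PER_WAFER, y)
    per_die = cost_per_good_die_die_pricing(PRICE_PER_GOOD_DIE, y)
    print(f"yield {y:.0%}: wafer pricing {per_wafer:.2f}, per-good-die pricing {per_die:.2f}")
```

Under wafer pricing the buyer's cost per good die swings with yield; under per-good-die pricing it stays flat.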

Well, by that logic Intel is the biggest GPU manufacturer....

.......with your 160CU 420W+ GPUs

No different with TDP and heat, either. AMD make a card that requires 300 W and the usual Nvidiots come out mocking the hell out of it, finding every meme possible playing on the power and heat issues and calling it a disaster. Nvidia bring out a 350 W card at extortionate pricing and those same Nvidiots get the tissues out, fapping over how incredible the card will be and how much power it will use. All of a sudden the electric bill isn't an issue.

That's a week old.


Count the CUs in this. It might be real, it might be fake, or it might be real and AMD are just not counting dual-CU shaders as 2 CUs, like Nvidia do.

Whatever it is, this is what I was talking about.

- 4 Shader Engines
- 2 Shader Arrays in each Engine
- 10 Shaders in each Array
- 2 CUs in each Shader

= 160 CUs
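The count above is just the product of each level of the hierarchy. A minimal sketch of that arithmetic, using the poster's reading of the leaked diagram (the dictionary keys are illustrative names, not AMD terminology):

```python
from math import prod

# Hierarchy as read from the leaked diagram (the poster's interpretation).
hierarchy = {
    "shader_engines": 4,
    "arrays_per_engine": 2,
    "shaders_per_array": 10,
    "cus_per_shader": 2,
}

total_cus = prod(hierarchy.values())  # 4 * 2 * 10 * 2
print(total_cus)  # 160
```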

This is a completely bizarre post. Those memes originally came out about Nvidia's FX cards, and then again with the first-generation Fermi cards.

I take it you haven't been reading the Ampere thread; there's plenty of mocking going on over there about the size of the cards and their power requirements.

And it's bizarre for another reason: no enthusiast cares overly much about power consumption as long as the performance is there and the cooler can handle it relatively quietly. The problem with AMD for the past several launches is that the reference coolers have been terrible, the power consumption has been very high and the performance hasn't matched the power use. Hence the mocking.

If the Ampere cards perform worse than AMD's RDNA 2 cards and use more power, then there will be a lot more mocking of Ampere. But if it turns out that AMD's cards use more power just to be slightly behind Nvidia's performance, then, yeah, you will see more memes about AMD.

I'll say it again.

The one thing that no one got right about Ampere was the CUDA core count, because no one knew Nvidia was going to release a dual-CUDA-core shader. That is how the 3080 went from being leaked as a 4352-CUDA-core card to being an 8704-CUDA-core card (it's 2x). A CUDA core is what AMD call a CU.
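The 4352 to 8704 jump can be checked from the RTX 3080's 68 SMs. Turing-style SMs have 64 FP32 lanes, while Ampere's GA102 SMs have 128, because the second datapath in each SM partition can run either FP32 or INT32. A minimal sketch (the 68 SMs and per-SM lane counts are published Nvidia figures, not from this thread; treating the leak as "68 Turing-style SMs" is an assumption):

```python
RTX_3080_SMS = 68

FP32_LANES_PER_SM_TURING_STYLE = 64  # 4 partitions x 16 FP32 lanes
FP32_LANES_PER_SM_AMPERE = 128       # 4 partitions x (16 FP32 + 16 FP32/INT32)

leaked_count = RTX_3080_SMS * FP32_LANES_PER_SM_TURING_STYLE
actual_count = RTX_3080_SMS * FP32_LANES_PER_SM_AMPERE

print(leaked_count, actual_count, actual_count // leaked_count)  # 4352 8704 2
```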

It's exactly the same thing. This slide shows a doubling of CUs. Ignore the blue tab on the side (I suspect that is someone's interpretation) and count the CUs in the diagram; they're written in the shader arrays to make it easy for you. 20 CUs in each array with 8 arrays: 8 × 20 = 160.

LOOK at the diagram, not the blue tab on the side.

I probably need to expand on this and own up to my own misunderstanding of what makes up a Shader.

This block diagram shows 5120 shaders, not 10240 as I said before. Compute cores are inside shaders, not the other way round, as I said before.

So it's 160 compute units across 5120 shaders, not 5120 shaders across 80 compute units; these shaders are 'dual-core' shaders vs single-core shaders.
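The two readings imply different numbers of shaders per CU. For reference, an RDNA 1 CU holds 64 stream processors (the 5700 XT has 40 CUs and 2560 stream processors). A minimal sketch of the arithmetic, where `shaders_per_cu` is a hypothetical helper:

```python
SHADERS = 5120  # count read from the block diagram


def shaders_per_cu(shaders: int, cus: int) -> int:
    """Split a shader total evenly across CUs (hypothetical helper for illustration)."""
    if shaders % cus:
        raise ValueError("shader count does not divide evenly across CUs")
    return shaders // cus


for cus in (80, 160):
    print(f"{SHADERS} shaders / {cus} CUs = {shaders_per_cu(SHADERS, cus)} shaders per CU")
```

The 80-CU reading keeps RDNA's 64 per CU, while the 160-CU reading implies 32 per CU.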

The compute capability of this GPU will quadruple over the 5700 XT, since it has 4x the compute cores. BUT, just as this doesn't translate to gaming performance in Ampere vs Turing, it will not do so for RDNA 2 vs RDNA either. Each shader will be better in gaming, but by how much is anyone's guess; if Ampere is anything to go by, they are "a bit better".
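The "4x" depends on which unit is counted. A minimal sketch comparing both readings of the leak against the 5700 XT (40 CUs, 2560 stream processors; the leak figures are the ones discussed in this thread, not confirmed specs):

```python
RX_5700_XT = {"cus": 40, "shaders": 2560}
LEAK_160CU_READING = {"cus": 160, "shaders": 5120}
LEAK_80CU_READING = {"cus": 80, "shaders": 5120}


def scaling(new: dict, old: dict) -> dict:
    """Ratio of each unit count in `new` relative to `old`."""
    return {key: new[key] / old[key] for key in old}


print("160-CU reading:", scaling(LEAK_160CU_READING, RX_5700_XT))
print("80-CU reading: ", scaling(LEAK_80CU_READING, RX_5700_XT))
```

Only the CU count quadruples under the 160-CU reading; the shader count doubles either way, and theoretical FP32 throughput follows shaders times clock.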

Edit: I'm not an expert and this is how my understanding has evolved. I don't need to be an expert to count tho....

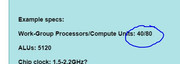

From my limited research, they call one single-precision MAD ALU a shader core, so one CU or CUDA core contains "n" such ALUs; they will make this known beforehand. Another shortcut is to just divide GFLOPS by GHz and then halve it (each MAD counts as two floating-point operations).
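The shortcut works because peak FP32 GFLOPS = shaders × 2 (one multiply-add per clock) × boost clock in GHz. A minimal round-trip sketch using the RTX 3080 (8704 shaders, 1.71 GHz boost) and RX 5700 XT (2560 shaders, 1.905 GHz boost); real quoted TFLOPS are rounded, so the inverse only lands near a whole number:

```python
def fp32_gflops(shaders: int, boost_ghz: float) -> float:
    """Peak FP32 rate: each shader retires one multiply-add (2 FLOPs) per clock."""
    return shaders * 2 * boost_ghz


def shaders_from_gflops(gflops: float, boost_ghz: float) -> int:
    """The shortcut from the post: divide GFLOPS by GHz, then halve."""
    return round(gflops / boost_ghz / 2)


for name, shaders, ghz in (("RTX 3080", 8704, 1.71), ("RX 5700 XT", 2560, 1.905)):
    gflops = fp32_gflops(shaders, ghz)
    print(f"{name}: {gflops / 1000:.2f} TFLOPS -> {shaders_from_gflops(gflops, ghz)} shaders")
```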

These ALUs don't translate into real-world performance because the bottleneck is somewhere else.