What I also find hilarious is that 6-core CPUs (YES, the 7600X included, and also the 5800X3D) kinda struggle to break 100 fps. The 12600K with its useless E-cores easily flies by. Picture that. A brand new late 2022 CPU that cost 350 at launch gets wiped by a 2 year old i5.


NVIDIA 4000 Series

- Thread starter MrClippy


I'm not sure about RE4, but in The Last of Us, if you turn the settings down then the graphics become worse than a console, and I dunno about you but some people get a little angsty when their $1000 GPU runs and looks worse than a console.

Does it really look worse though? In RE4 the 8GB cards can run higher texture settings than the PS5, as seen in the DF video. Consoles are not doing very high/ultra textures; at best it's the high setting on a low upscaled resolution.

If someone paid $1000 for a 3070 that's not my problem.


What I love about this video is that it clearly demonstrates the nvidia fine wine.

A 3080 @ 1440p or 4k high basically matches a 6800xt @ 1440p or 4k MEDIUM.

Sorry, but I find that extremely hilarious considering the title of the video is about Nvidia's planned obsolescence.

The point of it was rather that an RTX 3070 Ti matches an RX 6600 XT at 1080p, 1440p and 4K.

They are all just unplayable, but the 3070 Ti costs 2x as much; it's meant to be a much higher-end card.


Then play at 1440p HIGH, where the normal 3070 is 50% faster than the 6600 XT? That's why graphics settings exist.

Does AMD even matter anymore?

This place, when a PC game releases, breaks Ampere=all the NV users start ****housing each other!

This forum is nothing. Step outside to see the asylum.

The CPU performance isn't bad per se, it just needs a lot of cores. 6 cores of Zen 4 are out of the picture for 100+ FPS. You need E-cores or something with over 8 cores. Hogwarts is way, way worse; every CPU struggles there with rock-bottom utilization.

Well said Alex, very well said.

The 6800 XT and 6900 XT are only 16GB. So I look forward to those posts.

Why on earth does a 3080 need 20GB.

AMD probably had it right with 12GB and 16GB. But at some point these cards stop being 4K cards (for newer titles) anyway.


Maybe TLOU PC performance will increase PS5 sales, so win win Sony?!

Yeah TLOU can wait another 5 years and when it's on Gamepass. I'm more interested in the Starfield hype train and expect zero problems on day one cough.

It's going to be as polished as Fallout 76.

The 6800 XT and 6900 XT are only 16GB. So I look forward to those posts.

Why on earth does a 3080 need 20GB.

AMD probably had it right with 12GB and 16GB. But at some point these cards stop being 4K cards (for newer titles) anyway.

Because they like to play to their following, which mostly consists of AMD fans, who just so happen to be the most vocal, thus more clicks/views for them (which is fair enough given they make their living from YT). VRAM is basically the only thing that AMD have over the competing Nvidia GPUs, hence why it is such a hot topic. Look back at the Fury X days and VRAM didn't matter at all.

Hilarious though that the 3080 should be 20GB, yet its competing GPU only has 16GB of VRAM.... Especially when it's been shown that AMD GPUs aren't as efficient in their VRAM utilisation as Nvidia GPUs across several games.

AMD probably had it right with 12GB and 16GB. But at some point these cards stop being 4K cards (for newer titles) anyway.

Ding ding, all the VRAM on RDNA 2 and the 3090 is doing jack **** given they still have to drop settings and use higher presets of DLSS/FSR too, and the funniest thing is still the fact that games widely regarded as broken are used to score points, as Alex stated.

And best of all, even sites like ComputerBase and PC Games Hardware state that there are still problems on the best of the best PC hardware.

The people who keep going on about it being Nvidia's fault because of them being stingy on VRAM are the real problem for the PC gaming scene, as they are accepting what are quite frankly obvious lazy/poor ports and thinking it is perfectly acceptable that the only way to max games out now is by spending ££££.....

But given most people don't work in the development industry, they have no clue how things actually work. Soon we'll have 4090/7900xtx owners upgrading to the 5090/8900xtx just to be able to hit 60+ fps with max settings in the next lot of titles....


The latest games have made me realize, you need a 4090 to max out 1440p, and a 4080 to max out 1080p. No other card is capable or relevant, the rest of nvidia cards are lacking vram, amd cards are severely lacking RT performance. And god forbid you have a 4k monitor, there is no card capable of achieving 4k ultra on all titles..

Either that, or recent games are really, really broken


It's the former, I reckon.


I'll take "recent games are really, really broken" for 2 cents.


Do you mean all those people who have been proven correct over time and didn't buy WAY overpriced 8GB and 10GB Nvidia cards which are now choking badly on VRAM?

Those people? They're the problem? It's much more like, as Sly Stallone used to say in Cobra, "Skimpy VRAM is the disease, meet the cure".

When a card chokes, does it matter if it chokes on VRAM or just pure performance?

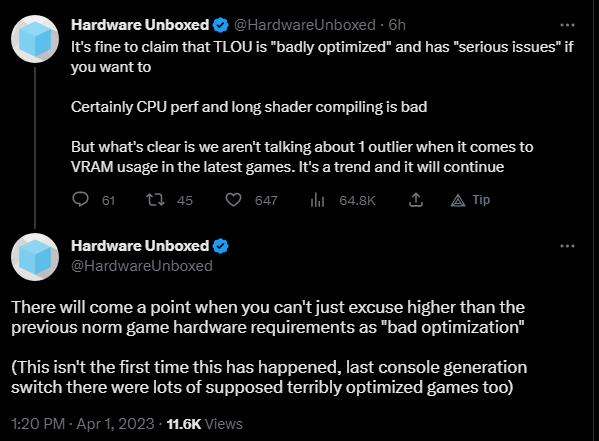

According to Hardware Unboxed's TLOU test, I kid you not, a 3080 @ 1440p high or 4K high basically matches the 6800 XT @ 1440p medium or 4K medium. The 3080 basically offers similar fps at higher quality settings. Who in their right mind would call that choking?

I'll take "recent games are really, really broken" for 2 cents.

I know it sounds like a jest but it's not, it's basically the reality. If you wanna actually max out ALL games at native 1440p, you need a 4090. Nothing else cuts it. For 1080p, you can get away with a 4080 - maaaybe a 4070 Ti, but you'd be cutting it close. No other card is even in the competition here.

But here we are complaining about the 2-3 year old 3070s and 3080s.


I don't know why new games just don't lock the texture setting based on your vram if it's an issue? Didn't DOOM do that back in 2016?

Anyway 10GB is still fine for me until the 5080, RE4 is the first game I've seen an issue which I resolved myself in 10 minutes on the demo playing with the settings (RT off).

Or just sell cards with adequate VRAM in the first place maybe?