
Indeed. I would be incredibly surprised if AMD started kitting out a $199 card with GDDR5X.

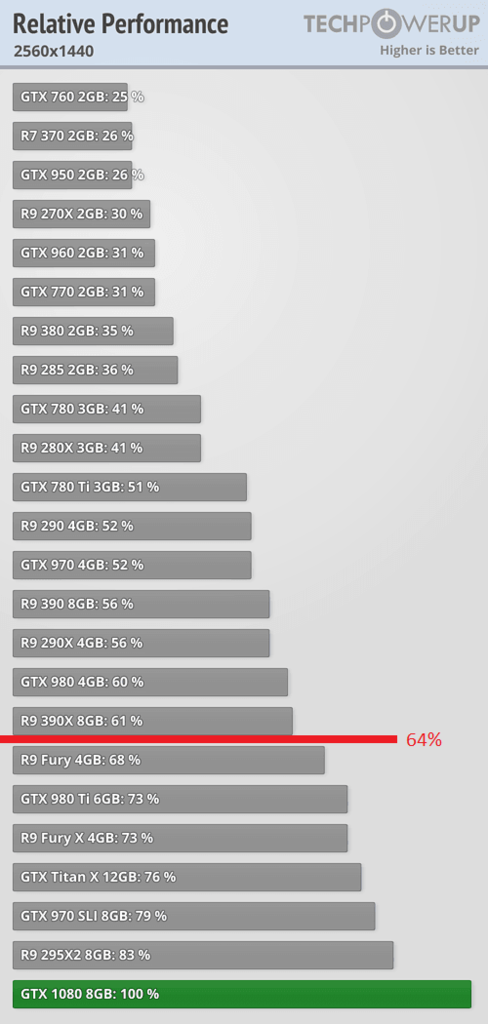

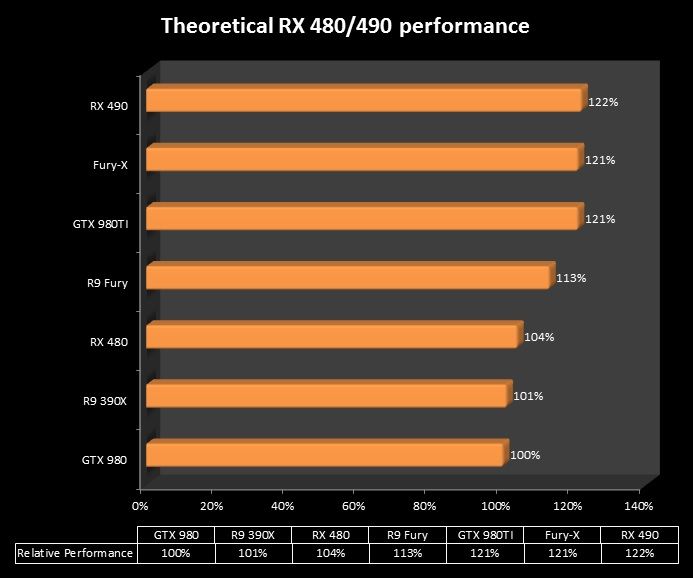

Also, if it only offers 390/390X performance, it isn't going to need more bandwidth anyway; 8 GHz GDDR5 will be fine.

We know the $200 card (RX 480) has 8 GHz GDDR5 (not GDDR5X).
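For a rough sense of what "8 GHz GDDR5" works out to: the 8 GHz figure is the effective per-pin data rate (8 Gbps), and theoretical peak bandwidth is that rate times the bus width, divided by 8 to convert bits to bytes. The 256-bit bus below is an assumption for illustration, not something stated above, and `peak_bandwidth_gbs` is a hypothetical helper.

```python
def peak_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (hypothetical helper).

    data_rate_gbps: effective per-pin data rate; "8 GHz" GDDR5 means 8 Gbps per pin.
    bus_width_bits: width of the memory interface in bits.
    """
    # Each pin transfers data_rate_gbps gigabits per second; divide by 8 for bytes.
    return data_rate_gbps * bus_width_bits / 8


# Assumption: a 256-bit memory bus (not given in the post).
print(peak_bandwidth_gbs(8, 256))  # 256.0
```

This is a raw peak figure only; real-world effective bandwidth also depends on things like memory compression and access patterns.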

The $300 one, who knows...
