Super TLDR is that you use machine learning to produce a better upscaled image from low-resolution source material than conventional upscaling could achieve. In very simple terms, the algorithm learns what stuff should look like and uses that knowledge to reconstruct high-resolution images from lower-resolution ones. Imagine you were good at art (maybe you are lol) and were shown a low-res picture of a person's face: using your learned knowledge of what people look like, you could draw a much higher-fidelity version by filling in the missing detail yourself, and it would probably look pretty accurate.
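To make "learns what stuff should look like" a bit more concrete, here's a toy 1D sketch. It is not DLSS or any real product: instead of a neural network it fits two tiny linear filters by least squares from (low-res, high-res) training pairs, then uses them to guess the missing samples of a signal it has never seen. All function names are made up for illustration, and the training data (random sine waves) is an assumption standing in for real images.

```python
import math
import random


def downsample(hi):
    """Average each pair of samples: a 2x 'low-res render' of a 1D signal."""
    return [(hi[2 * i] + hi[2 * i + 1]) / 2 for i in range(len(hi) // 2)]


def patches(lo):
    """3-sample neighbourhood around each interior low-res sample."""
    return [(lo[i - 1], lo[i], lo[i + 1]) for i in range(1, len(lo) - 1)]


def solve3(a, b):
    """Solve the 3x3 system a @ x = b (Gaussian elimination, partial pivoting)."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0] * 3
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def train(signals):
    """'Learn' two 3-tap filters (left and right output sample) by least squares
    over (low-res patch, true high-res sample) pairs."""
    weights = []
    for phase in (0, 1):
        ata = [[0.0] * 3 for _ in range(3)]
        atb = [0.0] * 3
        for hi in signals:
            for i, p in enumerate(patches(downsample(hi)), start=1):
                target = hi[2 * i + phase]
                for r in range(3):
                    atb[r] += p[r] * target
                    for c in range(3):
                        ata[r][c] += p[r] * p[c]
        weights.append(solve3(ata, atb))
    return weights


def upscale(lo, weights):
    """Each interior low-res sample becomes two predicted high-res samples."""
    return [sum(w * v for w, v in zip(wts, p)) for p in patches(lo) for wts in weights]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    random.seed(0)
    # Training set: random sine waves standing in for "what stuff looks like".
    train_set = [
        [math.sin(f * k + ph) for k in range(64)]
        for f, ph in ((random.uniform(0.05, 0.4), random.uniform(0, 6.28)) for _ in range(20))
    ]
    w = train(train_set)
    hi = [math.sin(0.23 * k + 1.0) for k in range(64)]  # unseen test signal
    lo = downsample(hi)
    ref = hi[2 : 2 * len(lo) - 2]  # high-res samples covered by the interior patches
    nearest = [v for p in patches(lo) for v in (p[1], p[1])]  # plain pixel duplication
    print("nearest-neighbour MSE:", mse(nearest, ref))
    print("learned-filter MSE:  ", mse(upscale(lo, w), ref))
```

The learned filters beat plain pixel duplication because they've "seen" how smooth signals behave; real ML upscalers do the same thing with far bigger models and real image data.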

The general idea is that you can play at something that looks an awful lot like native 4K while only actually rendering at 1440p, which makes high frame rates much easier to hit, especially once you factor in the relatively poor ray tracing performance of current GPUs.
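Quick back-of-the-envelope on why that helps: count the pixels. This assumes 16:9 resolutions (3840x2160 for 4K, 2560x1440 for 1440p) and treats per-pixel shading cost as roughly constant, which is a simplification since not every part of a frame's cost scales with resolution.

```python
def pixel_count(width, height):
    return width * height


native_4k = pixel_count(3840, 2160)       # pixels in a native 4K frame
internal_1440p = pixel_count(2560, 1440)  # pixels actually rendered at 1440p

ratio = native_4k / internal_1440p
print(f"4K has {ratio:.2f}x the pixels of 1440p")               # 4K has 2.25x the pixels of 1440p
print(f"per-axis scale factor: {3840 / 2560:.2f}x")             # per-axis scale factor: 1.50x
print(f"share of 4K pixels rendered: {1 / ratio:.0%}")          # share of 4K pixels rendered: 44%
```

So the GPU shades well under half the pixels and the upscaler has to make up the rest.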

Less TLDR is that DLSS is Nvidia's own proprietary take on AI-based upscaling. The original DLSS required training the network per game on very high resolution (16K, I believe) reference images from the game in question; the AI then learned to take the sparser data from lower-resolution render targets and boost the resolution by filling in the blanks. DLSS 2.0 significantly changed how this works: it uses a single generic neural network trained across many games, which ditches the per-game training requirement and instead works from temporal data (history from previous frames plus motion vectors), much like TAA does. It still needs to be implemented per game at this time, but that is nowhere near as resource intensive.

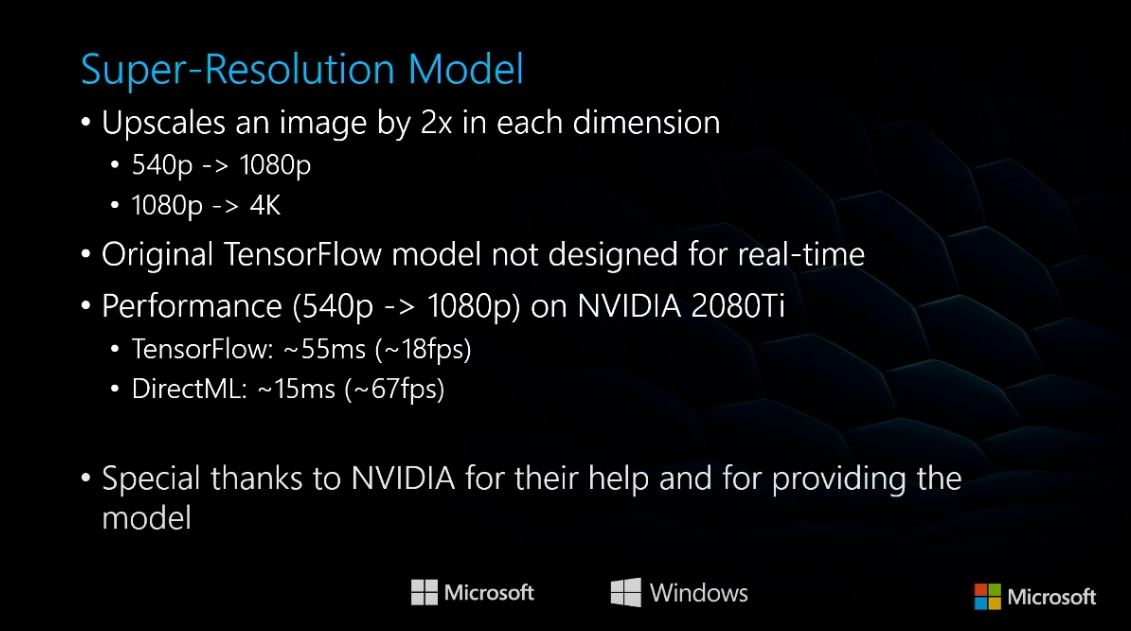
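Nvidia's network itself isn't public, but you can get a feel for the kind of inputs DLSS 2.0 works with (jittered low-res frames plus motion vectors) from a hand-written temporal accumulation loop of the sort TAA-style upsampling uses. This is a rough sketch under heavy assumptions: a 1D "screen", a static scene, a camera scrolling at a constant speed, and made-up function names. Real TAA also needs history rejection/clamping for disocclusions and moving content, which is exactly the hand-tuned part DLSS 2.0 is generally described as replacing with a trained network.

```python
import math


def render(scene, frame, width, jitter, scroll):
    """Hypothetical half-resolution renderer for a 1D 'screen' of `width` pixels.

    Only every 2nd high-res pixel is shaded, starting at `jitter` (0 or 1).
    The camera scrolls `scroll` pixels per frame, so screen pixel x shows
    world position x + frame * scroll. Returns {screen_x: value}.
    """
    return {x: scene(x + frame * scroll) for x in range(jitter, width, 2)}


def accumulate(history, samples, scroll, alpha=0.1):
    """One TAA-style step: reproject last frame's high-res history using the
    (constant) motion vector, then blend in this frame's sparse samples."""
    width = len(history)
    # Motion vector: what is at pixel x now was at pixel x + scroll last frame.
    reproj = [history[x + scroll] if 0 <= x + scroll < width else None for x in range(width)]
    out = []
    for x in range(width):
        old, new = reproj[x], samples.get(x)
        if new is None:
            out.append(old)  # not shaded this frame: keep reprojected history (may be None)
        elif old is None:
            out.append(new)  # first sample / disoccluded: no history to blend with
        else:
            out.append(alpha * new + (1 - alpha) * old)  # exponential moving average
    return out


if __name__ == "__main__":
    scene = lambda pos: math.sin(0.3 * pos)  # hypothetical static world
    width, scroll = 16, 2
    history = [None] * width
    for frame in range(4):
        jitter = frame % 2  # alternate which half of the pixels gets shaded
        history = accumulate(history, render(scene, frame, width, jitter, scroll), scroll)
    print(history)  # pixels that just scrolled in at the right edge may still be None
```

After a couple of frames every pixel that stayed on screen holds a full-resolution value, even though each frame only shaded half of them; a real implementation would fill the leftover edge pixels spatially.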

As for DirectML super resolution, I stand to be corrected, but I don't believe we know much yet about the nuts and bolts of how it works. One could take a stab at it being some sort of generic, TAA-based neural network solution too. DirectML itself, though, is much broader than super resolution/DLSS: it's a general hardware-accelerated machine learning API built on DirectX 12, aimed at enabling a wider range of ML-based systems for gaming, which is pretty cool.

There are others too: FRL (Facebook Reality Labs) is also developing machine learning upsampling techniques, and there are open-source variants using GAN-style training.
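For the GAN-style variants, the rough idea is that the upscaler is trained against a second network that tries to tell its output apart from real high-res images. One common way to write it, with $x$ as the low-res input, $y$ as the matching real high-res image, $G$ as the upscaler and $D$ as the discriminator (notation chosen here for illustration), is the standard GAN min-max objective, with the generator usually trained on the non-saturating $-\log D(G(x))$ term plus a "content" loss weighted by a hyperparameter $\lambda$, as in SRGAN-style setups:

$$
\begin{aligned}
&\min_G \max_D \; \mathbb{E}_{y}\left[\log D(y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D(G(x))\right)\right] \\
&\mathcal{L}_G = \underbrace{\lVert G(x) - y \rVert^2}_{\text{content}} \; - \; \lambda \log D(G(x))
\end{aligned}
$$

The content term keeps the output faithful to the source, while the adversarial term pushes it toward detail that "looks real", which is why GAN upscalers tend to produce sharper but occasionally invented textures.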