Soldato

- Joined: 30 Jul 2012
- Posts: 2,775

Something had better land this June; I need to buy.

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

I was disappointed AMD didn't announce the new high-end card. I'll make do with my 1070 until then, unless a crazy good deal comes along.

Whatever happens, Nvidia will want to release before the next-gen consoles. If they come out and are less powerful than, say, a 2070 Super, I can see a lot of people jumping ship.

Speaking of consoles, I remember when they (Xbox One/PS4) promised 1080p/60fps. Now we all know the best they managed in most titles was 1080p/30fps or 720p/60fps. With the new consoles I'm sure some titles will run at 4K/60fps, but only the undemanding or ported older games; within a year or two you'll see 1080p/60fps being the goal, with upscaling to 4K.

Even if Ampere isn't that great, it'll still decimate anything the consoles run; it's just the pricing that might be an issue. Hopefully RTX 2080 Ti performance for £499 is possible. Maybe if AMD can bring some competition back into this market, we'll start seeing massive gains again.


PC hardware will always be faster than consoles; it's guaranteed, like the sky being blue. Except in the UK, where it's mostly grey, but blue like the sea.

Very true, but at this point AMD still hasn't had anything to compete at the top end for years. If they manage to pull a Ryzen in the GPU sector, you'll see prices freefall; if someone had said in 2015 that you could get an 8-core CPU for under £100 on a £40 motherboard, you'd have laughed! While the R9 290X was a loud illegitimate child, at least it was better than a 970 and able to get on par with the 980.

The RTX 2080 released at £750 with GTX 1080 Ti-level performance. I very much doubt we will see RTX 2080 Ti-level performance for £500 in the next generation of GPUs; this is Nvidia....


https://youtu.be/0G5oe-Cc994?t=251

Snip


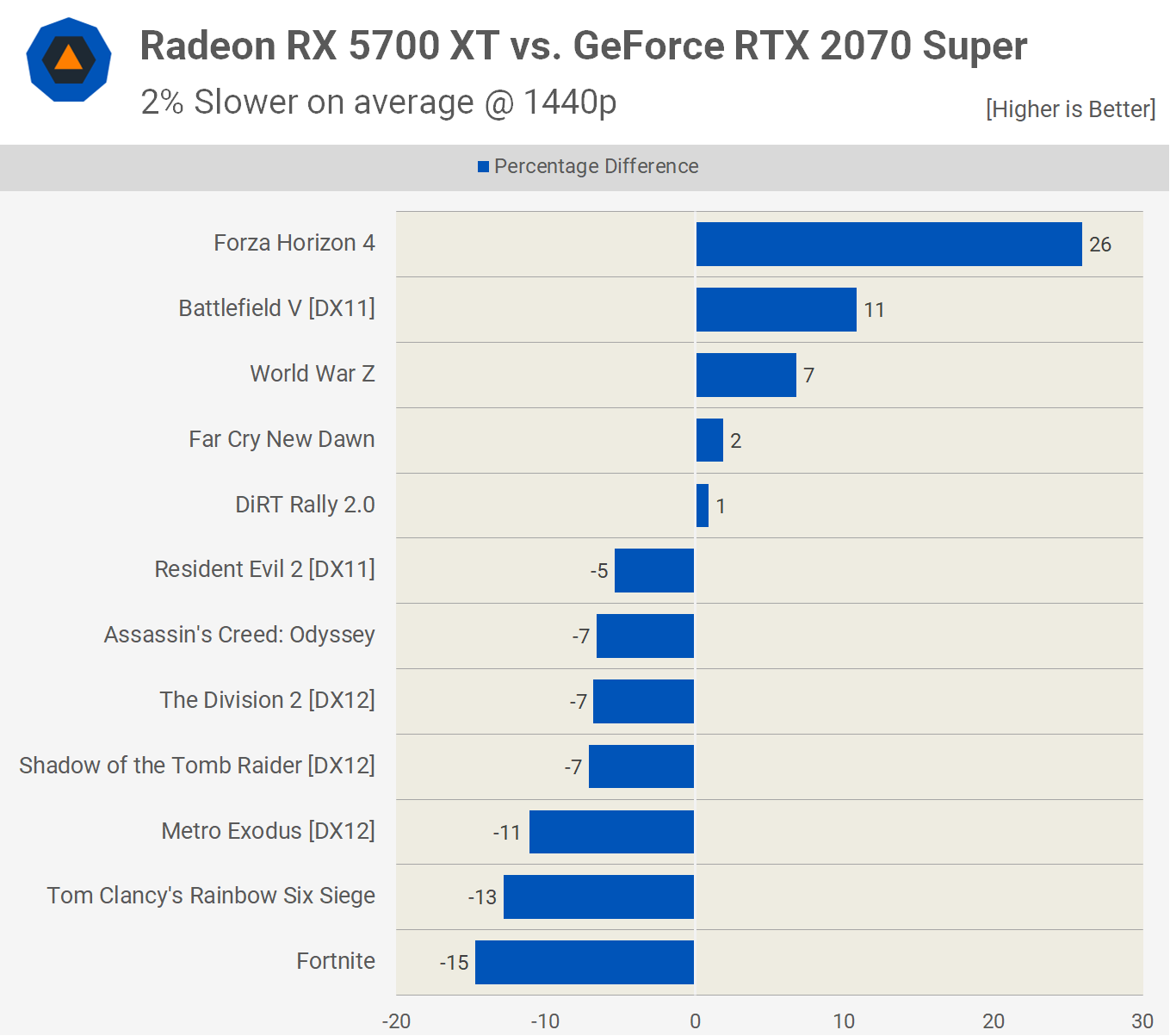

The 290X was never a true high-end card that could completely dominate Nvidia's flagship, but it was enough to give people the option of buying from a competitor without worrying too much about performance loss. Now, with the 2080 Super/2080 Ti, AMD has nothing to compete against them; even the 5700 XT (ignoring the driver problems) struggles to get near the 2070 Super, a gap the 290 would have closed back in 2014 for less money.


The 290X was able to rival the 980 while being cheaper. It was priced to compete with the 970 (which the 290X beat easily) and it could get on par with the 980, though not in every game. Pairing a 980 against a 290X now, the 290X will come out on top more often than not, because Nvidia stopped supporting their older cards with performance updates.

Who cares, that's all history and far removed from today's landscape. Even on 7nm, the best AMD manage is the Radeon VII and the 5700 XT, which are still slower than some 10-series cards. That's the sad reality, hence the utter gouging by Nvidia.