I suspect that we are in the pre-T&L days of GPUs and that RT will have to be done more cleverly. Much more cleverly.

Silicon nodes just aren't advancing enough anymore (and transistors per dollar are barely moving), whereas brute-force RT requires entry-level cards to have roughly 4090-class RT performance. Consoles are not going to dedicate that much silicon to the GPU, so a different approach will have to be found.

Maybe a mix of raster and RT, as RT currently seems too keen to abandon all the raster techniques. I'm unsure about the technicalities, but let's not forget that Nvidia's tensor cores were created for the big cash cows of AI and the data centre, and they have to justify their presence on consumer cards, hence their upscalers using them. I wouldn't be surprised if Nvidia's RT is similar: brute-forcing RT because it gives them an advantage. It is even possible that Nvidia have thought of cleverer ways of doing RT, but as with the excess tessellation, as long as the present model sells Nvidia cards, they do not care!

Nodes were moving like crazy back then, though: the Xbox 360 was 90nm at first, and the 8800GT was 65nm. The console was only ~200 million transistors, whereas G92 was 750 million. And it wasn't even that large. No idea what cost per transistor was back then, but it must have been cheap enough for Nvidia to sell a 324mm² part for $200 or so.

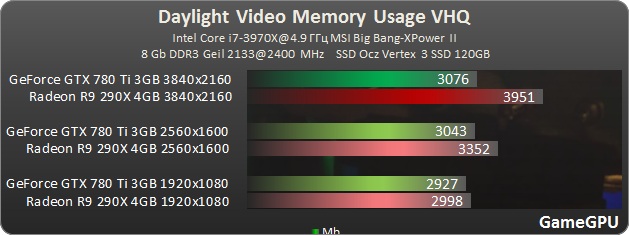

That is largely over: huge dies are expensive. What is relatively cheap is VRAM, and Nvidia (and to a far lesser extent AMD) could be far more generous with it. But in Nvidia's case, skimping is very much planned obsolescence, and their reward for that tactic is a near-90% market share.

Yet PCMR makes excuses for low VRAM amounts on £800 cards. It reminds me exactly of Apple, and people saying the low RAM and storage amounts on Apple PCs are fine.

But apparently 12GB of VRAM is now fine for 4K for the next few years, because Nvidia wants to sell you an £800 RTX4070TI. Yet none of the people defending 12GB as fine for 4K for years own a 12GB dGPU. If these people bought 12GB dGPUs to play games at 4K for the next 3~5 years, it might show some actual belief.

But the issue is that Nvidia is skimping not only on VRAM but also on the relative transistor increase per tier for their roughly 300mm² dGPUs.

So compare GA106 (just under 300mm²) and AD104 (just under 300mm²):

1.)

https://www.techpowerup.com/gpu-specs/nvidia-ga106.g966

2.)

https://www.techpowerup.com/gpu-specs/nvidia-ad104.g1013

3X the number of transistors, and a 2.4X increase in performance for a fully enabled AD104 over a slightly cut-down GA106.

Now compare GA106 to TU116:

1.)

https://www.techpowerup.com/gpu-specs/nvidia-ga106.g966

2.)

https://www.techpowerup.com/gpu-specs/nvidia-tu116.g902

Both have similar die sizes, with a nearly 2X increase in transistors. For performance, see TechPowerUp's review of the EVGA RTX 3060 XC (www.techpowerup.com).

A nearly 50% increase in performance. So there you go: it would be quite easy for Nvidia to deliver a 40% to 50% performance increase with a smaller die and still make lots of money.
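A rough way to compare the two generational jumps is to divide the performance multiplier by the transistor multiplier. This is only a back-of-the-envelope sketch using the approximate figures quoted above (3X/2.4X and ~2X/~1.5X), not measured data; the function name is made up for illustration, and the AD104 comparison is full die against a cut-down GA106, so it is not strictly like-for-like.

```python
def scaling_efficiency(transistor_multiplier: float, perf_multiplier: float) -> float:
    """Fraction of the transistor increase that shows up as performance.

    1.0 would mean performance grew exactly in line with transistor count.
    """
    if transistor_multiplier <= 0:
        raise ValueError("transistor_multiplier must be positive")
    return perf_multiplier / transistor_multiplier


# Approximate multipliers as quoted in the post (assumed, not benchmarked here).
jumps = {
    "AD104 vs GA106": (3.0, 2.4),  # ~3X transistors, ~2.4X performance
    "GA106 vs TU116": (2.0, 1.5),  # nearly 2X transistors, nearly 50% faster
}

for name, (transistors, perf) in jumps.items():
    print(f"{name}: {scaling_efficiency(transistors, perf):.2f}")
```

With these inputs both jumps land at roughly 0.75 to 0.8, i.e. in each generation most of the transistor increase turned into performance.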

This is almost mirrored by AMD. Navi 32 should be the Navi 22 replacement, and there should be another 40% increase (roughly the jump from an RX6700XT to an RX6800XT).

The reality is that BOTH are just doing enough to appear to be competing.

What I'm saying is very simple and clear: The 4070 Ti's 12 GB is enough for it to play (in all currently available games) well at a settings level commensurate with its compute capabilities.

Since most people keep cards for 3~5 years, that means the 12GB will be fine for that time at 4K with maximum settings. OK.

But oh wait... "current games" at a "settings level commensurate"...

So you're already adding get-out clauses? Despite the RTX4080 16GB having only 20% more TFLOPs than an RTX4070TI, it is nearly 30% faster at 4K and slightly over 20% faster at qHD. Weird, that. So basically that gap won't grow or change over the next few years. OK, good to hear.
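To put a number on that gap, the sketch below divides the observed speed-up by the TFLOPs ratio, using only the rough figures quoted in the post (1.20 for TFLOPs, ~1.30 at 4K, ~1.20 at qHD). It is a hypothetical helper for illustration; it only quantifies how far performance scales beyond raw compute and does not by itself say what causes the difference.

```python
def residual_scaling(perf_ratio: float, tflops_ratio: float) -> float:
    """Percentage by which the observed speed-up exceeds the TFLOPs ratio."""
    if tflops_ratio <= 0:
        raise ValueError("tflops_ratio must be positive")
    return (perf_ratio / tflops_ratio - 1.0) * 100.0


TFLOPS_RATIO = 1.20  # RTX4080 vs RTX4070TI, as quoted in the post (assumed)
observed = {"4K": 1.30, "qHD": 1.20}  # rough speed-ups quoted in the post (assumed)

for resolution, perf in observed.items():
    print(f"{resolution}: {residual_scaling(perf, TFLOPS_RATIO):+.1f}% beyond TFLOPs")
```

With these inputs the 4K result comes out at roughly +8% beyond what the TFLOPs difference alone would suggest, while qHD is roughly zero.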

But how do you know it will be OK in a game 6 months from now? Or two years from now? You don't; you surmise it might be OK.

Premium products need to deliver on premium expectations. They need to be more than fine.

Belief is not relevant, I'm talking about real results.

But you just stated a belief and a promise to everyone on this forum: since most people keep cards for 3~5 years, the 12GB will be fine for that time at 4K with maximum settings? OK.

And what should we look at, titles years from now, when the user will likely have upgraded already? I can't judge reality by hypotheticals because they're infinite.

No, people keep dGPUs for 3~5 years. Maybe you upgrade every year or two, but most don't.

But you are looking at titles years from now, because you promised 12GB of VRAM will be fine for years.

And they are, but that doesn't mean 12 GB won't be enough, especially on UE5.

But how do you know that? You're not a developer. UE4 VRAM usage went up a lot too.

Why? It's irrelevant. Nanite changes the game when it comes to VRAM usage; it can't be compared like that. Moreover, if you test the highest-quality assets in UE5 against lower-quality ones, you can see the memory differences are minimal, thanks to Nanite. So going forward, an increase in asset quality (AQ), which isn't feasible anyway, won't dramatically increase VRAM requirements.

And you can't use the past to predict the future, but I can use the present to judge the present. Besides, why should anyone care if in 4 years, let's say, a 4070 Ti user might run into a title (which he might not even care about) where it turns out he has to turn down textures a notch? In the meantime he'd have enjoyed the card for 4 years!

But that is your belief.

So again, how do you know your belief that it will be fine is right? Don't call out others for belief when you are stating nothing but belief as fact.

What happens is Nanite. You should look into it.

More belief. Maybe you should read up on game development. For someone who presents themselves as an expert, you should know that even UE4 saw a huge increase in VRAM usage over the years.

That's fine, but people should make sure their expectations align with reality though.

No, people should align their expectations with cost and stop defending low VRAM amounts and upselling. I called out AMD repeatedly for its nonsense, especially as some want to defend "inflation" as the reason an RX7900XT wasn't a £600 (or less) RX7800XT, or for Navi 32 being sold as an RX7800XT. Nvidia doesn't get a free pass.

It is ridiculous that you are defending an RTX4070TI 12GB, which has the same memory subsystem as a £500 card, and the same memory subsystem as an RTX3060 at £250, in an era of cheap VRAM.

@Joxeon has stated this many times.

What happens to people who will have enjoyed a card for 2 years when a new console launches, the baseline is still Series S, and games are planned (and take) 4+ years? My guess - nothing.

It's not one of them, we have data to prove the opposite. That you think it may in the future prove to be one of them, well, that's just pure speculation.

But all you are stating is pure speculation with no real data to prove anything. And when data disproves what you are saying, it's down to "bad devs" or "poor console ports" or something else. That you think 12GB may be fine in the future on an £800 dGPU, well, that's just pure speculation.

It's acceptable if people buy it, but my points merely revolve around whether it was better than the 7900 XT (and I've argued it is), and then whether 12 GB is enough (all our data shows that it is).

No, because you are making a promise that 12GB will be fine for years on an £800 dGPU. Not a £500 one, but one closing in on £1000.

An RTX4070 having 12GB might be OK, but not a card costing £100s more. But I now get that you really want to buy an RTX4070TI.

I remember people saying 8GB was fine on the RTX3070/RTX3070TI for years, or even 10GB on the RTX3080. Yet how is that working out? Oh yes, many people just quietly upgraded for "other reasons". How many times have these arguments happened? The same thing happens all the time.

But my own experience is that the "low VRAM is OK" crowd never shows faith in the products. They just happen to upgrade to higher-VRAM cards very quickly for "other reasons", or don't bother with them at all.

So you will say 12GB is fine, until the RTX5070 16GB is out in 18 months.

So again, I don't agree with anything you are saying. You can attempt to "address" my points, but none of your arguments really sway me, just like you never really addressed what @ICDP said. But Nvidia does not agree with you: the RTX4080 16GB is barely 20% faster and has more VRAM, and the RTX4090 has 24GB.

OFC, you can go on for the next 10 pages trying to sell the idea that 12GB is all you will ever need on a premium-priced dGPU. Just like 512MB, 1GB, 2GB, 4GB, 6GB, 8GB, etc. was all you ever needed.

You do you!
