Not if it gets cancelled!

Even with their Zen CPUs, it took three generations to get chiplets fully sorted out:

1.) Zen/Zen+ introduced scalable CPU designs built on Infinity Fabric (IF). The Threadripper and Epyc CPUs used multiple dies, but the IF's power consumption increased massively as a result.

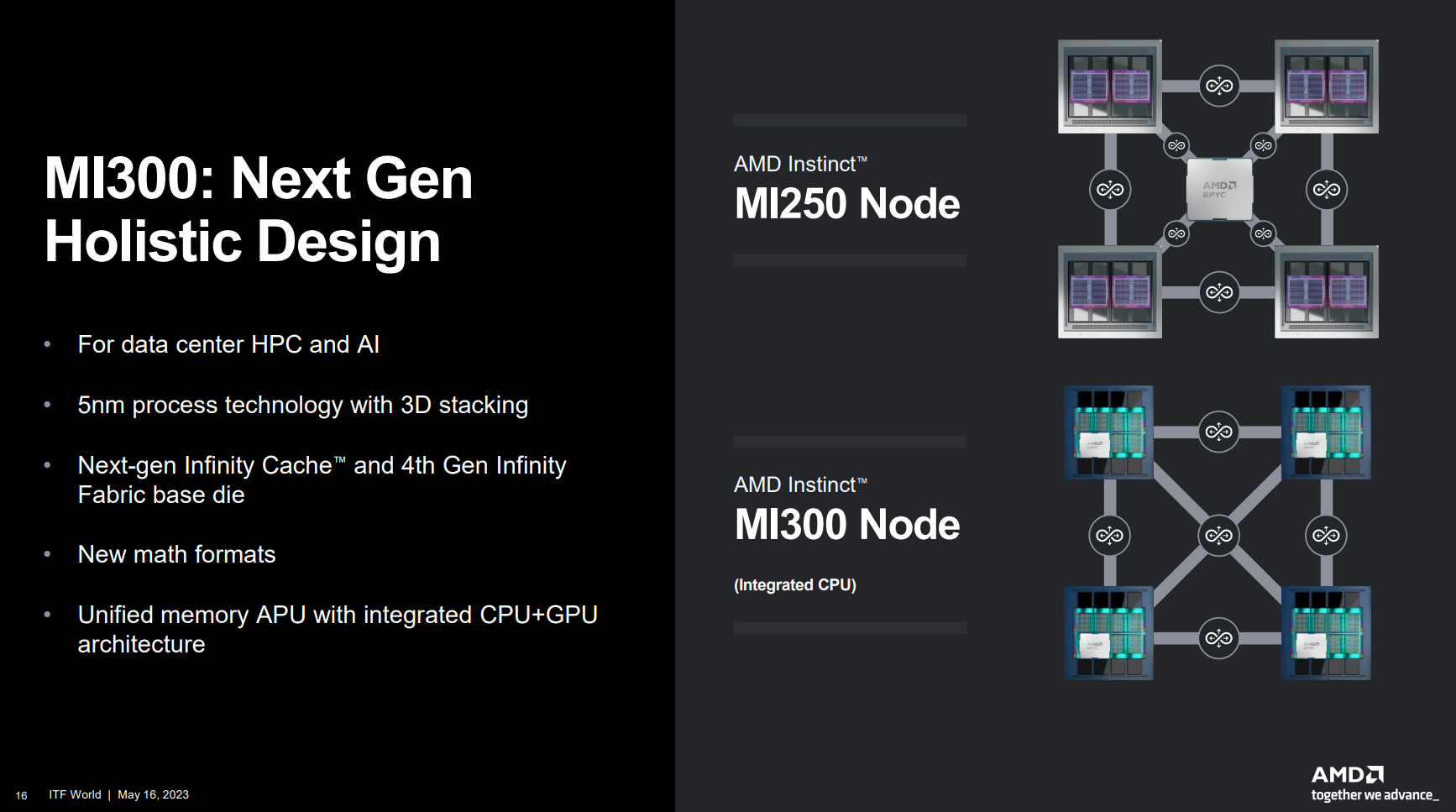

2.) Zen2 fixed some of the power issues, introduced 8-core chiplets and doubled core counts.

3.) Zen3 introduced die stacking and improved latency. The memory controller also improved.

4.) Zen4 finally reduced IF power enough that chiplet CPUs could be used in laptops.

With their dGPUs:

1.) RDNA1 split compute and gaming functions, was the first to use Infinity Fabric, and got past GCN's scaling problems.

2.) RDNA2 introduced ray tracing, improved scaling and introduced Infinity Cache.

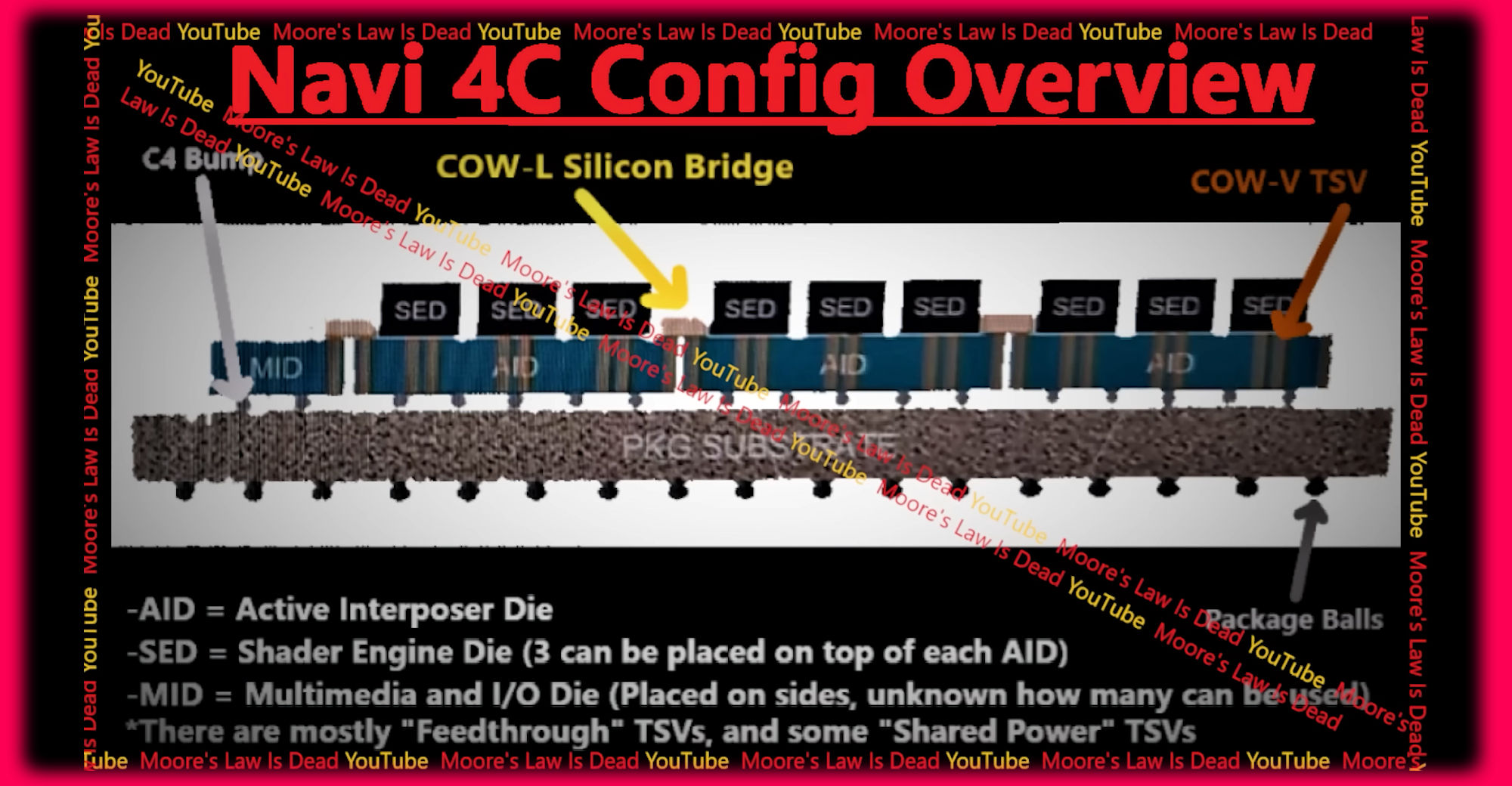

3.) RDNA3 optimised Infinity Cache, introduced machine learning functions and moved to chiplets.

AMD did with the first three generations of RDNA what they did with Zen, Zen+ and Zen2. Were they trying to make the Zen3 and Zen4 technical leaps in a single generation? Maybe they need to be less ambitious on the technical side and concentrate on baby steps first: ideally, improve the design with a solid RDNA4, then move on to more complex arrangements with RDNA5.

One wouldn't call the Zen architecture a failure. Six years after AMD started down that road, Intel still can't compete with it.