@MartinPrince I think you are missing the point of why I talked about Lightroom. The

Lightroom batch tests have nothing to do with core count, as it's beating Intel and AMD CPUs with much bigger core counts. The scaling shows this with the Ryzen 3000 tests, as they defy normal scaling. It's probably down to the huge L3 caches.

If you were using Lightroom exports as an indication of single-core performance, Zen2 would have the highest single-threaded performance of any CPU tested. But everyone knows it is neither single-threaded nor multi-threaded performance being measured here.

The chart you posted above is a batch test. I'm not sure you've worded that correctly, as ANY batch test is always FULLY MULTI-THREADED. Consequently, the results have a lot to do with core count. Extra cache will aid performance, though the main reason for the better performance of the 3900X is its all-core clock speed under full load plus IPC. The Threadripper 2950X runs at ~3.5GHz compared to ~4.2GHz for the 3900X, which also has higher IPC (~18% I believe). This alone could account for the 12-core/24-thread 3900X outperforming the 16-core/32-thread 2950X, before even counting the larger cache:

2950X = 16 x 3500 = 56,000

3900X = 12 x 4200 x 1.18 (IPC) = 59,472
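For anyone who wants to plug in their own numbers, here is a rough sketch of the same back-of-envelope sum in Python. It assumes throughput scales linearly with cores x all-core clock (MHz) x relative IPC, which ignores cache, memory and boost behaviour, so treat it as a ballpark only. The function name `rough_throughput` is just something I made up for illustration.

```python
def rough_throughput(cores, all_core_mhz, relative_ipc=1.0):
    """Crude multi-threaded estimate: cores x clock x relative IPC.

    Assumption: perfect linear scaling, no cache or memory effects.
    """
    return cores * all_core_mhz * relative_ipc


# Figures from the post: 2950X at ~3.5GHz, 3900X at ~4.2GHz with ~18% higher IPC
tr_2950x = rough_throughput(16, 3500)
r9_3900x = rough_throughput(12, 4200, 1.18)

print(f"2950X: {tr_2950x:,.0f}")
print(f"3900X: {r9_3900x:,.0f}")
print(f"3900X / 2950X: {r9_3900x / tr_2950x:.3f}")
```

On these figures the 3900X comes out roughly 6% ahead despite having four fewer cores.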

Either way, that graph is definitely a fully multi-threaded graph, and I can pretty much guarantee you that every CPU on that list was maxing out all of its cores when doing that batch test. As such, it is not really the topic of this thread, and neither is power consumption, value for money etc. We're just concerned with single-threaded performance or, as I prefer, non-fully-multi-threaded performance.

With this being an overclockers forum, a few folks do run overclocked CPUs. The big issue for me is that most of the charts and reviews do not compare standard overclocked systems (high-end air/AIO).

As I've demonstrated, there can be quite a disparity in the results when comparing stock systems with overclocked ones. This is more pronounced in software that uses fewer than the maximum number of threads available.

The fact that Humbug, an experienced member with over 30,000 posts, can be so off base in believing his Ryzen 3600 with PBO would be faster than an overclocked 9700K in single-threaded (or non-fully-multi-threaded) software makes me wonder if there is a general lapse of understanding of:

- How software utilises cores/threads

- How overclocking on both platforms works.

If you look at DXO Photolab on Humbug's post you will see it uses about 8 threads.

My 3900X exhibits similar behaviour:

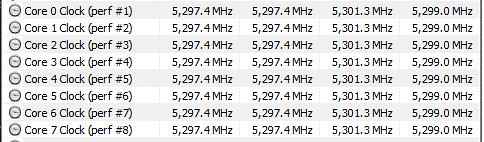

But look at the core clock speeds for the 3900X, taken during just the 18 seconds that DXO Photolab was exporting in the test.

The columns are Current - Minimum - Maximum - Average

You can see that 8 cores are averaging ~4.25GHz.

This is the 3900X core clock during the ~9min single-thread run in Cinebench R20.

You can see that 1 core did reach 4.6GHz, though the average for that core (along with 1 other) was 4.5GHz. The other cores are way below it.

We all know that the advertised clock speed of the Ryzen 3900X is 4.6GHz, but what a few people don't understand is that it does not reach that speed under most conditions where software is using several threads. You also normally cannot do an all-core overclock exceeding 4.6GHz; most people will manage an all-core overclock of 4.3-4.4GHz. And as Grim5 stated earlier in the thread, doing an all-core overclock on Ryzen 3000 will lessen single-threaded performance.

Compare the DXO Photolab test of the 9700K at stock speeds.

And now overclocked.

The Cinebench R20 single-threaded difference between Humbug's 3600X and my 9700K is ~12%. The Geekbench single-threaded difference is 20% (I made a mistake earlier running Geekbench in 64-bit; the 32-bit difference is 20%), but in real-world software that can leverage ~8 cores/threads the difference is ~35%.

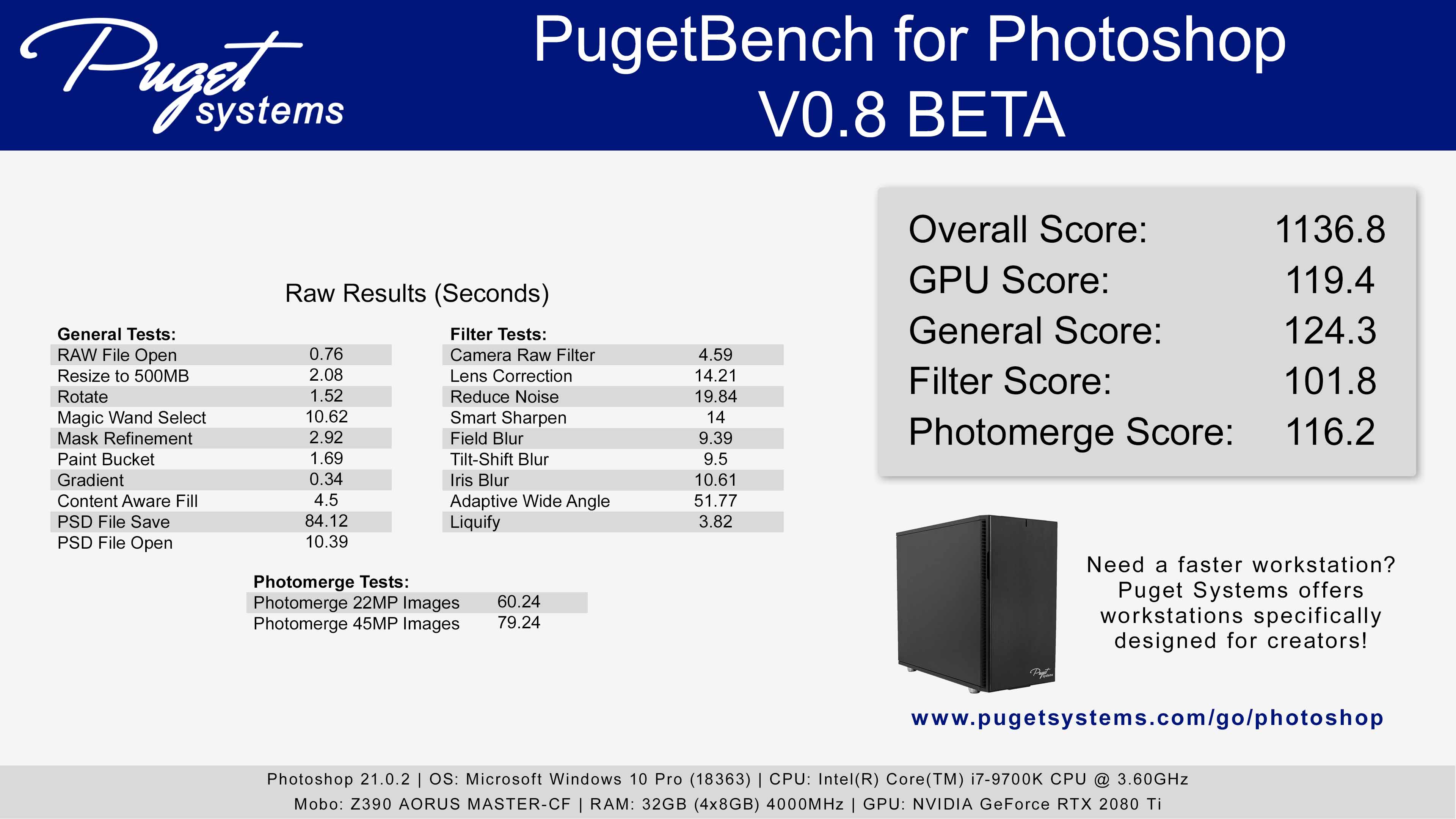

Also, if you look at the Photoshop Puget Overall score, the top-rated systems (3900X, 3960X and 9900K) score ~940, while my 9700K scores 1136, which is ~20% faster. This is simply the current position when it comes to overclocked systems on both platforms and has been for the last 6 months. This may well change in the next 6 months, but that is another topic.
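If you want to check that percentage or compare your own scores, here is a quick sketch in Python. The helper name `percent_faster` is my own, and the ~940 baseline is the approximate figure quoted above rather than an exact score.

```python
def percent_faster(score, baseline):
    """How much higher `score` is than `baseline`, as a percentage."""
    return (score / baseline - 1.0) * 100.0


# Puget Photoshop Overall scores quoted above (baseline is approximate)
top_rated = 940
my_9700k = 1136

print(f"{percent_faster(my_9700k, top_rated):.1f}% faster")
```

The same helper works for any "higher is better" benchmark score, so the Cinebench and Geekbench gaps can be checked the same way.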