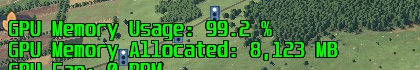

Typically I find the game that is my "go to" to be the one where my 3070 runs out of VRAM....

.....what difference that makes I'm not sure, it's not as though I have an 8GB / 16GB switch to throw. Otherwise the card is rock solid and has been great at either 1440p or UW. There are some later titles where it seems to run out of grunt, up to a point. Hogwarts springs to mind, and I need to get back to that one. But a part of me reckons that inadequate development and optimisation plays as big a part in dragging the performance down as any GPU limitation does.

It wasn't my first choice of that gen; that went to a Red Devil LTD ED 6800XT. It lasted around 2 weeks before being returned for a refund. That AMD card ran as expected in the AAA titles I had to test it with, but in the indie type games I play it was horrendous. The AMD support forums were of little use, and the whole thing reminded me of the problems I'd had in the past with ATI.

With the above in mind, I'll probably resign myself to sticking with another Nvidia card. I would like that to be a choice, made knowing an AMD one would work as expected, but it doesn't, for me.
