Caporegime

- Joined: 18 Sep 2009
- Posts: 30,309
- Location: Dormanstown

290Xs and 390Xs could be had at cheaper prices than the current 580s, to be honest.


Multi-GPU versus single GPU. Also, you're comparing AMD's flagship single GPUs to a mid-range single GPU.

It's not even close to the same thing.

I'm not sure what's "sweet" about the 580 price point, given that the same performance was available in 290Xs years and years ago...

Wait, what??

Single-chip GPU vs CrossFire on one card... How's that even comparable? LOL

Bet they would make a Vega 64 X2, but the PC case would catch fire.

The 580 is 290X performance from ALMOST 5 years ago at a 150 pound lower price...

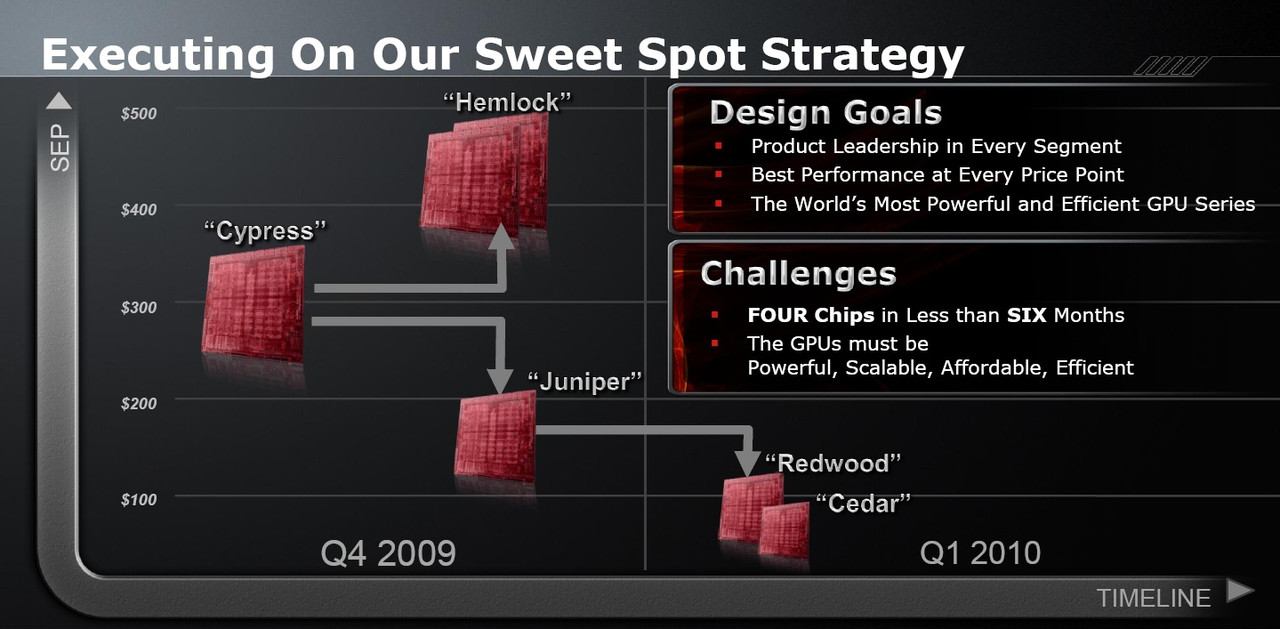

https://www.pcper.com/reviews/Graph...Card-and-AMD-Eyefinity-Review/Radeon-HD-5800-

Just as we saw with the Radeon HD 4870 launch last year, AMD is going for a "sweet spot" strategy in which they design a GPU for the performance segment and then address other areas based on that GPU. With the HD 4870 they released the HD 4850, 4830 and 4770 for the lower price points and also created the HD 4870 X2 dual-GPU card for the ultra-high end segment. It looks like AMD is again taking that approach as we'll see Hemlock dual-GPU cards out before the end of the year based on this roadmap.

I still fail to see how a 250-300 pound 580 is priced at the sweet spot; performance at that price has stagnated for years and years.

I find it depressing.

The sweet spot is about the die area of the chip, not about how much the price inflates.

Nvidia are beating them at that game then surely.

Damn, how many iMac Pros are they selling? Seems a fairly niche product.

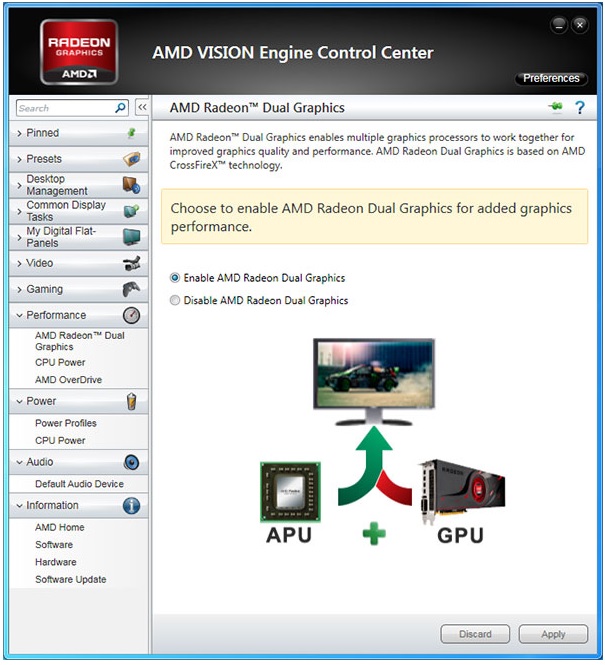

The idea behind multi-chip GPUs is to make the GPUs appear as a single GPU to the developer and the end user. This was mentioned around the release of DX12 and Vulkan.

The issue with traditional CF/SLI is getting the GPUs to produce perfect alternate frames.

An MCM chip does not use CrossFire or SLI; it is seen as one chip, like Ryzen is.

Same. Multi-GPU chips worry me because of how poor CrossFire/SLI support is currently. Most games still primarily use DX11, and developers are slow to change. If, theoretically, AMD were to drop a multi-GPU chip today that beat the 1080 Ti in raw performance and TFLOPS, it would still be crushed in gaming due to poor multi-GPU optimization, latency problems and poor frametimes. It probably won't work well until DX12, Vulkan, and explicit multi-adapter support become mainstream. And that's a chicken-and-egg problem: developers do not have much incentive to get these things working, since the vast majority of the market isn't using multi-GPU configs.
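To put rough numbers on the frametime point, here is a minimal sketch of alternate frame rendering (AFR) with two GPUs. Everything in it is an assumption for illustration: the function names, the 40 ms per-GPU frame time and the offsets are made up, and the model ignores the CPU, the driver and any frame-pacing logic. It only shows that average FPS can look identical while the gaps between frames are very different.

```python
from statistics import mean


def afr_present_times(frame_count, gpu_frame_ms, offset_ms):
    """Hypothetical presentation timestamps (ms) for two GPUs in AFR.

    Each GPU delivers one frame every gpu_frame_ms. GPU 1 runs offset_ms
    behind GPU 0. Even pacing needs offset_ms == gpu_frame_ms / 2.
    """
    times = []
    for i in range(frame_count):
        gpu = i % 2    # frames alternate between GPU 0 and GPU 1
        slot = i // 2  # how many frames this GPU has already delivered
        times.append((slot + 1) * gpu_frame_ms + gpu * offset_ms)
    return times


def summarize(times):
    """Return (average FPS, worst gap in ms) for a list of timestamps."""
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return 1000.0 / mean(gaps), max(gaps)


if __name__ == "__main__":
    # Assumed numbers: each GPU needs 40 ms per frame.
    for label, offset in (("even pacing", 20), ("uneven pacing", 4)):
        fps, worst = summarize(afr_present_times(101, 40, offset))
        print(f"{label}: avg {fps:.1f} FPS, worst gap {worst} ms")
```

With these assumed inputs, both cases average the same frame rate, but the unevenly paced one alternates between very short and very long gaps, which is the stutter people complain about with CF/SLI.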

It was a pipe dream really. When David Wang at Computex 2018 mentioned multi-GPU (Infinity Fabric) for their GPUs, I had no idea he was only talking about the professional market, where it is easily accepted by the software; the same goes for mining software. Unfortunately, Infinity Fabric still doesn't solve the problem with multi-GPU gaming. I thought maybe there was some kind of breakthrough with Infinity Fabric. LMAO, oh boy, just another pipe dream.

I can't remember what the program was called (Hydra, possibly?) that had the IGP and GPU working together. It gave humongous numbers of frames, but gaming like that was impossible, as it was a stutter fest. Completely broken. The IGP and discrete GPU working together has always been hit and miss (more miss in terms of experience).

Didn't it also allow Nvidia and AMD/ATI GPUs to render together?

The IGP and discrete GPU working together has always been hit and miss (more miss in terms of experience)

Conclusions

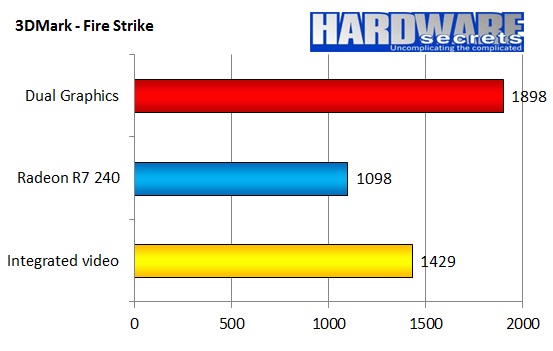

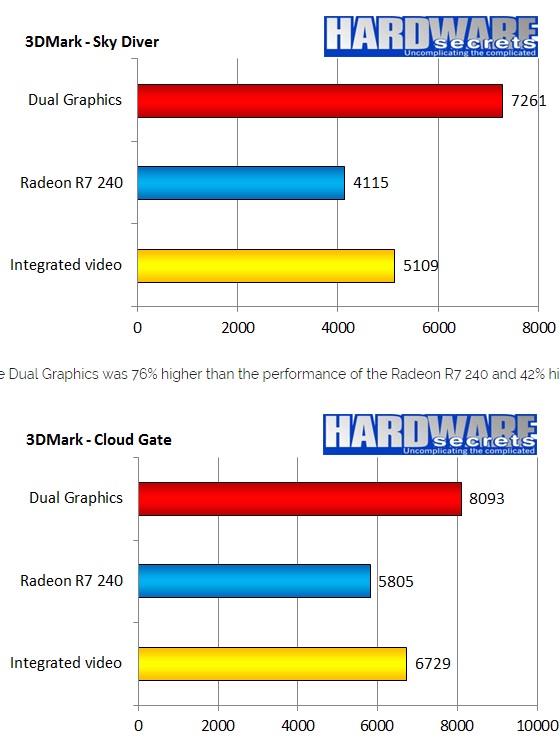

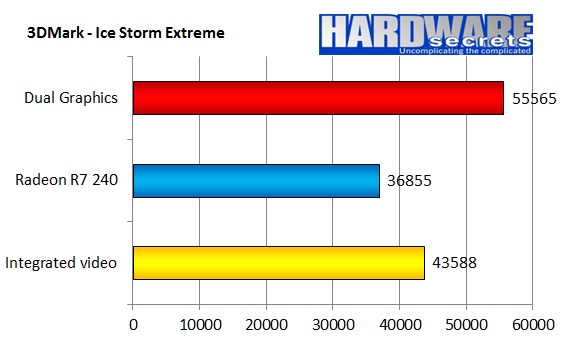

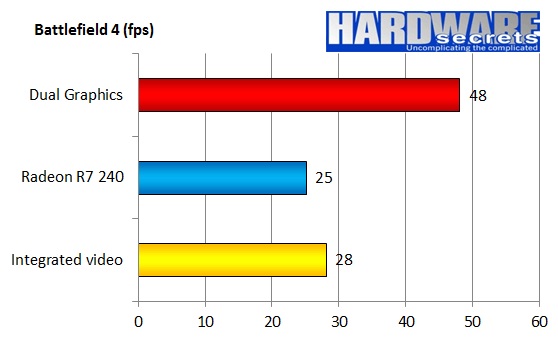

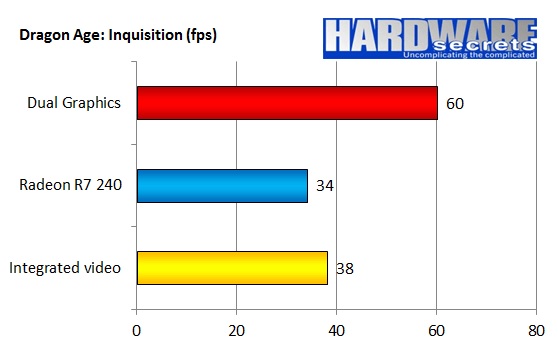

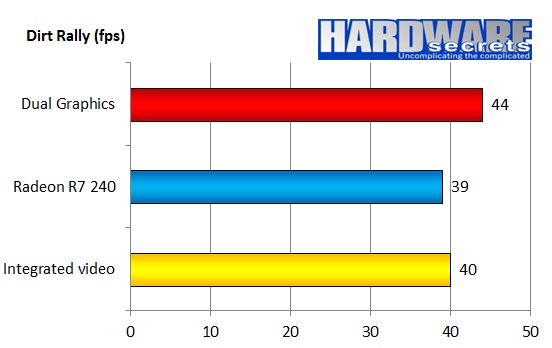

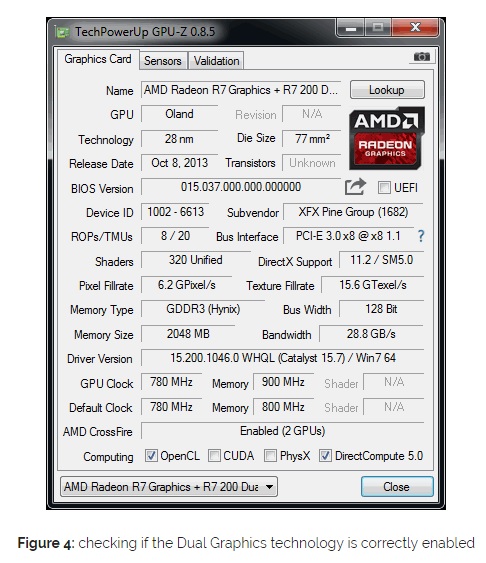

Our tests showed clearly that the AMD Dual Graphics technology really boosts the performance in games. In most of the tested games (as well as in the 3DMark benchmarks) the performance with the technology enabled was superior to that obtained with the integrated video alone or with the discrete video card.

In two games we ran, however, there was no performance difference. Our guess is that, in those tests, the performance was being limited by the CPU, which is not a high-end processor, after all.

Another important detail that was clear is that the integrated GPU of the A8-7670K is more powerful than the Radeon R7 240 video card we used. This makes sense, since the technical specs of this graphics engine are slightly superior to those of the video card we used.

So, if you have an A8 or A10 CPU, there is no sense in buying an entry-level video card and disabling the integrated video of the processor: you will be burning money and maybe even reducing the video performance of your computer. However, you will get a performance gain in most games if you buy a video card that can be used together with the integrated video of your processor using the Dual Graphics technology.


You're living in the past through rose-tinted glasses. Hybrid CrossFire was limited and wasn't perfect at all.

You're just saying buzzwords too.