Power is not energy used; it is a rate of consumption. In my example I use 0.3 kWh of electricity per day to game. If I mined over that same period, I'd use at least 3.6 kWh per day. That's a lot more energy consumed.
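To make the power vs energy distinction concrete, here's a rough sketch of the arithmetic. The wattages and hours are my own assumptions, picked only so the totals match the 0.3 kWh and 3.6 kWh figures above; they aren't measured values from anyone's rig.

```python
def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy (kWh) = power (W) x time (h) / 1000."""
    return power_watts * hours / 1000.0


# Hypothetical loads, chosen only to reproduce the totals quoted above.
gaming_kwh = energy_kwh(power_watts=300, hours=1)    # assumed: 300 W for 1 h
mining_kwh = energy_kwh(power_watts=150, hours=24)   # assumed: 150 W for 24 h

print(f"gaming: {gaming_kwh:.1f} kWh/day, mining: {mining_kwh:.1f} kWh/day")
```

The point being that a lower draw running around the clock can still add up to far more energy than a higher draw running for an hour.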

I've not included the TV/monitor and audio system as I'm comparing apples to apples. It's likely TV/Netflix would be watched for a portion of the time I was mining too, so it's difficult to truly separate the two.

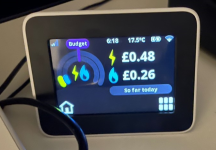

In your case then, gaming costs you 0.48 kWh per hour whereas mining for the day is 8.4 kWh. Quite the difference.

You say you're comparing apples to apples and then proceed to compare power usage per hour vs per day.

I'm with @gpuerrilla on this - comparing apples to apples (e.g. over the same time period) - gaming uses far more power than mining does, and it makes perfect sense to include your monitor, speakers etc. since they wouldn't (shouldn't) be in use while mining, but certainly would be during gaming.
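For anyone who wants to check the same-time-period comparison, here's a quick sketch that puts both figures on a per-hour basis. It only uses the numbers quoted in this thread (0.48 kWh for an hour of gaming, 8.4 kWh for a day of mining); the function name is just something I made up for illustration.

```python
def average_kw(energy_kwh: float, hours: float) -> float:
    """Average power (kW) = energy (kWh) / time (h)."""
    return energy_kwh / hours


# Figures as quoted in the thread, each divided by its own time period.
gaming_kw = average_kw(energy_kwh=0.48, hours=1)   # per hour of gaming
mining_kw = average_kw(energy_kwh=8.4, hours=24)   # per day of mining

print(f"gaming: {gaming_kw:.2f} kW average, mining: {mining_kw:.2f} kW average")
```

This only compares the rates. The total over a day still depends on how many hours each one actually runs.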