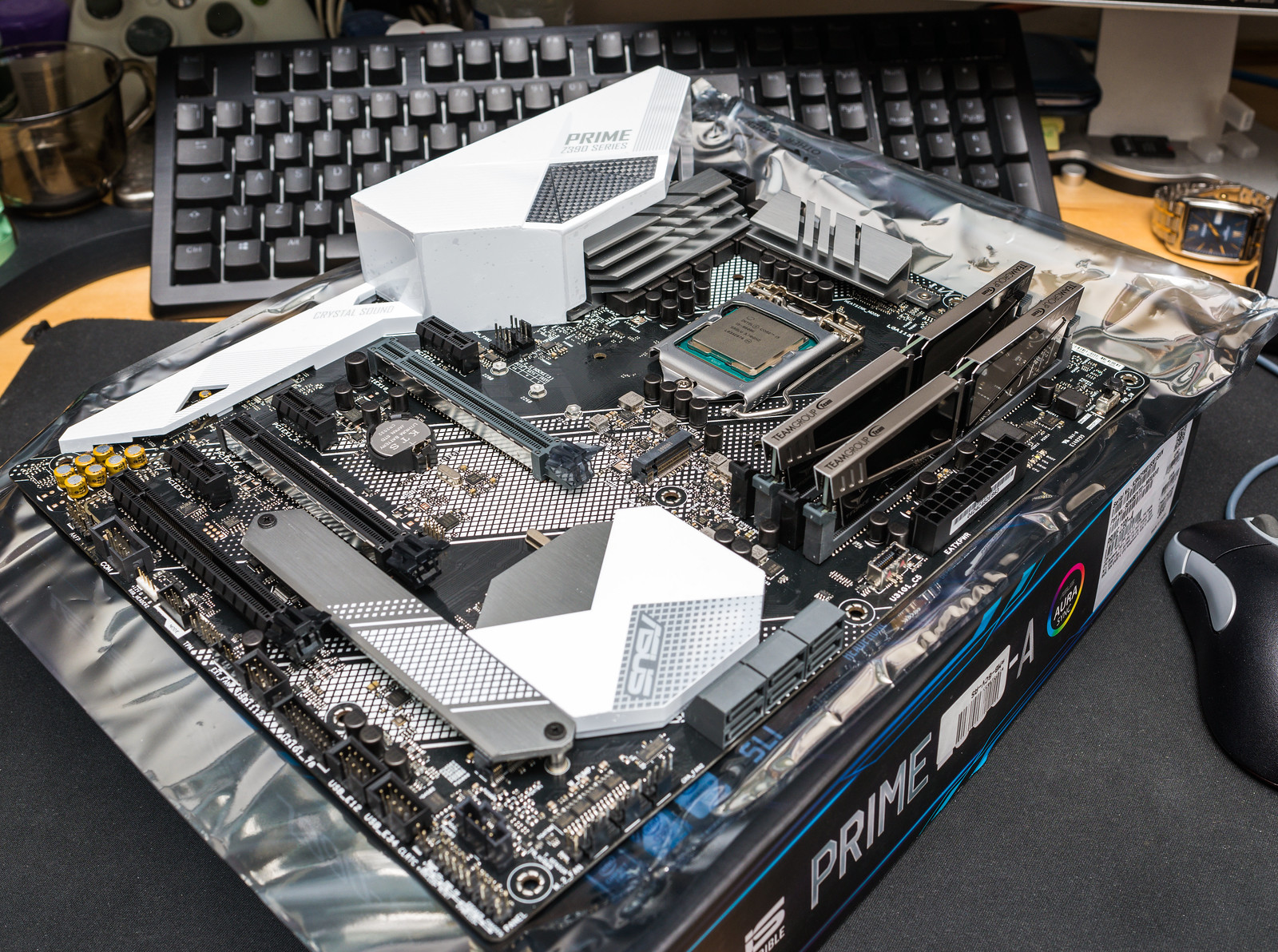

I managed to squeeze the 2x2GB Corsair sticks in, but had to remove the fins from one module to get it to fit under the cooling fan. The Intel expander card is now in the board, which makes things much tidier. I can also now fit the side panel fan to help cool the cards.

Back side looks reasonably tidy.

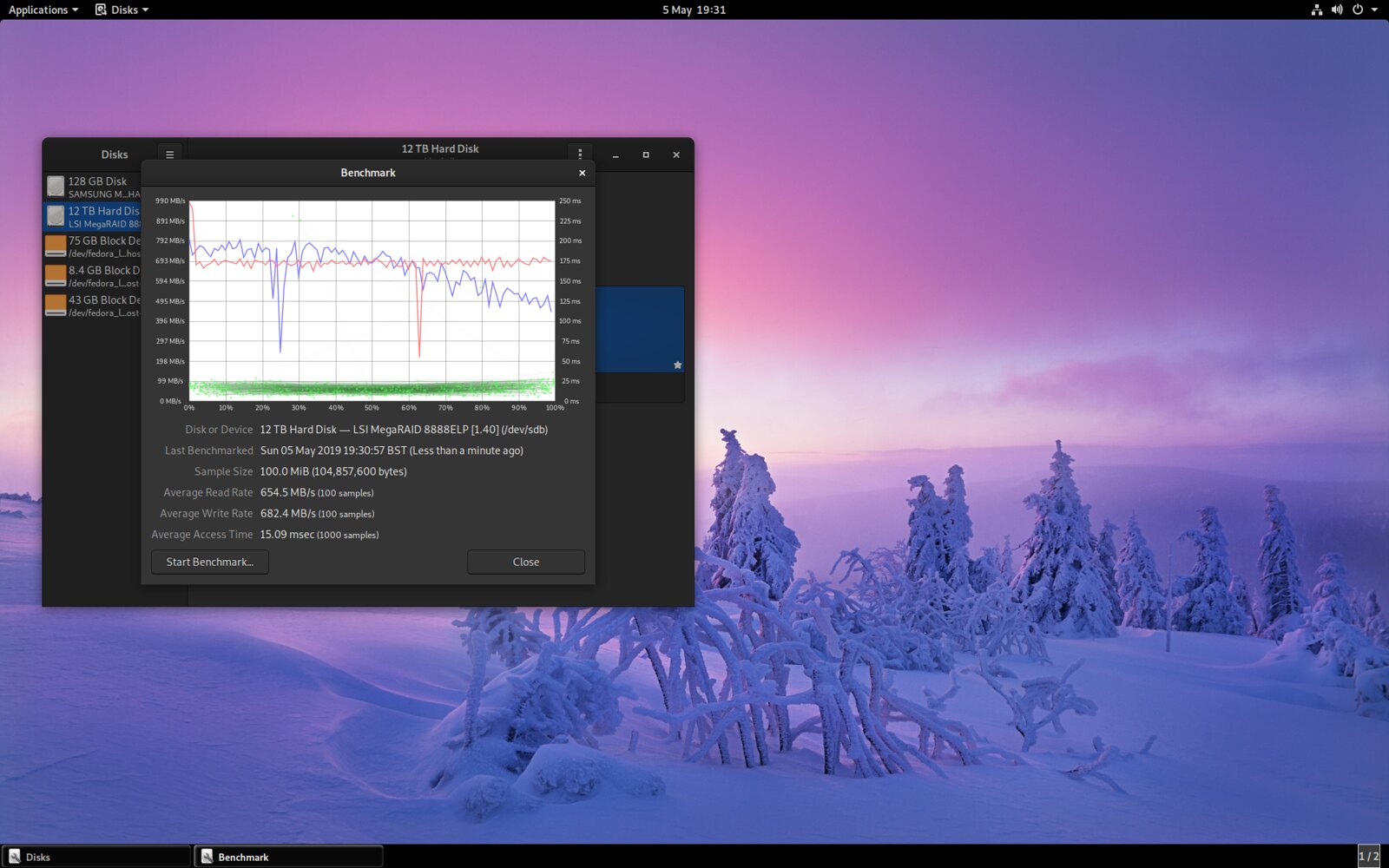

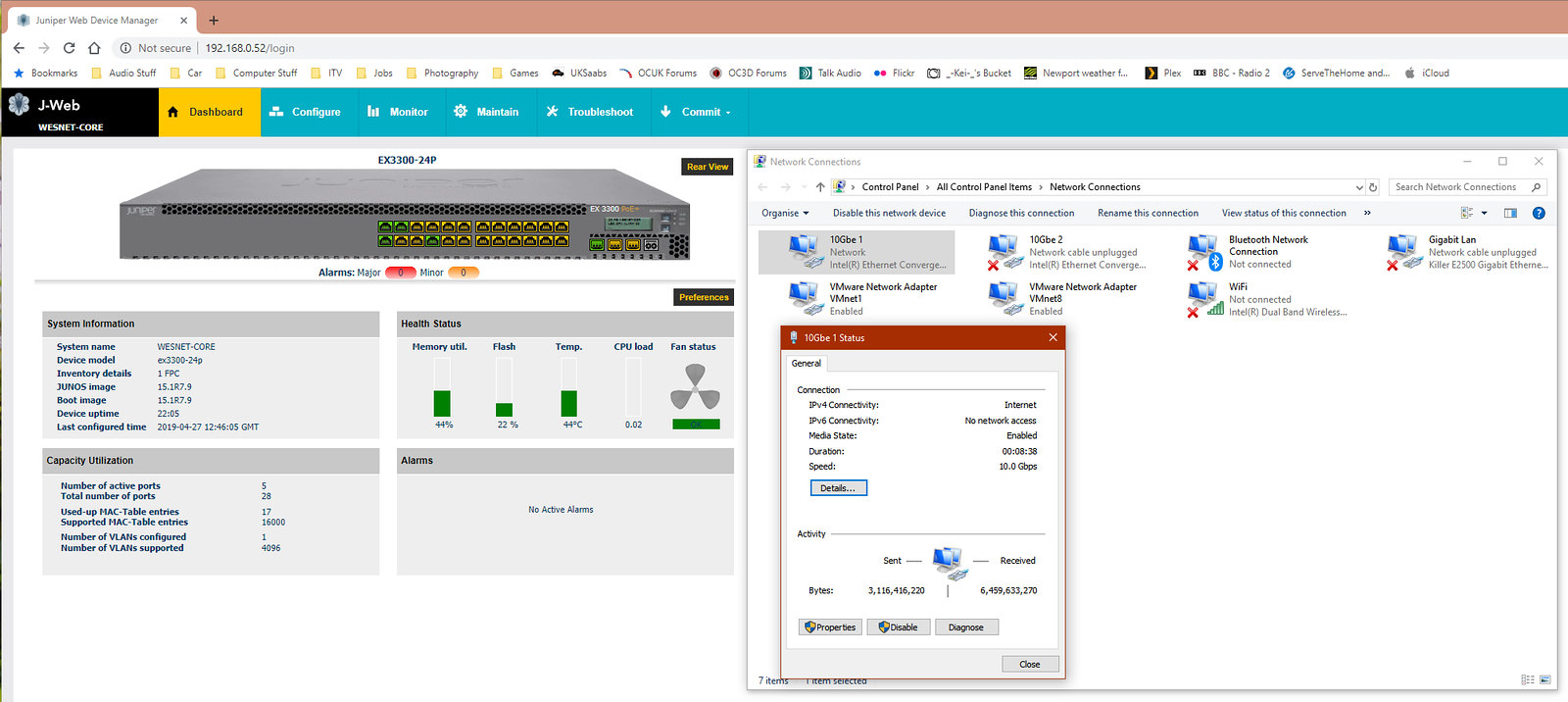

It initially wouldn't boot because it didn't like the memory config. I tried upping the DRAM voltage and dropping to 1333 (from 1600), but no dice; I had to run it at 1066 to get it to boot. It might be possible to run everything at 1600 by tweaking the timings, but I'm not sure it's worth it for this use case. Linux seemed to cope OK with the changes; however, I've run into network issues, as it won't pick up an IP address. I'll need to look into it further tomorrow.
