
Yeah, the shared floating-point unit does make a difference.

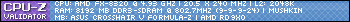

I noticed it when comparing my Athlon X2 270 to a dual-core Kaveri in Cinebench (floating-point dependent, much like games). In the single-core test, the Kaveri gave my Athlon a beating. With all (two) cores firing, they were near identical.

Presumably, a lot of games aren't optimised to favour cores from separate modules?

They're meant to be; it comes down to thread scheduling priority. As with Hyper-Threading, a two-threaded game shouldn't put both threads on core 1 and its second logical core; it should use two separate cores.
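To illustrate the "spread threads across modules first" idea, here's a rough sketch. It assumes a hypothetical FX-8350-style layout where adjacent logical CPUs (0/1, 2/3, ...) share a module and its FPU; real CPU numbering depends on the OS and BIOS, so the mapping is an assumption, not something read from the hardware. The `spread_threads` helper is made up for this example.

```python
from collections import defaultdict


def spread_threads(n_threads, cpu_to_module):
    """Pick logical CPUs for n_threads, using one CPU per module before doubling up.

    cpu_to_module maps a logical CPU id to its module (or physical core) id.
    """
    # Group logical CPUs by the module they belong to.
    by_module = defaultdict(list)
    for cpu in sorted(cpu_to_module):
        by_module[cpu_to_module[cpu]].append(cpu)
    modules = sorted(by_module)

    chosen = []
    depth = 0  # 0 = first CPU in each module, 1 = second CPU, ...
    while len(chosen) < n_threads:
        progressed = False
        # Round-robin over modules so no module gets a second thread
        # until every module already has one.
        for m in modules:
            cpus = by_module[m]
            if depth < len(cpus):
                chosen.append(cpus[depth])
                progressed = True
                if len(chosen) == n_threads:
                    break
        if not progressed:
            raise ValueError("more threads than logical CPUs")
        depth += 1
    return chosen


# Hypothetical layout (assumption): 4 modules, adjacent CPU pairs share a module.
FX_LAYOUT = {cpu: cpu // 2 for cpu in range(8)}

print(spread_threads(2, FX_LAYOUT))  # [0, 2]
print(spread_threads(6, FX_LAYOUT))  # [0, 2, 4, 6, 1, 3]
```

The same function works for Hyper-Threading if the mapping goes from logical CPU to physical core instead of module. On Linux, Python's `os.sched_setaffinity(0, {cpu})` can restrict the calling process to a chosen CPU set, though normally the OS scheduler is expected to make this placement decision on its own.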

), followed by Athlon XP 'Barton', the original FX dual core.
