You seemed confused about the CPU, the chiplets and the I/O die. The chiplets are the CPU dies.

I did query you on that, but you seemed so convinced and, as I only have a passing interest, I deferred to you.

No, I'm not confused. I know what a chiplet is, what an I/O die is and what a CPU is, and to be honest it still makes no sense for the PCIe controller to be on the chiplets. That quote from the Anandtech article is purely an assumption based on AMD not saying something (Tom's Hardware made a similar assumption). The article itself is dated November last year, from a first look at EPYC Rome, and even now AMD have not revealed information about the I/O die's full capabilities. And if the I/O die doesn't hold the PCIe controller too, why in the blue hell is it so big?

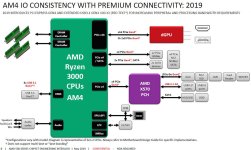

I've had a look around the tinterwebs and I can find nothing which states exactly where the PCIe controller resides in Zen 2. Plus, if the PCIe controller is on the chiplet, then that means CPUs with smaller chiplet counts will also have fewer PCIe lanes. So for example, EPYC Rome has 128 lanes available across 8 chiplets, so that's 16 lanes on each chiplet. X570 has 24 lanes, so with two chiplets (32 lanes) that means there are 8 lanes explicitly blocked from being used? And does that mean ALL Ryzen 3000 CPUs will be dual-chiplet implementations (and therefore using 4 lanes each to communicate with the I/O die) in order to get the 24 lanes? Because a single chiplet only has 16 lanes in it.
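To put numbers on that, here's a quick sketch of the arithmetic. It assumes, purely for the sake of argument, that the lanes really do live on the chiplets and are split evenly, which is exactly the claim I'm doubting. The function name and constants are mine, not anything from AMD.

```python
# Hypothetical premise: PCIe lanes sit on the chiplets, split evenly.
EPYC_ROME_LANES = 128      # figure quoted in this post
EPYC_ROME_CHIPLETS = 8     # maximum chiplet count on EPYC Rome
X570_LANES = 24            # figure quoted in this post

LANES_PER_CHIPLET = EPYC_ROME_LANES // EPYC_ROME_CHIPLETS  # 16


def lanes_from_chiplets(chiplets: int) -> int:
    """Total lanes a CPU would have if every chiplet carried its own share."""
    return chiplets * LANES_PER_CHIPLET


for chiplets in (1, 2):
    total = lanes_from_chiplets(chiplets)
    spare = total - X570_LANES
    if spare < 0:
        print(f"{chiplets} chiplet(s): {total} lanes, {-spare} short of {X570_LANES}")
    else:
        print(f"{chiplets} chiplet(s): {total} lanes, {spare} left unused")
```

One chiplet comes up 8 lanes short of 24, and two chiplets leave 8 lanes over, which is where the awkward questions above come from.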

Furthermore, why would the chiplet in an EPYC Rome CPU be responsible for socket-to-socket communication? The whole point is I/O die to I/O die connectivity, lashing the 2P sockets together with 48 or 64 lanes, but the chiplet is responsible for that?

But at the same time, if the I/O die for EPYC has to host all the PCIe lanes for up to 8 chiplets, then that suddenly seems like too much.

There is a Serve The Home article which talks about how EPYC Rome has 8 sets of 16 lanes and how they can be configured to expose PCIe lanes for the system, lane allocation between sockets and I/O die to chiplet communication on the Infinity Fabric. That's 128 lanes available per EPYC Rome CPU. Only 128 lanes in an I/O die that size seems perfectly fine to me.
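As a rough illustration of how those 8 sets might be carved up in a 2P box, here's a small sketch. It assumes the sets are handed over whole (16 lanes at a time) and only models the socket-to-socket share; the function and key names are made up for the example, and the real configuration options may well be finer-grained.

```python
SETS = 8             # sets of lanes per EPYC Rome CPU, per the article
LANES_PER_SET = 16   # lanes in each set


def split_lanes(socket_link_sets: int) -> dict:
    """Split one CPU's lanes between the socket-to-socket link and the rest.

    Assumption: sets are allocated whole, never partially.
    """
    if not 0 <= socket_link_sets <= SETS:
        raise ValueError("socket_link_sets must be between 0 and 8")
    return {
        "socket_to_socket": socket_link_sets * LANES_PER_SET,
        "remaining": (SETS - socket_link_sets) * LANES_PER_SET,
    }


# The 48-lane and 64-lane 2P links mentioned above are 3 and 4 sets.
for sets_used in (3, 4):
    print(sets_used, split_lanes(sets_used))
```

Either way the total per CPU stays at 128 lanes, all of it accounted for inside the I/O die.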

All in all, I'm not saying I'm right or wrong, I'm simply saying I don't know, nor does anybody else because AMD haven't revealed full details on the I/O die. But PCIe controller on the chiplet just makes no sense; the chiplets do the computing, the I/O die does the communication. That's the entire point, surely?