My Doom Eternal videos. As I said, I'll upload longer footage when I have more time. They are still processing, so the HD/4K versions probably won't be finished until later tonight:

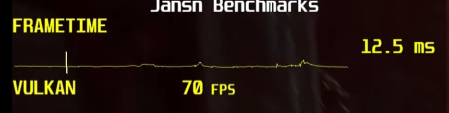

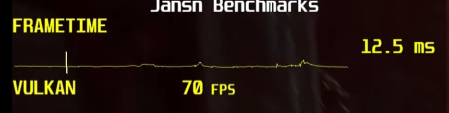

I count one area where the FPS drops to 57, with no frame-latency spike or stutter at that point. That would be unusual if VRAM had actually run out, i.e. it looks nothing like what we see in the 6800 XT video above: