I really doubt games will be allocating that much, but let's see.

For the current generation at 4K, typical VRAM usage is around 6-8 GB at ultra settings; next gen looks like it will be 8-12 GB.

I don't think 16 GB will be utilised by any game for at least another 6 years. That's just me looking at history and speculating; anything could happen...

Games barely touch 8 GB now, and it's the end of a console generation in which consoles had 8 GB of shared RAM.

Consoles have 16 GB this time, so I don't think 16 GB will be used until 2025 or later.

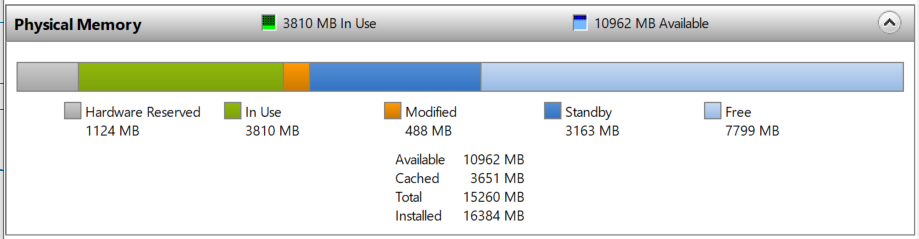

I have seen performance slowdowns appear BEFORE the whole memory capacity is occupied.

The system needs a certain amount of free and standby memory available, and when memory is saturated, you get terrible stutter.

More system memory and more VRAM should also be used to take work off the SSD.