Soldato

- Joined: 21 Jul 2005
- Posts: 20,702
- Location: Officially least sunny location -Ronskistats

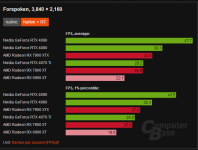

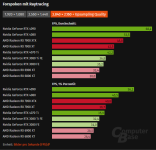

I have an RTX 3060 Ti myself. The RTX 4060 Ti is really an RTX 3050 replacement, so although Nvidia added extra cache like AMD does, that can only do so much at higher resolutions.

Oh, that's another thing AMD did that got copied and gets glossed over.

would have pushed the 3080 10GB to the xx60 tier of cards... maybe it should have been called a 3070 or 3070 Ti with 10GB/20GB, and the 3080 Ti 12GB, with maybe 24GB of VRAM, should have been the OG 3080.
