I suspect you'll be right.

Mark my words!

I'm not so sure. The 20-series was a bit of a reality check for Nvidia, and Ampere's pricing and performance were adjusted in response (then completely torpedoed by Covid and crypto-mining). Nvidia's current situation with Ada may be OK for them (with the AI boom), but it can't be good for their AIB partners.

I doubt we'll ever get back to Ampere's price-to-performance vs. the 20-series, but I expect a more consumer-friendly line-up for the 50-series than we have right now (insert 'sweet summer child' memes below).

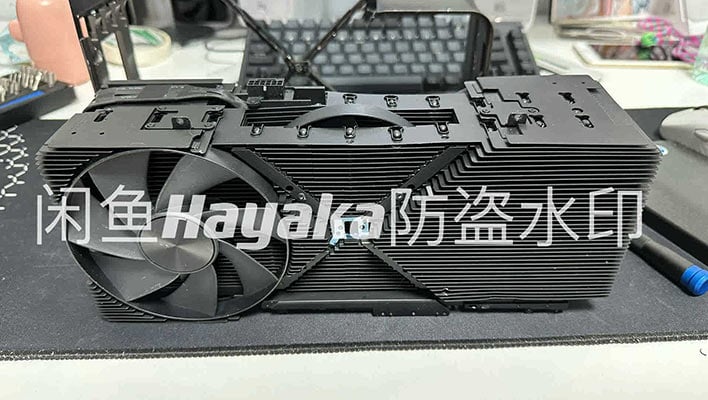

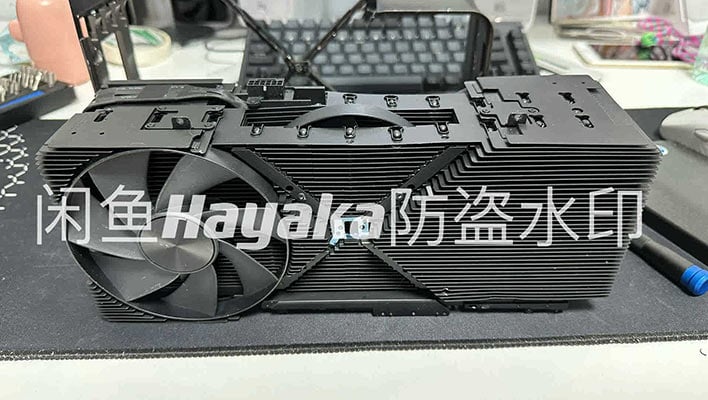

Teardown Of Prototype Cooler For Canceled 4090 Ti Reveals A Hidden Surprise

These teardown pictures form the most extensive exposé to date of what is thought to be an NVIDIA GeForce Ada Lovelace SKU that has been axed. (hothardware.com)

Another look at the alleged 4090 Ti cooler: it has another fan buried in the middle of the heatsink.

Likely will be used for the 5090, I guess.

Nah, it's a bulky and ugly mother.

What's the reason for the heatsinks getting ever larger? I thought the 4000 series ran pretty cool.

It runs cool due to the cooler.

Rumor had it that the 4090 was originally designed to run at 600 W, so all the coolers got scaled up. When Nvidia finally realised they were taking crazy pills, they scaled it back to a more reasonable (!) 450 W, but by then all the AIBs had already made their new coolers.

This also had the humorous side effect of making AMD look like fools when they trumpeted the energy efficiency of RDNA3, only to realise they were less energy-efficient than Nvidia (oops!).

Oh yeah, I remember this from AMD. It got me hyped, and how wrong was I.

Yeah, AMD's (well, specifically Radeon's) marketing has seemingly always been terrible, but the 7000-series launch was pure clown-world.

Well, the GTX 970 had a 150 W TDP. The 4080, which is really more of an x70-series card, is more like 300 W.

This was a flat-out lie by AMD. The energy efficiency of RDNA2 and RDNA3 is almost the same, and when you have moved to a better node, that is not good engineering. They have always had issues with energy efficiency, with Nvidia holding a clear lead in this area for most of the past decade, but I had hoped they had turned over a new leaf when RDNA2 was so good in this area.

What this means to me as a consumer is that I will not go above a certain wattage for a GPU. I think the 350 W of my 7900 XTX is my upper limit, and any new GPU purchase will have to be under that power consumption for me to seriously consider it.