You also need to look at the context. GPUs have been sold at huge profit margins in recent times; MSRP was basically just a paper figure. They can still sell for a reasonable amount and make normal profits; whether they will want to do that is another thing. They could also be underplaying the next gen to shift the current stock. Once that liability has gone, I wouldn't be surprised to see some announcements.

NVIDIA 4000 Series

- Thread starter MrClippy

If there is one thing history has repeatedly shown, it is that each time this situation crops up with newer, more power-hungry cards, enthusiasts who can afford high-end cards WILL bother, as they chase performance and don't give a crap about new PSU or electricity costs. Saying people won't buy them unless there is a 2x performance increase is also silly, as that is just not realistic or expected; people will buy the cards for a +50% performance increase, which is still very respectable and a huge boost in minimum frames at higher resolutions.

I'm not concerned about power for money reasons. It's the heat.

A 400w gpu already requires a dedicated window-mount AC to avoid getting my sim rig all sweaty in the summer. Blasting the central AC would freeze my wife out of any other part of the house since she's not running a damn space heater in the summer like me.

I could buy a more powerful window-mount AC unit, but the temperature tug of war is already comical and results in awkward, uncomfortable temps while the GPU and the window-mount AC unit fight over which direction the room temperature should go at the beginning of a session.

Everything is getting more expensive; I'm not entirely sure how GPUs are going to avoid it. Also, the dumbing down of the 4080 makes sense: if the 3080 has such a reputation, 4080s will end up selling (price dependent) on reputation regardless of performance. Then there are the people who missed out last time and are determined not to this time.

Reputation? As in GPU = status symbol?

Average buyers just look for a quick reference, i.e. Nvidia = known brand, so I'll buy it. The mindshare is real.

> I'm not concerned about power for money reasons. It's the heat.

So aside from a new PSU + more heat + everything mentioned, it's not an issue!

Not exactly a bargain anymore, is it?

> Average buyers just look for a quick reference, i.e. Nvidia = known brand, so I'll buy it. The mindshare is real.

While "mindshare" seems to be helping Nvidia relative to AMD, current stock and price trajectories seem to show there are limits.

People can buy the mighty 3090 Ti for just over $1500 right now, but the number of people willing to do so appears to be smaller than the number of units on the shelf, as they have sat there for about a week now. Almost $500 below MSRP... sitting on the shelf.

It's been said before: we reached saturation a while back. Those who wanted a GPU now have one. The average 1060 Steam Hardware Survey gamer does not need a 3080+ card.

The general punters out there baulk at the prices and probably have a ceiling of about £350. It would be handy to see current sales data to be able to interpret the reality.

> I'm not concerned about power for money reasons. It's the heat.

Your energy bill will also skyrocket when using an AC and a powerful GPU.

> Will probably be more of an attraction to those who couldn't get hold of a decent 3000 series card, much like Turing when it came out and people with 1080 Tis were less prone to jump, since the 1080 Ti was such a big step up compared to the previous gen.
>
> Think I'd be more interested to see what AMD brings out this round, as for the 2nd time it will be competing, and competing well, with Nvidia, so hopefully that will level out prices a bit, especially if AMD decides to undercut them (which they normally do).

I would love to go back to AMD, but sadly it looks like they still won't be matching Nvidia in the 2 areas that I care about:

Upscaling:

FSR 2 is noticeably better than FSR 1 but still has a few issues compared to DLSS:

- performance gain isn't as much as DLSS in RT scenarios (you need all the perf. you can get when it comes to RT and high res. + high refresh rate gaming)

- at the moment, it's still not in most of the games I care about or really need the extra perf. for; obviously this will change going forward, but the current showing isn't exactly filling me with confidence

- IQ results are very subpar outside of their poster boy game, i.e. Deathloop, and even then it's a bit meh if you're used to DLSS, particularly for temporal stability

Obviously this could and should improve going forward, but how long will that be? Another 2+ years?

RT:

So far, it seems like RDNA 3 will only be a slight upgrade over RDNA 2; at least, what AMD has said so far hasn't filled me with confidence, i.e. "RT will be more advanced than RDNA 2". It's not exactly hard to beat RDNA 2's RT, and that kind of statement isn't selling it... whereas Intel have stated their RT perf beats Ampere....

That, and AMD seem to have problems getting RT to render properly in some titles initially, which gets resolved with a driver update and/or game update.

If AMD can't deliver on those 2 points then they need to price their GPUs accordingly, i.e. be at least £100 cheaper than the competition imo. As attested to by the likes of HU etc., the "RTX" feature set is valuable and does hold some weight over AMD.

If 40xx is **** for RT and/or the price is silly, I'll simply reduce settings further and/or use DLSS Balanced more often and wait till something worthy comes along. Playing at 3440x1440 more often these days rather than 4K also helps increase longevity.

> It's been said before: we reached saturation a while back. Those who wanted a GPU now have one. The average 1060 Steam Hardware Survey gamer does not need a 3080+ card.

When these things could print money at a rapid pace, there was no realistic way to "saturate" the market, because the more you bought, the more money you could print. That has changed now.

I think Nvidia noticed the change in market conditions. The 3080's MSRP seemed to indicate that Nvidia noticed what happened with Turing.

> Reputation? As in GPU = status symbol?

No, as in the amount of difficulty people had in getting a 3080, and the fact that it's generally considered to be the optimum bang-for-buck high-end card. People may then say that if the 3080 was the sweet spot, the same must be true of the 4080, regardless of whether it has (relatively speaking at least) been hobbled a little.

They tried that with Turing when they offered gamers 1080 Ti performance for 1080 Ti money. (Because ray tracing.)

I don't think the ploy was as effective as Nvidia had hoped it would be. Otherwise they would have priced the 3060 Ti at $700, scribbled "3080" on the box in crayon, and just given us the same level of performance at the same price point as the previous gen.

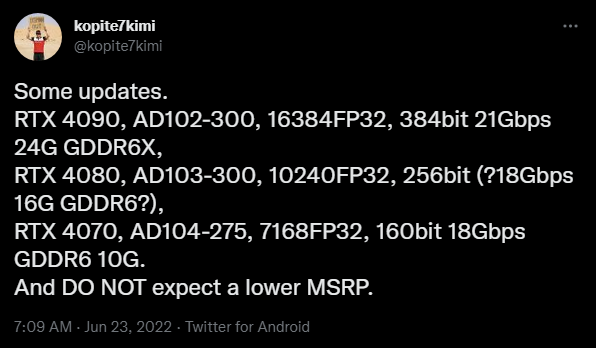

Leaks say the RTX 4070 is 300 watts. That is a bit concerning to me; it's 100 watts higher than my current GPU.

Having said that, if it's RTX 3090 level performance that's fine, in which case an RTX 4060 might be better suited to me, but that also depends: if it's an 8GB £500 card I might as well get an RTX 3070 now.... or better still an RX 6800.

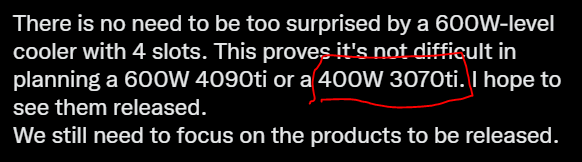

"Oh don't worry about stupidly high power consumption, i hope to see them released"

These cards aren't even out yet and already the pro-brand lobby have their activist hats on. That's annoying: these things will cost 50p an hour to run, but don't put pressure on my favourite brand to do better, just bend over and take it, give it to me dry Jens, love you.

Genuine question: you have been going on about VRAM and how Nvidia are awful for this, no longevity, people are silly to be buying GPUs with low VRAM etc., yet you are happy enough to buy an 8GB 3070?

I'm surprised you aren't back on AMD already, as you don't care about or rate RT, DLSS or much of anything about Nvidia's offerings over AMD's, so surely AMD would be better for your needs/wants?

You said it yourself:

> Leaks say the RTX 4070 is 300 watts. That is a bit concerning to me; it's 100 watts higher than my current GPU.
>
> Having said that, if it's RTX 3090 level performance that's fine, in which case an RTX 4060 might be better suited to me, but that also depends: if it's an 8GB £500 card I might as well get an RTX 3070 now.... or better still an RX 6800.

How about wait and see? So far everything points to a 4070 at least matching a 3090 while still using less power, and chances are, if it's anything like Ampere, you'll be able to undervolt and reduce power consumption by at least 80 W.

I think what you need to get through your head is this: Nvidia, Intel, AMD, it's all the same to me. They come in different coloured boxes and they have different driver UIs, but in the end they all do the same thing.

Beyond that, DLSS is irrelevant to me; ray tracing isn't. That's why Nvidia are still an option to me: they just do it better, at least with the current crop of GPUs. VRAM also matters to me, so I'm torn between AMD and Nvidia. Whichever brand I end up with, I don't know; I honestly have no idea. I am looking at both the RTX 3070 and the RX 6800 and I don't know which I like more.

If I wait until the next generation, and I'm in doubt as to whether it's worth it, power consumption may come into it, because come October we could all be paying 60 to 70p per kWh. You can undervolt any GPU or CPU: in high-stress loads my CPU's cores pull 80 watts at 4.7 GHz, down from 105 watts at 4.6 GHz. A 12600K pulls 200 watts; I'm never going to get that anywhere near my current CPU, and that's the point: a high power consumption chip is always going to consume more power than a low power consumption chip. So the "but you can undervolt it" argument applies to everything, so it really doesn't apply at all.
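To put rough numbers on the running-cost side of this, here is a minimal sketch of the arithmetic: hourly cost is just the draw in kW multiplied by the unit price. The function names are made up for illustration, the 60 to 70p per kWh prices and the 200/300/400 W figures are the ones floating around this thread, and it assumes a constant draw with PSU losses and the rest of the system ignored.

```python
def running_cost_pence_per_hour(watts: float, pence_per_kwh: float) -> float:
    """Cost in pence of running a constant load for one hour."""
    # Convert watts to kilowatts, then multiply by the unit price.
    return watts / 1000 * pence_per_kwh


def watts_for_cost(pence_per_hour: float, pence_per_kwh: float) -> float:
    """Constant draw in watts that would produce a given hourly cost."""
    return pence_per_hour / pence_per_kwh * 1000


if __name__ == "__main__":
    # Prices (p/kWh) and wattages are assumptions taken from the thread.
    for price in (60, 70):
        for watts in (200, 300, 400):
            cost = running_cost_pence_per_hour(watts, price)
            print(f"{watts} W at {price}p/kWh -> {cost:.1f}p per hour")
```

Under those assumptions a 300 W card at 70p per kWh comes to about 21p an hour, and hitting 50p an hour at that price would need roughly 714 W of constant draw.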

Fair enough, thought you weren't a fan of RT either; maybe it was someone else. If RT is something you care about, and given how it is being added to the majority of games and will only gain more traction with more effects being added going forward, you won't want to be going with AMD imo. But then again, if you won't use DLSS/FSR then RT performance will not be satisfactory....

That is true, but Ampere undervolts very well, iirc better than RDNA 2? Also bear in mind, Ampere is considerably better for RT performance efficiency too (if you will be playing RT titles...).

If you are really that power conscious, you can always do as tna suggested and lock your fps to a certain value, e.g. 60, as ultimately it looks like both RDNA 3 and Ada will be consuming more power.

> I'm not concerned about power for money reasons. It's the heat.
>
> A 400w gpu already requires a dedicated window-mount AC to avoid getting my sim rig all sweaty in the summer. Blasting the central AC would freeze my wife out of any other part of the house since she's not running a damn space heater in the summer like me.

You know what? I'd be asking myself this question:

Am I playing this hobby? Or is this hobby playing me?

> I think what you need to get through your head is this: Nvidia, Intel, AMD, it's all the same to me. They come in different coloured boxes and they have different driver UIs, but in the end they all do the same thing.
>
> Beyond that, DLSS is irrelevant to me; ray tracing isn't. That's why Nvidia are still an option to me: they just do it better, at least with the current crop of GPUs. VRAM also matters to me, so I'm torn between AMD and Nvidia. Whichever brand I end up with, I don't know; I honestly have no idea. I am looking at both the RTX 3070 and the RX 6800 and I don't know which I like more.

Sorry, but this is simply not true: not all GPUs are the same, and to say otherwise is a real oversimplification. AMD and Nvidia have different strengths and weaknesses and different implementations of common technologies, which affect their performance in various games and when using various APIs and rendering techniques. The fact that you also clearly contradict yourself in your second paragraph by highlighting some notable differences shows that you are perhaps a little confused and lacking in the specifics of this particular topic.