Fingers crossed they are as disruptive as the HD4000 series. They went for the jugular with their price/performance.

If Nvidia released a GPU for £1200, why should AMD release a competing product too cheap?

AMD just need to wait for Nvidia to announce their disgraceful pricing and then undercut them by 20%.

NVIDIA ‘Ampere’ 8nm Graphics Cards

- Thread starter LoadsaMoney

It will easily beat it imo.

Are we expecting a big Navi to even match a 2080ti?

Question is, if they can fit 2080 performance in a console, why are you doubting their ability to match a 2080Ti performance in a big fat graphics card?

If anything even just looking at a 5700XT, it would be bizarre if they could not match a 2080Ti on a better process and new architecture. Not to mention they can use a bigger die size.

People make 2080Ti performance sound like the holy grail, it was a very disappointing jump from a 1080Ti in the bloody first place. It will be easy to beat using a new architecture and smaller manufacturing process etc.

That was one of my favourite series. The 4870 cost me £200 brand new on release and was the best card money could buy at the time and much better than an 8800GTX.

Fingers crossed they are as disruptive as the HD4000 series. They went for the jugular with their price/performance.

Soldato

- Joined

- 30 Nov 2011

- Posts

- 11,499

LOL, the video isn't 720p which completely invalidates your point of view. As it stands it is proof at 4k.

PC Specs:

Intel Core i7-9700K, 8x 4800 MHz (OC); 32 GB DDR4 3000 RAM; ASUS ROG STRIX GeForce RTX 2080 Ti; ASUS ROG STRIX Z390F Mainboard; 250 GB Samsung 970 Evo Plus M.2 PCI SSD; 4 TB HDD; Windows 10 Pro 64bit

The point of my posts on the subject is that we are in a time in which we are getting new consoles at the same time we are getting new video cards. The point of which is to play games, not glorify video cards. So your car analogy is horrible as we don't wash nor detail video cards. Neither do we meet up at the local walmart parking lot and lay our video cards in the parking spaces for all to see.

What we do use them for is playing games. Some, if not all, of which will be ported from either the playstation or xbox ecosystem. An ecosystem we have to pay attention to in order to know how we upgrade.

That entails that we pay attention to price structure. Because that determines what will attract us to buy, not what we are brand loyal to.

Remember, upgrading the PC has and will always be a hobby. The purpose of the hobby is to play the games I enjoy. That hobby can fluctuate between PC or console depending on current market trends. So it would behoove the GPU market to pay attention, for example.

Yes exactly, we use our gaming PCs to play games. Most of the games I play on PC are not on console, so it's completely irrelevant to me what is or isn't happening in consoles. Every time there is a game on console that has cross play, the console gamers get demolished by PC users and accuse every single one of them of being "hackers". I've played CS:GO on and off for 5 years, I usually come mid to high table and sometimes come top of the board. I suck at Fortnite. I can count the number of times I have honestly thought someone was "hacking" in the last 10 years of competitive online games on 1 hand.

My analogy was pointing out that this is a PC forum Graphics Card section (the name being overclockers.co.uk is a pretty big clue). Banging on about consoles repeatedly, as people seem wont to do, isn't going to do anything productive because fundamentally most "PC gamers" are PC gamers for a reason. Some have both. My kids wanted one because of the shiny adverts but even my 6 year old plays on PC about 90% of the time and the console only gets switched on when they want to play that infernal dance game. The quality of the graphics plays into their choice precisely 0%.

What the price of a console is vs. what the cost of a PC is is completely irrelevant to me, just as the price of a BMW is irrelevant to me as I don't like them. People have been saying consoles will kill PC gaming for the best part of 20 years. It hasn't happened and PCs continue to break records in terms of sales numbers and values. It's nonsense and will continue to be nonsense.

If you honestly don't care which you play on, PC or console, then clearly, based on sales data of the last 20 years, "the market" isn't you.

Surely the most important aspect will be asset quality. If the next generation of games are getting a bump up in asset quality then all cards regardless of resolution will need a bump up in VRAM capacity.

Less powerful cards = lower resolutions = you need less VRAM. 6-8GB VRAM, combined with all of their memory efficiency tech, is likely (I'll be interested to confirm it either way) suitable for 1440p. I would be interested to see Gamers Nexus run a detailed article on this and I may shoot them an email to consider it as I could find no good comparisons of VRAM usage from this year on the interwebs when I searched.
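To put rough numbers on the resolution side of that argument, here is a minimal sketch of how the size of a single render target grows with resolution. It assumes 4 bytes per pixel with no compression or MSAA, which is a simplification; the function and constant names are just for illustration. Textures and other assets are not counted here, and they do not shrink with resolution, which is the asset quality point.

```python
# Rough size of one uncompressed colour buffer at common resolutions.
# Assumption (not from the thread): 4 bytes per pixel, no MSAA, no compression.
# Real games keep many such buffers plus textures, so this only shows the
# part of VRAM use that scales with resolution.
BYTES_PER_PIXEL = 4

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def render_target_mib(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> float:
    """Size of one uncompressed render target in MiB."""
    return width * height * bytes_per_pixel / (1024 ** 2)


sizes = {name: render_target_mib(w, h) for name, (w, h) in RESOLUTIONS.items()}

for name, mib in sizes.items():
    print(f"{name}: {mib:.1f} MiB per render target")
```

Under these assumptions a 4K target is four times the size of a 1080p one, but each buffer is still tens of MiB, so how much total VRAM a game needs depends heavily on the assets it loads.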

It will easily beat it imo.

Question is, if they can fit 2080 performance in a console, why are you doubting their ability to match a 2080Ti performance in a big fat graphics card?

If anything even just looking at a 5700XT, it would be bizarre if they could not match a 2080Ti on a better process and new architecture. Not to mention they can use a bigger die size.

The answer is that they will need a fair bit of improvement with rdna2. It will probably end up needing 4000-4500 shaders or so to match the Ti, even if it ends up being slightly better than Turing is (which rdna1 isn’t at the minute).

Now that’s not to say they can’t, just that it’s going to be a big and expensive card nonetheless.

Now rdna is already 7nm. So the improvements to come will only be architectural. IMO the big jump has already happened when they moved to rdna1.

The XSX is only a 3328 core part. PS5 only a 2304 core part. Quite a big difference between them.

The Ti has roughly 50% more shaders/CUDA cores than an RTX 2080 yet we don’t see 50% more performance. The 1080Ti had only 40% more than the 1080 for reference. Chances are we could be getting to a point where they just don’t scale as well anymore. And we see that comparing these cards at say 1080p.

The Ti at 4k is still quite a big jump over the 1080Ti, especially since release, where it’s now 30-50% ahead in most games.
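The shader-count percentages above can be checked with a few lines of arithmetic. This is only a sketch: the console counts are the ones given in the post, while the desktop card counts are the publicly listed specs and are filled in here as an assumption rather than taken from the thread.

```python
# Shader counts (CUDA cores / stream processors).
# XSX and PS5 figures come from the post; the GeForce figures are the
# publicly listed specs, added here for illustration.
SHADERS = {
    "GTX 1080": 2560,
    "GTX 1080 Ti": 3584,
    "RTX 2080": 2944,
    "RTX 2080 Ti": 4352,
    "PS5": 2304,
    "XSX": 3328,
}


def pct_more(bigger: str, smaller: str) -> float:
    """Percentage by which `bigger` has more shaders than `smaller`."""
    return (SHADERS[bigger] / SHADERS[smaller] - 1) * 100


for big, small in [
    ("RTX 2080 Ti", "RTX 2080"),
    ("GTX 1080 Ti", "GTX 1080"),
    ("XSX", "PS5"),
]:
    print(f"{big} vs {small}: {pct_more(big, small):.1f}% more shaders")
```

On those counts the 2080 Ti has a little under 48% more shaders than the 2080, the 1080 Ti has 40% more than the 1080, and the XSX has about 44% more than the PS5. Shader count says nothing about clocks or memory bandwidth, so it is only one input to the scaling question.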

Soldato

- Joined

- 6 Jan 2013

- Posts

- 22,194

- Location

- Rollergirl

It will easily beat it imo.

Question is...<snip>

Having experienced AMD for the first time with my Vega 64, the questions I'm asking are:

- Will it be less expensive for the same performance?

- Will the control panel be simplified to a point where I don't need Google to use it?

- As above, will overclocking get anywhere near the simplicity of Nvidia?

- Will it be plug and play out of the box, without the need for all this undervolting carry on? Even that isn't a case of just tweaking a single voltage slider.

There's more to a GPU experience than raw power.

In your own words: This is the first time in 20 years in console history, that the CPU of the Console is equal to the latest Desktop

Obviously it is not. The 3950x drops in the same socket as an 8core/16 zen. And there's also the 3900x. I'm not talking about threadripper.

Ironic.

Going off topic now so I will rein it in after this before I get whacked by a mod.

This will surprise people but the 2013 Xbox CPU, with the 8 Jaguar cores at 1.75GHz, had the approximate CPU compute power of a K1 Nvidia Shield or a 2012 Google Chromebook.

In short, the 2013 Xbox CPU was awful at launch. The IPC, clock, architecture, RAM bus speed - the lot.

In CPU terms, this new 2020 Xbox will be as fast as the same 2020 Xbox running a 3900x at the 99th percentile, in the majority of gaming scenarios.

We have proven it on the forum time and time again in our own independent performance testing, games programmers don’t (with small exceptions) use all the extra cores they are given beyond 6. Games are not as multithreaded as they should be.

Our £7k rigs worked well in 3dmark and Cinebench but they meant little when running Batman or Crysis benchmarks. IPC mattered, not cores.

If you took a 3900x and plugged it into the socket of the 2020 Xbox X and did a blind test against the standard version, on a TV, in-game, I doubt anyone would notice the difference.

Hence, my claim that we have console gaming performance parity with a Desktop PC is simply because the IPC performance of the Zen2 heart and the clock speed is now as fast as a desktop for its intended purpose - playing games.

Microsoft and Sony could have gone quicker on the CPU clocks but I bet that in their internal CPU clock testing they came to the same conclusion that this board came to years ago: CPU clock only gets you to a certain FPS point. Beyond that, you are burning heat budget to beat a benchmark; the actual user experience is not improved and you are better off either upgrading your CPU architecture or, more effectively, spending the heat budget on GPU clock and faster, hotter VRAM.

Like Nvidia did with the latest Super cards, see, i got Nvidia in there twice. #Proudface.
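The "CPU clock only gets you to a certain FPS point" argument can be illustrated with a toy bottleneck model. This is a deliberate simplification, assuming each frame waits for whichever of the CPU or GPU takes longer; the millisecond figures are made up for illustration and are not measurements from any console or PC.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when each frame waits for the slower of CPU and GPU."""
    return 1000.0 / max(cpu_ms, gpu_ms)


GPU_MS = 16.0  # hypothetical GPU time per frame, in milliseconds

# Make the CPU progressively faster while the GPU stays the same.
for cpu_ms in (20.0, 16.0, 12.0, 8.0):
    print(f"CPU {cpu_ms:>4.1f} ms, GPU {GPU_MS} ms -> {fps(cpu_ms, GPU_MS):.1f} fps")
```

In this model the frame rate rises from 50 to 62.5 fps as the CPU time drops from 20 ms to 16 ms, then stays at 62.5 fps no matter how much faster the CPU gets, because the GPU has become the limit. Real games overlap CPU and GPU work in more complicated ways, so treat this as a sketch of the idea only.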

Soldato

- Joined

- 28 May 2007

- Posts

- 10,211

Having experienced AMD for the first time with my Vega 64, the questions I'm asking are:

- Will it be less expensive for the same performance?

- Will the control panel be simplified to a point where I don't need Google to use it?

- As above, will overclocking get anywhere near the simplicity of Nvidia?

- Will it be plug and play out of the box, without the need for all this undervolting carry on? Even that isn't a case of just tweaking a single voltage slider.

There's more to a GPU experience than raw power.

First point nobody can answer but atm you get more performance on AMD for your money.

The control panel is already simple, you are probably just used to Nvidia's old out of date interface.

Most PC enthusiasts don't want it to be like Nvidia as it takes away all the control and fun. You do know there is an auto OC button in there. You can't get any more simple than one button press.

My Vega 64 is plug and play but I do have the option to improve things by tinkering. Again this is classed as fun for most on here and why the pc is a great platform.

To me it sounds like you need to be looking at next gen consoles for all that plug and play stuff.

Going off topic now so I will rein it in after this before I get whacked by a mod.

This will surprise people but the 2013 Xbox CPU, with the 8 Jaguar cores at 1.75GHz, had the approximate CPU compute power of a K1 Nvidia Shield or a 2012 Google Chromebook.

In short, the 2013 Xbox CPU was awful at launch. The IPC, clock, architecture, RAM bus speed - the lot.

In CPU terms, this new 2020 Xbox will be as fast as the same 2020 Xbox running a 3900x at the 99th percentile, in the majority of gaming scenarios.

We have proven it on the forum time and time again in our own independent performance testing, games programmers don’t (with small exceptions) use all the extra cores they are given beyond 6. Games are not as multithreaded as they should be.

Our £7k rigs worked well in 3dmark and Cinebench but they meant little when running Batman or Crysis benchmarks. IPC mattered, not cores.

If you took a 3900x and plugged it into the socket of the 2020 Xbox X and did a blind test against the standard version, on a TV, in-game, I doubt anyone would notice the difference.

Hence, my claim that we have console gaming performance parity with a Desktop PC is simply because the IPC performance of the Zen2 heart and the clock speed is now as fast as a desktop for its intended purpose - playing games.

Microsoft and Sony could have gone quicker on the CPU clocks but I bet that in their internal CPU clock testing they came to the same conclusion that this board came to years ago: CPU clock only gets you to a certain FPS point. Beyond that, you are burning heat budget to beat a benchmark; the actual user experience is not improved and you are better off either upgrading your CPU architecture or, more effectively, spending the heat budget on GPU clock and faster, hotter VRAM.

Like Nvidia did with the latest Super cards, see, i got Nvidia in there twice. #Proudface.

I’m guessing they have gone with the die they did because it was easier for AMD to fab on both being 7nm. It would cut down the costs as the cpu is already in production. Perf wise at 4k the difference between say a 10 year old i5 2500 and a brand new 3950x is minimal.

It will more so be to utilise the massively improved computational power and cache of the cpu for other tasks. Those other tasks likely being Raytracing and the new SSD as well as other background apps and features (game resume etc) without bogging down the CPU during actual gameplay.

Soldato

- Joined

- 6 Jan 2013

- Posts

- 22,194

- Location

- Rollergirl

To me it sounds like you need to be looking at next gen consoles for all that plug and play stuff.

Yes, you're talking to a guy with 13,800 odd posts, a member since 2013, and one search through the project log or MM forums would tell you that your assumption is well wide of the mark.

Anyway, I don't want to stray too far off topic. There's more to Nvidia than raw power, and I'm speaking from experience using both. That's the point I'm making.

The answer is that they will need a fair bit of improvement with rdna2. It will probably end up needing 4000-4500 shaders or so to match the Ti, even if it ends up being slightly better than Turing is (which rdna1 isn’t at the minute).

Now that’s not to say they can’t, just that it’s going to be a big and expensive card nonetheless.

Now rdna is already 7nm. So the improvements to come will only be architectural. IMO the big jump has already happened when they moved to rdna1.

The XSX is only a 3328 core part. PS5 only a 2304 core part. Quite a big difference between them.

The Ti has roughly 50% more shaders/CUDA cores than an RTX 2080 yet we don’t see 50% more performance. The 1080Ti had only 40% more than the 1080 for reference. Chances are we could be getting to a point where they just don’t scale as well anymore. And we see that comparing these cards at say 1080p.

The Ti at 4k is still quite a big jump over the 1080Ti, especially since release, where it’s now 30-50% ahead in most games.

Are they not going to a more mature 7+ manufacturing process? Also 5700XT is not a million miles away from 2080Ti performance and it is 251mm2 so there is a lot of room right there alone.

I think you are underestimating the performance leap rdna 2 will bring. They will have been working on that for a very long time on consoles. It will bring nice efficiency gains imo. In my mind beating 2080Ti is not even a question. The question is how close can they come to the 3080Ti/Titan. That will depend on how well it scales.

We will see I suppose. I am not expecting it to match or beat nvidia’s top offerings myself, but as I said 2080Ti performance will be easy to match or beat. I will eat my hat if it doesn’t and you can quote me on that!
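As a back-of-the-envelope check on the "lot of room" point, here is a naive estimate of die area if the 5700XT's 251mm2 were scaled linearly up to the 4000-4500 shader range mentioned earlier in the thread. Linear scaling is a crude assumption: it ignores memory controllers, cache, display and media blocks, any RT hardware, and process changes. The 2560 shader count for the 5700XT and the 754mm2 figure for the 2080Ti's die are publicly listed specs added here for comparison, not numbers from the post.

```python
NAVI10_SHADERS = 2560      # 5700XT stream processors (public spec, assumption here)
NAVI10_AREA_MM2 = 251.0    # 5700XT die area quoted in the post
TU102_AREA_MM2 = 754.0     # 2080Ti die area (public spec, for comparison only)


def naive_area_mm2(shaders: int) -> float:
    """Die area if area scaled linearly with shader count (crude assumption)."""
    return NAVI10_AREA_MM2 * shaders / NAVI10_SHADERS


for target in (4000, 4500):
    area = naive_area_mm2(target)
    print(f"{target} shaders -> ~{area:.0f} mm2 "
          f"({area / TU102_AREA_MM2:.0%} of the 2080Ti die)")
```

Even with that crude scaling, a 4500 shader part lands somewhere around 440mm2, well under the size of the 2080Ti die, so die area by itself does not look like the obstacle. Cost, power and how well performance scales with the extra shaders are separate questions.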

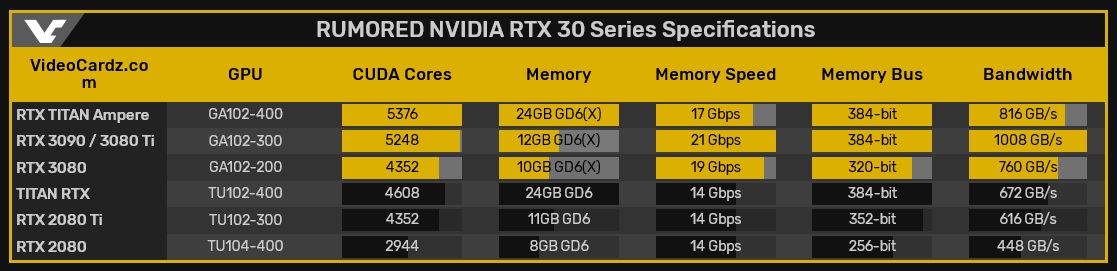

If these specs are true, then that means the 3090 will be sub-ti standards hence the lack of ti.

Presumably this means there's a 3090ti coming later, so the 3090 is in essence the new x80 and the 3080 is in essence the new x70 (similar to how the GTX680 and GTX670 were the new GTX570 and GTX560 respectively, with the GTX580 replacement of that series called the GTX780).

Question is where will the pricing fall...

Yeah, that would suck. They would get even more stick online if they do this. But I guess if it does not impact their bottom line, they won’t care.

If these specs are true, then that means the 3090 will be sub-ti standards hence the lack of ti.

Presumably this means there's a 3090ti coming later, so the 3090 is in essence the new x80 and the 3080 is in essence the new x70 (similar to how the GTX680 and GTX670 were the new GTX570 and GTX560 respectively, with the GTX580 replacement of that series called the GTX780).

Question is where will the pricing fall...

If these specs are true, then that means the 3090 will be sub-ti standards hence the lack of ti.

Apparently it's a marketing thing. Nvidia have found that there's been consumer confusion about the difference between Ti and Super. So the 3090 will be the replacement for the 2080 Ti. Presumably there will be a 3090 Super refresh 12 months later.

Lol, I just ran out of VRAM on my 2080, emulating the N64 in RetroArch, which crashed back to desktop.

..in fact, to run some games that ran in the N64's 'hi-res' 480i mode & then with an 8x resolution upscale, would need over 16GB of VRAM!

I may have to spend £2.5K for the RTX Titan just to play Perfect Dark at 4K

Soldato

- Joined

- 14 Aug 2009

- Posts

- 3,263

games programmers don’t (with small exceptions) use all the extra cores they are given beyond 6.

That's simply because the current consoles have about 6 cores available to developers, so not much incentive to code for more. Give them more and they'll use it.

I think you are underestimating the performance leap rdna 2 will bring. They will have been working on that for a very long time on consoles. It will bring nice efficiency gains imo. In my mind beating 2080Ti is not even a question. The question is how close can they come to the 3080Ti/Titan. That will depend on how well it scales.

Will it matter if it will have the same inflated cost?

The whole talk about RT co-processor or 3D stacking will mean for sure a high price tag unless both companies are willing to accept a smaller profit margin.

I guess the battle will be in the mainstream. If something similar in performance to the GPU from the consoles comes in around $299 tops, it will be a step in the right direction.

snip

Thinking about what you said about the 3090...

Do you think there could be a 3090 ti later on?

Soldato

- Joined

- 30 Nov 2011

- Posts

- 11,499

If these specs are true, then that means the 3090 will be sub-ti standards hence the lack of ti.

Presumably this means there's a 3090ti coming later, so the 3090 is in essence the new x80 and the 3080 is in essence the new x70 (similar to how the GTX680 and GTX670 were the new GTX570 and GTX560 respectively, with the GTX580 replacement of that series called the GTX780).

Question is where will the pricing fall...

If anything, looking at chip naming, the 3080 has been dragged up to the xx102 level, not the 3090 coming down.

Are they not going to a more mature 7+ manufacturing process? Also 5700XT is not a million miles away from 2080Ti performance and it is 251mm2 so there is a lot of room right there alone.

I think you are underestimating the performance leap rdna 2 will bring. They will have been working on that for a very long time on consoles. It will bring nice efficiency gains imo. In my mind beating 2080Ti is not even a question. The question is how close can they come to the 3080Ti/Titan. That will depend on how well it scales.

We will see I suppose. I am not expecting it to match or beat nvidia’s top offerings myself, but as I said 2080Ti performance will be easy to match or beat. I will eat my hat if it doesn’t and you can quote me on that!

Saved for later

I think they could do it and I bet you're probably right, they could match the Ti, but it's still going to take a big core part even with rdna2. They aren't going to be matching it with the next 2560 core 5800xt for example.

I doubt they will be matching ampere on the top end though. In my opinion, when nvidia go 7nm I am expecting big gains, probably 50% or so. Doubtful AMD will get that even with 7nm+.