
LOL just as we're talking about this

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

> Here's a real-life example of the 12V-2x6 connector quandary. I'm in the process of installing my new Antec HCG Pro 1200W PSU and MSI 5080, and MSI states I should use the PSU-included 12V-2x6 cabling if available. I've posted the following images in the PSU forum as well (sorry for the cross-posting). What do you think of the cabling provided?
>
> Pin Images

I think even der8auer or buildzoid would struggle to look at those pics and go "caw, that's a good'un that!".

Imo it lands on Nvidia. I find it a little unfair to be putting the responsibility for this on the PSU and cable manufacturers. Why should other companies be required to change their spec, probably at a cost, because of poor Nvidia design decisions?


> It's not about blaming PSU manufacturers. They've built it to spec.
>
> It sounds like a new spec is required with PSUs now becoming 1kW beasts. Having the potential for all of that to go through a single wire with no protection on the PSU side is absurd. A misbehaving device will burn your house down.

Ok, but how is the PSU meant to know what can or can't be drawn from a particular cable?

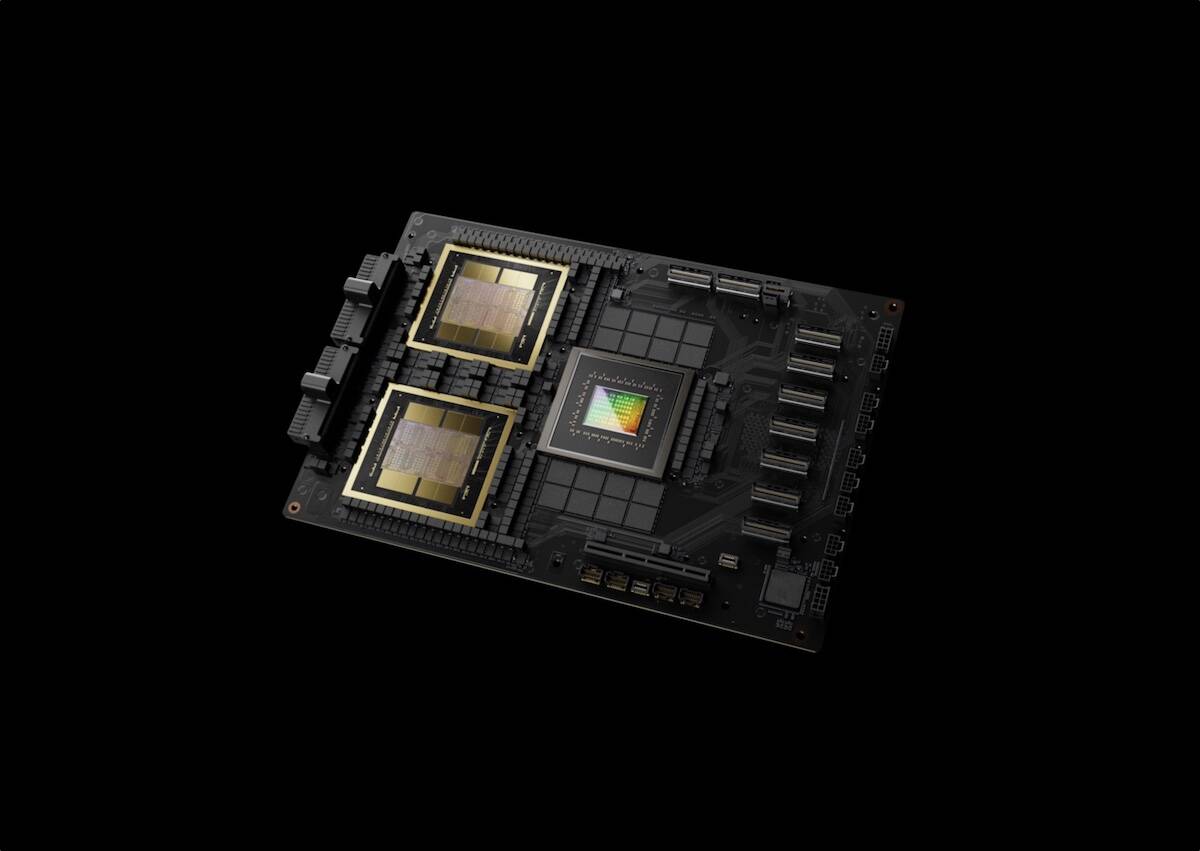

> What is the connector used by this 1000W graphics card?
>
> NVIDIA B200 SXM 192 GB Specs (www.techpowerup.com): NVIDIA GB100 x2, 1837 MHz, 16896 Cores, 528 TMUs, 24 ROPs, 98304 MB HBM3e, 2000 MHz, 4096 bit

Through 8 PCI-E 8-pins...

Ok, but how is the PSU meant to know what can or can't be drawn from a particular cable?

Here's a real-life example of the 12V-2x6 connector quandary. I'm in the process of installing my new Antec HCG Pro 1200W PSU and MSI 5080, and MSI states I should use the PSU-included 12V-2x6 cabling if available. I've posted the following images in the PSU forum as well (sorry for the cross-posting). What do you think of the cabling provided?

Pin Images

> No, it doesn't wait for the next frame. The input lag would double if it did that, and it doesn't.

You have 2 rendered frames which accept inputs, and then multi frame gen adds 1-3 frames between them. The time between the 2 normally rendered frames increases slightly for each generated frame that's inserted, and the latency is derived from that gap between the 2 normally rendered frames.

> Because the cable has a spec. PCI-E 8 pin is 150W, with each of the 3 power pins drawing 50W. With a 12 pin cable, with 6 power pins, it's 100W each.
>
> If someone uses an adaptor to combine 8 pin wires into a 12 pin wire, then conveniently, 50W + 50W = 100W.
>
> The PSU will require some transient spike detection, but should not permit continuous out-of-spec power. This keeps everything within the spec of the cables and connectors.

So if I make a device that uses an 8 pin PCI-E power connector and needs 75W on one of the pins and 50W on another, I wouldn't be able to do that even if I specced my cable correctly.
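For anyone who wants the per-pin figures above as numbers, here is a rough sketch of the arithmetic. It assumes a nominal 12 V rail and a perfectly even split across the power pins, neither of which is stated in the thread, and the 600 W total is just 6 x 100 W from the post above. The function name is made up for illustration.

```python
# Per-pin power budget implied by the figures quoted in the thread.
# Assumptions (not from the thread): a nominal 12 V rail and an even split
# across power pins. per_pin() is a hypothetical helper name.

RAIL_VOLTS = 12.0


def per_pin(total_watts, power_pins, volts=RAIL_VOLTS):
    """Return (watts, amps) per power pin for an evenly shared load."""
    watts = total_watts / power_pins
    return watts, watts / volts


pcie_8pin = per_pin(150, 3)   # 150 W over 3 power pins
twelve_pin = per_pin(600, 6)  # 6 pins x 100 W each = 600 W total

for name, (watts, amps) in (("PCI-E 8-pin", pcie_8pin), ("12-pin", twelve_pin)):
    print(f"{name}: {watts:.0f} W per pin, about {amps:.2f} A at {RAIL_VOLTS:.0f} V")
```

Under those assumptions each 12-pin power pin carries twice the load of an 8-pin power pin, which is the "50W + 50W = 100W" adaptor point. An uneven split like the 75W/50W case in the question is exactly what this even-share model cannot represent.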

> Looks fine.
>
> The connector is so small that I highly doubt there is a problem there.

Going to give it a go.

> I think even der8auer or buildzoid would struggle to look at those pics and go "caw, that's a good'un that!".

I'm still going to give it a go and take a punt on it. Wish me luck.

> You have 2 rendered frames which accept inputs, and then multi frame gen adds 1-3 frames between them. The time between the 2 normally rendered frames increases slightly for each generated frame that's inserted, and the latency is derived from that gap between the 2 normally rendered frames.

Interpolation, like TVs do, doubles the input lag. DLSS FG must be doing something else, as it doesn't double input lag.

> I'm still going to give it a go and take a punt on it. Wish me luck.

Put your fingers on it while running a game and see if any of the wires are getting hotter than the others.

> Interpolation, like TVs do, doubles the input lag. DLSS FG must be doing something else, as it doesn't double input lag.
>
> I'm not going to argue with your description as you've not said pre-rendered, but if you're suggesting DLSS FG is just interpolation then I think you'd be wrong.
>
> DLSS frame generation is using extrapolation according to everything I can find. The XE in Intel XeSS is precisely because Intel claim it's doing extrapolation, and the articles covering it say it's because they borrowed the idea from Nvidia's frame gen.

It doesn't double latency. However, there is a penalty for each generated frame added, because the time between the 2 normally rendered frames increases with each one.

> It doesn't double latency. However, there is a penalty for each generated frame added, because the time between the 2 normally rendered frames increases with each one.
>
> So for instance, if you're running 60fps native and then apply FG, your native base fps actually decreases, so it'll drop to say 55fps for FG x1, 50fps for FG x2 and 45fps for FG x3.

Yeah, although the drop going to x3 and x4 is less than the drop from native to turning on frame generation.
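To put rough numbers on that example, here is a small sketch of the frame-time arithmetic. The base fps values are the illustrative ones from the post, not measurements. It assumes "FG xN" means N generated frames per rendered frame (going by the "adds 1-3 frames" description) and that displayed fps is simply base fps x (1 + N). The function name is hypothetical.

```python
# Frame-time arithmetic for the example figures quoted in the thread.
# Assumptions (not from the thread): "FG xN" = N generated frames per
# rendered frame, and displayed fps = base fps x (1 + N).
# frame_gen_stats() is a hypothetical helper name.


def frame_gen_stats(base_fps, generated):
    """Return (gap between rendered frames in ms, displayed fps)."""
    gap_ms = 1000.0 / base_fps
    return gap_ms, base_fps * (1 + generated)


# (generated frames per rendered frame, base fps) from the example post
examples = [(0, 60), (1, 55), (2, 50), (3, 45)]

for generated, base_fps in examples:
    gap_ms, shown = frame_gen_stats(base_fps, generated)
    label = "native" if generated == 0 else f"FG x{generated}"
    print(f"{label}: base {base_fps} fps, "
          f"{gap_ms:.1f} ms between rendered frames, {shown} fps displayed")
```

With those example figures the gap between rendered frames grows from roughly 16.7 ms at native to roughly 22.2 ms at FG x3, so the base frame time gets worse with each added frame but is nowhere near doubled.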