Lol. Can I come with you? Tough, I'm coming anyway. (Not like that, you dirty rats.)

BF4



I was gonna post about the date format but decided it wasn't worth it.

x8 AA

So in Thief we should expect to see a 770 faster than a 290X and a 660 beating out a 7950 boost? I bet you won't. Your example is poor and does not work because GameWorks libraries are not part of Thief. The engine may be the same and the engine may favour Nvidia, but it does not explain the results in question at all.

UrrrGHHh. The 290 had barely been on the market when Arkham Origins launched, Matthew. They fixed most of the performance gripes the day after release. What more do you want?

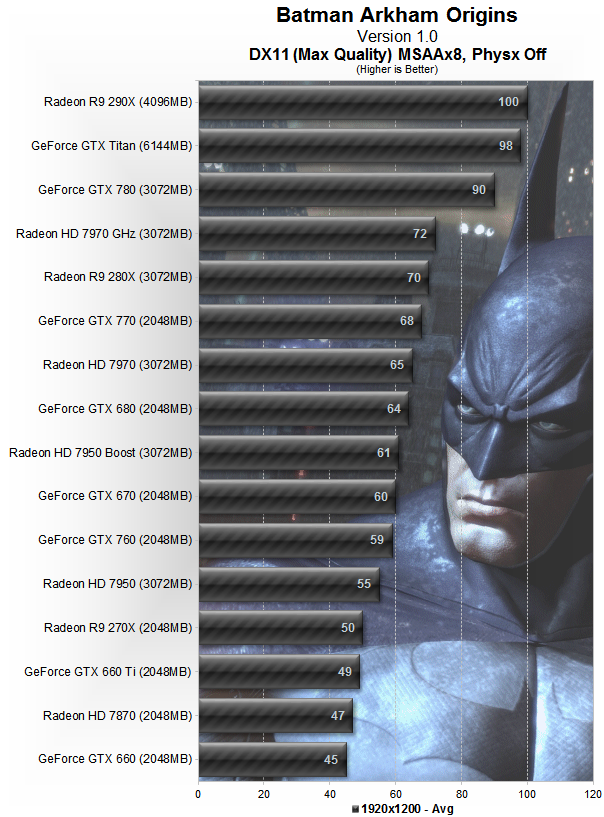

It's the same game engine. It COULDN'T BE MORE relevant. It's only not relevant to you because you've convinced yourself that GameWorks is the sole reason for it, when I'm showing you another Unreal Engine 3 title that NV cards are dominant in. If I install Arkham City, I bet you I could reproduce almost exactly the same scenario when using 8XAA.

Enough with the corporate conspiracies.

The numbers don't lie though, Matt. Look at all the other game bench threads: Nvidia owns all of them, except Batman...

You have a pair of 290s, but if you want to play with FXAA, be my guest. I think MSAA looks great.

1. Score 89.9, GPU nvTitan @1320/1901, CPU 2500k @5.0, khemist

2. Score 89.3, GPU 780ti @1250/1962, CPU 4770k @4.5, zia

3. Score 88.8, GPU 780ti @1295/1950, CPU 4960X @4.7, MjFrosty, 322.21 drivers

4. Score 86.5, GPU nvTitan @1280/1802, CPU 3930k @4.8, whyscotty

5. Score 86.1, GPU 780ti @1208/1800, CPU 4770k @4.6, Dicehunter, 331.82 drivers

6. Score 85.3, GPU nvTitan @1280/1879, CPU 3930k @4.625, Gregster, 332.21 drivers

7. Score 84.8, GPU 780 @1400/1850, CPU 3770k @5.0, Geeman1979

8. Score 84.5, GPU 290X @1200/1625, CPU 3970X @4.9, Kaapstad, 13.11 beta 8 drivers

9. Score 83.5, GPU 780 @1424/1877, CPU 3930k @5.0, whyscotty, 332.21 drivers

10. Score 82.9, GPU 780ti @1150/1750, CPU 4770k @4.4, zia

Regardless though, it changes nothing. The fact is they confessed to the thing we all argued about for ages.

I accept it and I don't see the issue. Why would you run a game with only FXAA on a 290X? The arguments have always been that when you turn the dials up, then "X" GPU really starts to shine.... Not when you turn the dials down lol

And that is me out for the day as well. Sadly I will be drinking heavily all day and betting money on nags

They didn't confess to anything. We always knew they were closed libraries and that they don't give the source code to AMD. The argument wasn't about that; the argument was that this totally prevents AMD from making ANY changes to their drivers to alter the performance, which is the bit that is laughably false.

3D gaming can't use MSAA, so basically I shouldn't touch any more GW titles to play in 3D, as my 290X is going to be castrated.

Thanks Nvidia, thanks a lot.

Nvidia have fessed up and that's cool because AMD got off their **** and created Mantle that doesn't gimp Nvidia in direct competition with AMD in DX in any way?

Like I said, "That is your choice." It makes no odds to me if you buy or don't buy a GameWorks title. All I have done is point out that the 290X is faster than my Titan in Batman: AO, and yet in every other game's benchmarks I can beat the 290X.

I have never heard people moan before about getting more fps than me

I have put 78 hours into this game and it is stunning in 3D.

Well done, deflected everything I said about an enforced performance penalty in 3D.

Most on AMD won't get to enjoy BAO in 3D. If that's the way to promote PC gaming, well, Nvidia certainly knows how to stifle PC gaming.

Please, no point replying btw unless it's to directly address closed AMD driver optimization with the end result being reduced performance in 3D.