Isn't there a bridge in the box??

Don't think so as there are several different sizes to match motherboard spacing.

There are also problems SLIing reference and non-reference 1080s with a hard bridge due to height differences.

AMD already told reporters after the previous event that the 8GB version of the RX480 was only, well, I forget exactly which, $229 or $239. That absolutely does leave room for a different card at $300, but it may mean that's the recommended max AIB price, though again adding 30% or so to the cost for a custom cooling solution is... excessive.

X being ten in Roman numerals, following the other R designations Radeon uses for products then? Not saying it's a cert but I wouldn't be as confident as you seem.

Yup, this is the issue. I'm honestly expecting small Vega to give the 1080 a bit of a pasting, and I wouldn't be surprised if big Vega beat GP102 comfortably as well.

If small Vega has HBM2, it will destroy the 1080 in overall performance and performance/watt.

Easy. It offers enough bandwidth for the current chips and is cheaper than HBM, and with the power requirements of the current generation you don't need the power savings from HBM.

AMD made that mistake on the Fury.

There will be chips from both sides which will need HBM2, but these aren't them.

In fact, I am pretty sure the Titan and 1080ti won't use HBM either, despite what people are hoping for.

GDDR5x is used on literally one card... yet has become the memory to use, even though HBM2 is on two cards (two supposedly available... but no one has actually seen a working GP100).

This is a fact: if the 1080 used HBM to achieve its bandwidth, it would be in the region of 150W instead of 180W, and it would be dramatically harder for AMD to beat its performance/w with a GDDR5 RX480.

If Nvidia wanted to give the 1080 512GB/s of bandwidth, what with it losing ground to Fury X and 980ti at 4k compared to 1080/1440p, it would likely have to increase power by another 20W or so to achieve it. HBM wouldn't take an extra 5W to do that.

The reason the Nano, using an older architecture, could beat Nvidia's newer architecture on performance/w was purely HBM.

Whatever the power limit on GP102 is, Nvidia will run into the situation where the memory will use a lot more power so the gpu core can use a lot less power within a reasonable single card wattage. Again HBM allowed a Nano to match a newer architecture in performance/w with an older one. Now think Vega, newer than Pascal realistically with HBM vs Pascal with gddr5x which uses significantly more power at any given level of bandwidth. AMD is going to have a HUGE advantage.

We also both have some supposedly leaked specs, it's funny because when you were using GP102/GP104/anything Nvidia leaked specs it's fine, but leaked Vega specs is a no because you say so.

From GP106 > GP104 die size, from most generations gaps in die sizes, from RX480 die size it's incredibly reasonable to presume small Vega will be a bigger die than GP104, and based off supposed architectural efficiency of Polaris without HBM, it's extremely naive to believe a larger Vega core with HBM won't beat a 1080.

Leaks put it as a circa 4000 shader part, it will probably be 350-400mm^2 both because of that shader count, the need to have a decent gap between it and RX480 die size/shader count, the need to have enough shaders to utilise HBM2 bandwidth and because leaks put it at 4000 shaders. All of that is errm... an idea of what Vega's specs are, and all of those ideas would have it comfortably ahead of the 1080 and what's more is if it uses HBM2, it can probably do that in the same power as the 1080, if it uses gddr5(x) it will likely use a bit more power but maybe still have better performance/w.

Kaap with more of his ridiculous rhetoric on the demerits of HBM. As many have said before, Fiji performance was nothing to do with HBM, get over it.

The only reason GDDR5X is being used on GP104 1080s is that it is cheaper to implement, as you do not need to worry about interposers etc. Nothing to do with HBM's performance.

I don't get what you have against HBM memory.

As we all know HBM1 performance can be updated to work entirely differently by drivers... so somehow it is still the HBM1 that is the problem.

From what I recall, doesn't Fury X beat a 980ti at 1080p, and at every resolution and every setting except Hyper (de-optimised memory storage Nvidia are paying devs to use to hurt 4GB cards, both Nvidia and AMD ones) and lowest settings... funnily enough?

But still you know, HBM1 sucks, that it's beating the 980ti is irrelevant.

Fury is an unoptimised, overly large core built on an architecture not particularly designed for that number of shaders. More than anything it was a test bed for HBM, so they could implement it, get the production chain for HBM1 established, start ramping that up, learn about HBM and how to optimise their next architecture that will use it, and make improvements. Vega will bring chips with architecture tweaks designed for both HBM and a higher number of shaders.

Fury X is the reason AMD is able to bring HBM2 to higher volume and cheaper products than Nvidia will manage this generation.

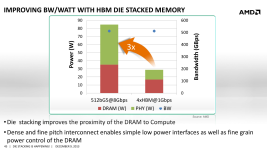

512GB/s via GDDR5 splits into roughly 35W for the chips and 50W for the PHY layer; via HBM1 it's roughly 17W for the chips and 12W for the PHY. GDDR5x doesn't reduce the PHY layer power much at all: regardless of bus size or chip power, moving X amount of data off a chip takes Y power without a huge amount of difference, so a 512bit bus with 6Gbps chips or a 256bit bus with 12Gbps chips will use about the same PHY power. You'll save maybe 10W at 512GB/s using GDDR5x over GDDR5, as you use half the chips, but the chips themselves run faster and use more power.
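
To put rough numbers on the bus width versus chip speed point: peak bandwidth is bus width times per-pin data rate, divided by 8 to get bytes. A minimal sketch in Python (the function names are my own, purely for illustration):

```python
def bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


def rate_needed_gbps(target_gb_per_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) needed to hit a target bandwidth on a given bus."""
    return target_gb_per_s * 8 / bus_width_bits


# The two configurations mentioned in the post.
wide_slow = bandwidth_gb_per_s(512, 6)      # 512bit bus, 6Gbps chips
narrow_fast = bandwidth_gb_per_s(256, 12)   # 256bit bus, 12Gbps chips
print(wide_slow, narrow_fast)               # 384.0 384.0

# Per-pin rate needed for 512GB/s on each bus width.
print(rate_needed_gbps(512, 512))           # 8.0
print(rate_needed_gbps(512, 256))           # 16.0
```

Both configurations come out at the same peak figure, which is the point: the same amount of data crosses the PHY either way.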

HBM2 makes this comparison even worse as it reduces chip power to achieve 512GB/s.

HBM will always use significantly less power; that 50W difference can't go away. That means a 250W high-end chip, which will probably use around 512GB/s or more, will spend 75W or so purely on memory, leaving 175W for the chip. AMD within the same power budget will use maybe 25W for the memory, leaving 225W for the GPU.
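
The budget arithmetic here can be checked with a quick sketch. All wattages are the estimates quoted in this thread, not measurements, and the names are made up for illustration:

```python
# Estimated memory power at ~512GB/s, as quoted in this thread (not measured).
GDDR5_CHIPS_W, GDDR5_PHY_W = 35, 50
HBM1_CHIPS_W, HBM1_PHY_W = 17, 12

gddr5_total_w = GDDR5_CHIPS_W + GDDR5_PHY_W   # chips + PHY
hbm1_total_w = HBM1_CHIPS_W + HBM1_PHY_W


def core_budget_w(board_power_w: float, memory_power_w: float) -> float:
    """Power left for the GPU core once memory has taken its share."""
    return board_power_w - memory_power_w


# 250W card: ~75W of memory power for GDDR5x vs ~25W for HBM2 (thread estimates).
gddr5x_core_w = core_budget_w(250, 75)
hbm2_core_w = core_budget_w(250, 25)

print(f"GDDR5 vs HBM1 memory power gap: {gddr5_total_w - hbm1_total_w}W")
print(f"Core budget with GDDR5x: {gddr5x_core_w}W, with HBM2: {hbm2_core_w}W")
print(f"Extra core power with HBM2: {hbm2_core_w / gddr5x_core_w - 1:.0%}")
```

On those estimates the HBM2 card has roughly 29% more power available for the core at the same board power.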

The bandwidth achievable with gddr5/x is not a problem, it never was. The problem is the power it will take to achieve it. HBM will always use significantly less power than GDDR5.

The sole reason an older GCN architecture could remotely rival Maxwell, a much updated one, in performance/w was HBM using much less power. It saved 50W. With GDDR5, Fury X would need to use over 300W or, more likely, have 500-1000 fewer shaders, either way reducing performance/watt massively and making a Nano completely unachievable: not the size, but the compelling performance in that sized package, or it would have been so loud and hot it wouldn't have worked.

HBM2 will do the same and be an even bigger difference vs even higher bandwidth high end chips this generation.

Are you serious? LOL!

Polaris is a price/perf/efficiency based part, HBM is still too expensive to put on a mainstream part.

The only person not thinking outside of his box is you.

Your argument about heat. It just blew my mind and I couldn't treat it as a serious comment given that it's grounded in bull poop.

No, it's because the chip's memory controller & arch can't SATURATE HBM. Please keep up.

Look Kaap, keep going around in circles, the only person making himself look daft is you. Plenty of people understand the issues with memory capacity for first gen HBM and where the issues are with Fiji performance, yet you keep up this little act since you have to be right about it when you are not.

And the same again with GP104: it is all about costs that go beyond the part itself, such as sorting out production lines. Which is why their HBM2 efforts are going towards GP100, since it is their first HBM-based part, just as Fiji was AMD's HBM/interposer pipe cleaner. Mainly it's because Nvidia can charge an arm and a leg for it and recover those production setup costs, since they have had less time to get this done than AMD, who have had years of R&D time to get it right.

Buying an AMD card doesn't make you not biased, it provides you with a platform to say you're not biased and think you're serious.

Pascal is hot with a very small area and a lot of heat... really... Literally only a few months ago you were saying Fiji ran hotter than Hawaii because you didn't understand the concept of power output compared to die area. You actually attempted to refute the concept and deny basic physics: when I pointed out that Fury X had a significantly lower W/mm^2 output than Hawaii, you insisted I was wrong and didn't know what I was talking about.

It's hilarious that back then you were arguing against an incredibly basic physics principle but now you're using it to defend a 1080.
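
For anyone who wants to check the W/mm^2 point themselves, it's one division. A rough sketch: the board powers and die sizes below are commonly quoted approximate figures that I'm assuming for illustration (board power also includes memory and VRM losses, so it overstates the core's share), and the names are hypothetical:

```python
def power_density_w_per_mm2(board_power_w: float, die_area_mm2: float) -> float:
    """Crude power density: board power divided by GPU die area."""
    return board_power_w / die_area_mm2


# (approximate board power in W, approximate die area in mm^2).
# These are assumed, commonly quoted figures, not measurements.
cards = {
    "Fury X (Fiji)": (275, 596),
    "290X (Hawaii)": (290, 438),
    "1080 (GP104)": (180, 314),
}

for name, (watts, area) in cards.items():
    print(f"{name}: {power_density_w_per_mm2(watts, area):.2f} W/mm^2")
```

With these inputs Fiji comes out lowest per mm^2 of the three and Hawaii highest, with GP104 in between.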

One, HBM would drop actual power usage coming from the memory controller by 30-35W at that bandwidth level, and two, the HBM dies are separate, so it wouldn't result in extra heat build-up but would in fact lead to a cooler-running 1080 core, lower power usage and higher sustained clockspeeds at the same power usage.

AMD didn't drop HBM, they used it on a product that is still sold, their next product uses HBM2. In the same way Nvidia is using gddr5 on a 1070 AMD is using GDDR5 on lower end cards also. There was never any intention to have HBM in every segment instantly due to cost and there was never any intention for Nvidia to use GDDR5x in every card due to cost. AMD have specifically stated the intention to use HBM2 and so have Nvidia, neither have dropped the technology but both will use the latest version of it.

But when Nvidia launches a GDDR5x card using 12Gbps chips, I'll be sure to spout the ridiculous idea that Nvidia has dropped using GDDR5x 10Gbps chips because they are a failed technology and no good... because that is the incredibly ridiculous argument you're making as a claim that neither AMD nor Nvidia will use HBM1 any more.

Pot calling the kettle black, you crack me up Kaap, keep it up.

There's a 200 euro difference between the 1070 and a 480; OC the 480 and you have the best card for 1440p and lower resolutions.

If you're at 4k, wait for Vega as it will blow away the 1080 in October.

The king, the 480, the card of the decade, is soon here.

Kaap, HBM is the way forward, end of story. Everything has its shelf life and GDDR5 is coming to the end of its at the top end. HBM and technologies like it will be taking over.

You could go pray at the shrine like Flopper and see if you are worthy of receiving such tech in the future if that's what worries you.

Drunkenmaster is right here. On all counts.

Which I don't think I've ever said before!

C'mon Kaap, this isn't a winnable argument man. I don't think anybody can prove with absolute certainty that your theories (or the theories you've heard) are completely untrue, but there's enough evidence suggesting they most likely are. Meaning that believing them over the more rational explanation is... irrational.