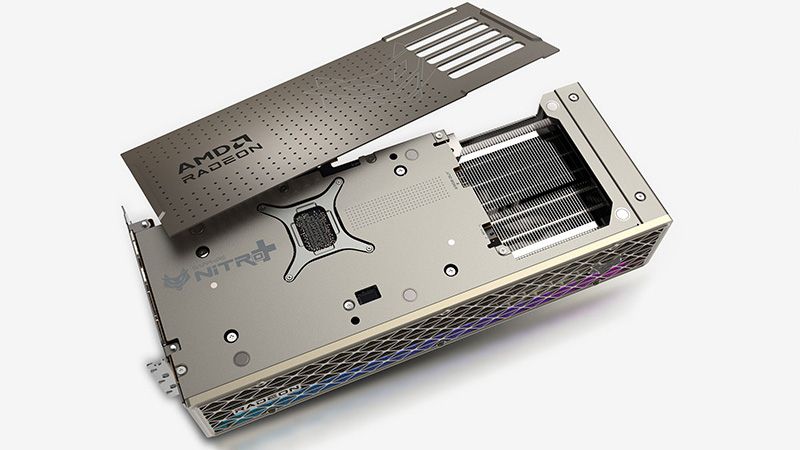

> I don't care what the GPU looks like

Each to their own, obviously. Hell, some folk don’t even want glass panel cases, while others want it ‘360’.

Mine is pimped out like an ’80s disco: a vertically mounted, watercooled GPU, fan LEDs that react to sound, the whole shebang. And yet the whole thing is hidden under my desk (for the time being), so I don’t even notice it when gaming. But I know she looks purdy, and that makes me happy.